pub mod ml {

//! # Machine Learning

//!

//! The Machine Learning Library (MLL) is a set of classes and functions for statistical

//! classification, regression, and clustering of data.

//!

//! Most of the classification and regression algorithms are implemented as C++ classes. As the

//! algorithms have different sets of features (like an ability to handle missing measurements or

//! categorical input variables), there is only a little common ground between the classes. This common

//! ground is defined by the class cv::ml::StatModel that all the other ML classes are derived from.

//!

//! See detailed overview here: [ml_intro].

use crate::{mod_prelude::*, core, sys, types};

pub mod prelude {

pub use { super::ParamGridTraitConst, super::ParamGridTrait, super::TrainDataTraitConst, super::TrainDataTrait, super::StatModelTraitConst, super::StatModelTrait, super::NormalBayesClassifierTraitConst, super::NormalBayesClassifierTrait, super::KNearestTraitConst, super::KNearestTrait, super::SVM_KernelTraitConst, super::SVM_KernelTrait, super::SVMTraitConst, super::SVMTrait, super::EMTraitConst, super::EMTrait, super::DTrees_NodeTraitConst, super::DTrees_NodeTrait, super::DTrees_SplitTraitConst, super::DTrees_SplitTrait, super::DTreesTraitConst, super::DTreesTrait, super::RTreesTraitConst, super::RTreesTrait, super::BoostTraitConst, super::BoostTrait, super::ANN_MLPTraitConst, super::ANN_MLPTrait, super::LogisticRegressionTraitConst, super::LogisticRegressionTrait, super::SVMSGDTraitConst, super::SVMSGDTrait };

}

/// The simulated annealing algorithm. See [Kirkpatrick83](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Kirkpatrick83) for details.

pub const ANN_MLP_ANNEAL: i32 = 2;

/// The back-propagation algorithm.

pub const ANN_MLP_BACKPROP: i32 = 0;

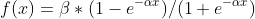

/// Gaussian function: `f(x) = β * e^(-α * x * x)`

pub const ANN_MLP_GAUSSIAN: i32 = 2;

/// Identity function: `f(x) = x`

pub const ANN_MLP_IDENTITY: i32 = 0;

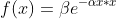

/// Leaky ReLU function: `f(x) = x` for `x > 0` and `f(x) = α * x` for `x <= 0`

pub const ANN_MLP_LEAKYRELU: i32 = 4;

/// Do not normalize the input vectors. If this flag is not set, the training algorithm

/// normalizes each input feature independently, shifting its mean value to 0 and making the

/// standard deviation equal to 1. If the network is expected to be updated frequently, the new

/// training data may differ substantially from the original data. In that case, you should take

/// care of proper normalization yourself.

pub const ANN_MLP_NO_INPUT_SCALE: i32 = 2;

/// Do not normalize the output vectors. If this flag is not set, the training algorithm

/// normalizes each output feature independently, transforming it to a certain range that

/// depends on the activation function used.

pub const ANN_MLP_NO_OUTPUT_SCALE: i32 = 4;

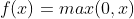

/// ReLU function: `f(x) = max(0, x)`

pub const ANN_MLP_RELU: i32 = 3;

/// The RPROP algorithm. See [RPROP93](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_RPROP93) for details.

pub const ANN_MLP_RPROP: i32 = 1;

/// Symmetrical sigmoid: `f(x) = β * (1 - e^(-α x)) / (1 + e^(-α x))`

///

/// Note:

/// If you are using the default sigmoid activation function with the default parameter values

/// fparam1=0 and fparam2=0 then the function used is y = 1.7159\*tanh(2/3 \* x), so the output

/// will range from [-1.7159, 1.7159], instead of [0,1].

pub const ANN_MLP_SIGMOID_SYM: i32 = 1;

/// Update the network weights, rather than compute them from scratch. In the latter case

/// the weights are initialized using the Nguyen-Widrow algorithm.

pub const ANN_MLP_UPDATE_WEIGHTS: i32 = 1;

/// Discrete AdaBoost.

pub const Boost_DISCRETE: i32 = 0;

/// Gentle AdaBoost. It puts less weight on outlier data points and for that

/// reason is often good with regression data.

pub const Boost_GENTLE: i32 = 3;

/// LogitBoost. It can produce good regression fits.

pub const Boost_LOGIT: i32 = 2;

/// Real AdaBoost. It is a technique that utilizes confidence-rated predictions

/// and works well with categorical data.

pub const Boost_REAL: i32 = 1;

/// each training sample occupies a column of samples

pub const COL_SAMPLE: i32 = 1;

pub const DTrees_PREDICT_AUTO: i32 = 0;

pub const DTrees_PREDICT_MASK: i32 = 768;

pub const DTrees_PREDICT_MAX_VOTE: i32 = 512;

pub const DTrees_PREDICT_SUM: i32 = 256;

/// Default covariance matrix type. It has the same value as `EM_COV_MAT_DIAGONAL`, so the

/// description of the diagonal matrix type applies.

pub const EM_COV_MAT_DEFAULT: i32 = 1;

/// A diagonal matrix with positive diagonal elements. The number of

/// free parameters is d for each matrix. This is the most commonly used option, yielding good

/// estimation results.

pub const EM_COV_MAT_DIAGONAL: i32 = 1;

/// A symmetric positive-definite matrix. The number of free

/// parameters in each matrix is about `d^2/2`. It is not recommended to use this option unless

/// there is a fairly accurate initial estimate of the parameters and/or a huge number of

/// training samples.

pub const EM_COV_MAT_GENERIC: i32 = 2;

/// A scaled identity matrix `μ_k * I`. There is only one

/// parameter `μ_k` to be estimated for each matrix. The option may be used in special cases,

/// when the constraint is relevant, or as a first step in the optimization (for example, when

/// the data is preprocessed with PCA). The results of such preliminary estimation may be

/// passed again to the optimization procedure, this time with

/// covMatType=EM::COV_MAT_DIAGONAL.

pub const EM_COV_MAT_SPHERICAL: i32 = 0;

pub const EM_DEFAULT_MAX_ITERS: i32 = 100;

pub const EM_DEFAULT_NCLUSTERS: i32 = 5;

pub const EM_START_AUTO_STEP: i32 = 0;

pub const EM_START_E_STEP: i32 = 1;

pub const EM_START_M_STEP: i32 = 2;

pub const KNearest_BRUTE_FORCE: i32 = 1;

pub const KNearest_KDTREE: i32 = 2;

pub const LogisticRegression_BATCH: i32 = 0;

/// Set MiniBatchSize to a positive integer when using this method.

pub const LogisticRegression_MINI_BATCH: i32 = 1;

/// Regularization disabled

pub const LogisticRegression_REG_DISABLE: i32 = -1;

/// L1 norm

pub const LogisticRegression_REG_L1: i32 = 0;

/// L2 norm

pub const LogisticRegression_REG_L2: i32 = 1;

/// each training sample is a row of samples

pub const ROW_SAMPLE: i32 = 0;

/// Average Stochastic Gradient Descent

pub const SVMSGD_ASGD: i32 = 1;

/// More accurate for the case of linearly separable sets.

pub const SVMSGD_HARD_MARGIN: i32 = 1;

/// Stochastic Gradient Descent

pub const SVMSGD_SGD: i32 = 0;

/// General case: suits non-linearly separable sets and allows outliers.

pub const SVMSGD_SOFT_MARGIN: i32 = 0;

pub const SVM_C: i32 = 0;

/// Exponential Chi2 kernel, similar to the RBF kernel:

/// `K(x_i, x_j) = e^(-γ χ^2(x_i, x_j))`, `χ^2(x_i, x_j) = (x_i - x_j)^2 / (x_i + x_j)`, `γ > 0`.

pub const SVM_CHI2: i32 = 4;

pub const SVM_COEF: i32 = 4;

/// Returned by SVM::getKernelType when a custom kernel has been set

pub const SVM_CUSTOM: i32 = -1;

/// C-Support Vector Classification. n-class classification (n ≥ 2), allows

/// imperfect separation of classes with penalty multiplier C for outliers.

pub const SVM_C_SVC: i32 = 100;

pub const SVM_DEGREE: i32 = 5;

/// ε-Support Vector Regression. The distance between feature vectors

/// from the training set and the fitting hyper-plane must be less than p. For outliers the

/// penalty multiplier C is used.

pub const SVM_EPS_SVR: i32 = 103;

pub const SVM_GAMMA: i32 = 1;

/// Histogram intersection kernel. A fast kernel. `K(x_i, x_j) = min(x_i, x_j)`.

pub const SVM_INTER: i32 = 5;

/// Linear kernel. No mapping is done, linear discrimination (or regression) is

/// done in the original feature space. It is the fastest option. `K(x_i, x_j) = x_i^T x_j`.

pub const SVM_LINEAR: i32 = 0;

pub const SVM_NU: i32 = 3;

/// ν-Support Vector Classification. n-class classification with possible

/// imperfect separation. Parameter ν (in the range 0..1, the larger the value, the smoother

/// the decision boundary) is used instead of C.

pub const SVM_NU_SVC: i32 = 101;

/// ν-Support Vector Regression. ν is used instead of p.

/// See [LibSVM](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_LibSVM) for details.

pub const SVM_NU_SVR: i32 = 104;

/// Distribution Estimation (One-class SVM). All the training data are from

/// the same class; SVM builds a boundary that separates the class from the rest of the feature

/// space.

pub const SVM_ONE_CLASS: i32 = 102;

pub const SVM_P: i32 = 2;

/// Polynomial kernel:

/// `K(x_i, x_j) = (γ x_i^T x_j + coef0)^degree`, `γ > 0`.

pub const SVM_POLY: i32 = 1;

/// Radial basis function (RBF), a good choice in most cases.

/// `K(x_i, x_j) = e^(-γ ||x_i - x_j||^2)`, `γ > 0`.

pub const SVM_RBF: i32 = 2;

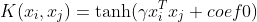

/// Sigmoid kernel: `K(x_i, x_j) = tanh(γ x_i^T x_j + coef0)`.

pub const SVM_SIGMOID: i32 = 3;

pub const StatModel_COMPRESSED_INPUT: i32 = 2;

pub const StatModel_PREPROCESSED_INPUT: i32 = 4;

/// makes the method return the raw results (the sum), not the class label

pub const StatModel_RAW_OUTPUT: i32 = 1;

pub const StatModel_UPDATE_MODEL: i32 = 1;

pub const TEST_ERROR: i32 = 0;

pub const TRAIN_ERROR: i32 = 1;

/// categorical variables

pub const VAR_CATEGORICAL: i32 = 1;

/// same as VAR_ORDERED

pub const VAR_NUMERICAL: i32 = 0;

/// ordered variables

pub const VAR_ORDERED: i32 = 0;

/// possible activation functions
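/**
A minimal sketch of the activation functions named by this enum, written in plain Rust
so the shapes are easy to compare. The formulas follow the OpenCV documentation as far
as it is known here; the function names and the explicit `alpha`/`beta` arguments are
assumptions for illustration and are not part of these bindings. `default_sigmoid`
reproduces the `y = 1.7159 * tanh(2/3 * x)` note attached to `SIGMOID_SYM`.

```rust
/// Identity: f(x) = x.
fn identity(x: f64) -> f64 {
    x
}

/// Symmetrical sigmoid: f(x) = beta * (1 - e^(-alpha x)) / (1 + e^(-alpha x)).
fn sigmoid_sym(x: f64, alpha: f64, beta: f64) -> f64 {
    let e = (-alpha * x).exp();
    beta * (1.0 - e) / (1.0 + e)
}

/// The function the documentation says is used with the default parameters.
fn default_sigmoid(x: f64) -> f64 {
    1.7159 * (2.0 / 3.0 * x).tanh()
}

/// Gaussian: f(x) = beta * e^(-alpha * x * x).
fn gaussian(x: f64, alpha: f64, beta: f64) -> f64 {
    beta * (-alpha * x * x).exp()
}

/// ReLU: f(x) = max(0, x).
fn relu(x: f64) -> f64 {
    x.max(0.0)
}

/// Leaky ReLU: x for x > 0, alpha * x otherwise.
fn leaky_relu(x: f64, alpha: f64) -> f64 {
    if x > 0.0 { x } else { alpha * x }
}
```

Note that the symmetrical sigmoid equals `beta * tanh(alpha * x / 2)`, which is why its
output is bounded by `±beta` rather than lying in `[0, 1]`.
*/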

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ANN_MLP_ActivationFunctions {

/// Identity function: `f(x) = x`

IDENTITY = 0,

/// Symmetrical sigmoid: `f(x) = β * (1 - e^(-α x)) / (1 + e^(-α x))`

///

/// Note:

/// If you are using the default sigmoid activation function with the default parameter values

/// fparam1=0 and fparam2=0 then the function used is y = 1.7159\*tanh(2/3 \* x), so the output

/// will range from [-1.7159, 1.7159], instead of [0,1].

SIGMOID_SYM = 1,

/// Gaussian function: `f(x) = β * e^(-α * x * x)`

GAUSSIAN = 2,

/// ReLU function: `f(x) = max(0, x)`

RELU = 3,

/// Leaky ReLU function: `f(x) = x` for `x > 0` and `f(x) = α * x` for `x <= 0`

LEAKYRELU = 4,

}

opencv_type_enum! { crate::ml::ANN_MLP_ActivationFunctions }

/// Train options
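/**
The input scaling that `NO_INPUT_SCALE` switches off can be pictured with the sketch
below. It standardizes one feature column to mean 0 and standard deviation 1, as the
flag description states. The helper name `standardize`, the use of the population
standard deviation, and the handling of empty or constant columns are assumptions made
for this illustration; the library's internal procedure may differ.

```rust
/// Standardizes one feature column in place and returns the (mean, std) it removed,
/// so that later data can be scaled with the same statistics.
fn standardize(column: &mut [f64]) -> (f64, f64) {
    if column.is_empty() {
        return (0.0, 0.0);
    }
    let n = column.len() as f64;
    let mean = column.iter().sum::<f64>() / n;
    let variance = column.iter().map(|v| (v - mean).powi(2)).sum::<f64>() / n;
    let std = variance.sqrt();
    for v in column.iter_mut() {
        // A constant column has std == 0; only center it in that case.
        *v = if std > 0.0 { (*v - mean) / std } else { *v - mean };
    }
    (mean, std)
}
```

When the flag is set and the network is updated with new data, a caller would typically
reuse the returned statistics rather than recompute them per batch.
*/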

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ANN_MLP_TrainFlags {

/// Update the network weights, rather than compute them from scratch. In the latter case

/// the weights are initialized using the Nguyen-Widrow algorithm.

UPDATE_WEIGHTS = 1,

/// Do not normalize the input vectors. If this flag is not set, the training algorithm

/// normalizes each input feature independently, shifting its mean value to 0 and making the

/// standard deviation equal to 1. If the network is expected to be updated frequently, the new

/// training data may differ substantially from the original data. In that case, you should take

/// care of proper normalization yourself.

NO_INPUT_SCALE = 2,

/// Do not normalize the output vectors. If this flag is not set, the training algorithm

/// normalizes each output feature independently, transforming it to a certain range that

/// depends on the activation function used.

NO_OUTPUT_SCALE = 4,

}

opencv_type_enum! { crate::ml::ANN_MLP_TrainFlags }

/// Available training methods

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ANN_MLP_TrainingMethods {

/// The back-propagation algorithm.

BACKPROP = 0,

/// The RPROP algorithm. See [RPROP93](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_RPROP93) for details.

RPROP = 1,

/// The simulated annealing algorithm. See [Kirkpatrick83](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Kirkpatrick83) for details.

ANNEAL = 2,

}

opencv_type_enum! { crate::ml::ANN_MLP_TrainingMethods }

/// Boosting type.

/// Gentle AdaBoost and Real AdaBoost are often the preferable choices.

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum Boost_Types {

/// Discrete AdaBoost.

DISCRETE = 0,

/// Real AdaBoost. It is a technique that utilizes confidence-rated predictions

/// and works well with categorical data.

REAL = 1,

/// LogitBoost. It can produce good regression fits.

LOGIT = 2,

/// Gentle AdaBoost. It puts less weight on outlier data points and for that

/// reason is often good with regression data.

GENTLE = 3,

}

opencv_type_enum! { crate::ml::Boost_Types }

/// Predict options
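/**
The values of these flags suggest a bit-field layout: `PREDICT_MASK` (768) is exactly
`PREDICT_SUM | PREDICT_MAX_VOTE` (256 | 512). The sketch below shows how such a mask can
isolate the prediction mode from a combined flags value. The helper `predict_mode` and
the idea of combining these bits with `StatModel` flags such as `RAW_OUTPUT` are
assumptions for illustration; only the numeric values come from this module.

```rust
const PREDICT_AUTO: i32 = 0;
const PREDICT_SUM: i32 = 256;
const PREDICT_MAX_VOTE: i32 = 512;
const PREDICT_MASK: i32 = 768;
/// Value of `StatModel_RAW_OUTPUT`, used here only as an unrelated low bit.
const RAW_OUTPUT: i32 = 1;

/// Keeps only the prediction-mode bits of a combined flags value.
fn predict_mode(flags: i32) -> i32 {
    flags & PREDICT_MASK
}
```
*/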

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum DTrees_Flags {

PREDICT_AUTO = 0,

PREDICT_SUM = 256,

PREDICT_MAX_VOTE = 512,

PREDICT_MASK = 768,

}

opencv_type_enum! { crate::ml::DTrees_Flags }

/// Type of covariance matrices

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]
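/**
To make the trade-off between the covariance matrix types concrete, the sketch below
counts the free parameters per matrix for a feature dimension `d`. The enum `CovMat` and
the function `free_parameters` are hypothetical names introduced for this example. For
the generic case it uses the exact count for a symmetric matrix, `d * (d + 1) / 2`,
which is what an estimate of "about d^2/2" rounds from.

```rust
enum CovMat {
    Spherical,
    Diagonal,
    Generic,
}

/// Number of free parameters in one covariance matrix of dimension `d`.
fn free_parameters(kind: CovMat, d: usize) -> usize {
    match kind {
        // One scale factor for the identity matrix.
        CovMat::Spherical => 1,
        // One variance per feature.
        CovMat::Diagonal => d,
        // Upper triangle including the diagonal of a symmetric matrix.
        CovMat::Generic => d * (d + 1) / 2,
    }
}
```

For `d = 10` this gives 1, 10 and 55 parameters respectively, which illustrates why the
generic type needs far more training samples to be estimated reliably.
*/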

pub enum EM_Types {

/// A scaled identity matrix `μ_k * I`. There is only one

/// parameter `μ_k` to be estimated for each matrix. The option may be used in special cases,

/// when the constraint is relevant, or as a first step in the optimization (for example, when

/// the data is preprocessed with PCA). The results of such preliminary estimation may be

/// passed again to the optimization procedure, this time with

/// covMatType=EM::COV_MAT_DIAGONAL.

COV_MAT_SPHERICAL = 0,

/// A diagonal matrix with positive diagonal elements. The number of

/// free parameters is d for each matrix. This is the most commonly used option, yielding good

/// estimation results.

COV_MAT_DIAGONAL = 1,

/// A symmetric positive-definite matrix. The number of free

/// parameters in each matrix is about `d^2/2`. It is not recommended to use this option unless

/// there is a fairly accurate initial estimate of the parameters and/or a huge number of

/// training samples.

COV_MAT_GENERIC = 2,

// Default covariance matrix type; same value as COV_MAT_DIAGONAL.

// Duplicate, use COV_MAT_DIAGONAL instead

// COV_MAT_DEFAULT = 1,

}

opencv_type_enum! { crate::ml::EM_Types }

/// Error types

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ErrorTypes {

TEST_ERROR = 0,

TRAIN_ERROR = 1,

}

opencv_type_enum! { crate::ml::ErrorTypes }

/// Implementations of KNearest algorithm

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum KNearest_Types {

BRUTE_FORCE = 1,

KDTREE = 2,

}

opencv_type_enum! { crate::ml::KNearest_Types }

/// Training methods
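/**
As a rough picture of what `MINI_BATCH` implies, the sketch below splits `n_samples`
training samples into consecutive index ranges of at most `batch_size` elements. The
function `mini_batches` is a hypothetical helper, and processing the samples in order
(without shuffling) is an assumption of this example, not a statement about the
library's behavior.

```rust
use std::ops::Range;

/// Splits `0..n_samples` into consecutive ranges of at most `batch_size` indices.
/// `batch_size` must be positive, mirroring the requirement on MiniBatchSize.
fn mini_batches(n_samples: usize, batch_size: usize) -> Vec<Range<usize>> {
    assert!(batch_size > 0, "batch_size must be a positive integer");
    (0..n_samples)
        .step_by(batch_size)
        .map(|start| start..(start + batch_size).min(n_samples))
        .collect()
}
```

With `BATCH`, by contrast, the whole range `0..n_samples` would be used in every
iteration.
*/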

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum LogisticRegression_Methods {

BATCH = 0,

/// Set MiniBatchSize to a positive integer when using this method.

MINI_BATCH = 1,

}

opencv_type_enum! { crate::ml::LogisticRegression_Methods }

/// Regularization kinds
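/**
The difference between the regularization kinds can be sketched as a penalty term added
to the cost. The enum `RegKind`, the function `penalty`, and the exact scaling by
`lambda` are assumptions for illustration; the library may scale or average the term
differently.

```rust
enum RegKind {
    Disable,
    L1,
    L2,
}

/// Penalty added to the cost for a given weight vector.
fn penalty(kind: RegKind, weights: &[f64], lambda: f64) -> f64 {
    match kind {
        RegKind::Disable => 0.0,
        // Sum of absolute values; tends to drive weights to exactly zero.
        RegKind::L1 => lambda * weights.iter().map(|w| w.abs()).sum::<f64>(),
        // Sum of squares; shrinks large weights more strongly.
        RegKind::L2 => lambda * weights.iter().map(|w| w * w).sum::<f64>(),
    }
}
```
*/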

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum LogisticRegression_RegKinds {

/// Regularization disabled

REG_DISABLE = -1,

/// L1 norm

REG_L1 = 0,

/// L2 norm

REG_L2 = 1,

}

opencv_type_enum! { crate::ml::LogisticRegression_RegKinds }

/// Margin type.

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SVMSGD_MarginType {

/// General case: suits non-linearly separable sets and allows outliers.

SOFT_MARGIN = 0,

/// More accurate for the case of linearly separable sets.

HARD_MARGIN = 1,

}

opencv_type_enum! { crate::ml::SVMSGD_MarginType }

/// SVMSGD type.

/// ASGD is often the preferable choice.

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SVMSGD_SvmsgdType {

/// Stochastic Gradient Descent

SGD = 0,

/// Average Stochastic Gradient Descent

ASGD = 1,

}

opencv_type_enum! { crate::ml::SVMSGD_SvmsgdType }

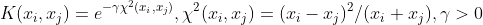

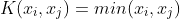

/// SVM kernel type

///

/// A comparison of different kernels on the following 2D test case with four classes. Four

/// SVM::C_SVC SVMs have been trained (one against rest) with auto_train. Evaluation on three

/// different kernels (SVM::CHI2, SVM::INTER, SVM::RBF). The color depicts the class with max score.

/// Bright means max-score \> 0, dark means max-score \< 0.
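/**
For readers comparing the kernels, the sketch below evaluates each built-in kernel on a
pair of feature vectors. It uses the standard textbook definitions, which are assumed
here to match the library; the function names and the component-wise sums used for the
Chi2 and intersection kernels are choices of this example.

```rust
fn dot(a: &[f64], b: &[f64]) -> f64 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// K = a . b
fn linear(a: &[f64], b: &[f64]) -> f64 {
    dot(a, b)
}

/// K = (gamma * a . b + coef0)^degree
fn poly(a: &[f64], b: &[f64], gamma: f64, coef0: f64, degree: i32) -> f64 {
    (gamma * dot(a, b) + coef0).powi(degree)
}

/// K = exp(-gamma * ||a - b||^2)
fn rbf(a: &[f64], b: &[f64], gamma: f64) -> f64 {
    let sq_dist: f64 = a.iter().zip(b).map(|(x, y)| (x - y).powi(2)).sum();
    (-gamma * sq_dist).exp()
}

/// K = tanh(gamma * a . b + coef0)
fn sigmoid(a: &[f64], b: &[f64], gamma: f64, coef0: f64) -> f64 {
    (gamma * dot(a, b) + coef0).tanh()
}

/// K = exp(-gamma * sum((a_k - b_k)^2 / (a_k + b_k))), skipping zero denominators.
fn chi2(a: &[f64], b: &[f64], gamma: f64) -> f64 {
    let dist: f64 = a
        .iter()
        .zip(b)
        .map(|(x, y)| {
            let s = x + y;
            if s != 0.0 { (x - y).powi(2) / s } else { 0.0 }
        })
        .sum();
    (-gamma * dist).exp()
}

/// K = sum(min(a_k, b_k))
fn inter(a: &[f64], b: &[f64]) -> f64 {
    a.iter().zip(b).map(|(x, y)| x.min(*y)).sum()
}
```

The RBF and Chi2 kernels both return 1 for identical inputs and decay towards 0 as the
inputs move apart, which is the sense in which they are similar.
*/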

///

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SVM_KernelTypes {

/// Returned by SVM::getKernelType when a custom kernel has been set

CUSTOM = -1,

/// Linear kernel. No mapping is done, linear discrimination (or regression) is

/// done in the original feature space. It is the fastest option. `K(x_i, x_j) = x_i^T x_j`.

LINEAR = 0,

/// Polynomial kernel:

/// `K(x_i, x_j) = (γ x_i^T x_j + coef0)^degree`, `γ > 0`.

POLY = 1,

/// Radial basis function (RBF), a good choice in most cases.

/// `K(x_i, x_j) = e^(-γ ||x_i - x_j||^2)`, `γ > 0`.

RBF = 2,

/// Sigmoid kernel: `K(x_i, x_j) = tanh(γ x_i^T x_j + coef0)`.

SIGMOID = 3,

/// Exponential Chi2 kernel, similar to the RBF kernel:

/// `K(x_i, x_j) = e^(-γ χ^2(x_i, x_j))`, `χ^2(x_i, x_j) = (x_i - x_j)^2 / (x_i + x_j)`, `γ > 0`.

CHI2 = 4,

/// Histogram intersection kernel. A fast kernel. `K(x_i, x_j) = min(x_i, x_j)`.

INTER = 5,

}

opencv_type_enum! { crate::ml::SVM_KernelTypes }

/// SVM params type

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SVM_ParamTypes {

C = 0,

GAMMA = 1,

P = 2,

NU = 3,

COEF = 4,

DEGREE = 5,

}

opencv_type_enum! { crate::ml::SVM_ParamTypes }

/// SVM type

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SVM_Types {

/// C-Support Vector Classification. n-class classification (n ≥ 2), allows

/// imperfect separation of classes with penalty multiplier C for outliers.

C_SVC = 100,

/// ν-Support Vector Classification. n-class classification with possible

/// imperfect separation. Parameter ν (in the range 0..1, the larger the value, the smoother

/// the decision boundary) is used instead of C.

NU_SVC = 101,

/// Distribution Estimation (One-class SVM). All the training data are from

/// the same class; SVM builds a boundary that separates the class from the rest of the feature

/// space.

ONE_CLASS = 102,

/// ε-Support Vector Regression. The distance between feature vectors

/// from the training set and the fitting hyper-plane must be less than p. For outliers the

/// penalty multiplier C is used.

EPS_SVR = 103,

/// ν-Support Vector Regression. ν is used instead of p.

/// See [LibSVM](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_LibSVM) for details.

NU_SVR = 104,

}

opencv_type_enum! { crate::ml::SVM_Types }

/// Sample types
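/**
The two layouts differ only in which matrix axis enumerates samples. The sketch below
reads one feature of one sample from a row-major buffer under either layout. The enum
`Layout` and the function `feature_at` are hypothetical and operate on a plain slice,
not on `core::Mat`.

```rust
#[derive(Clone, Copy)]
enum Layout {
    RowSample,
    ColSample,
}

/// Reads feature `feature` of sample `sample` from a row-major `rows x cols` buffer.
fn feature_at(
    data: &[f32],
    rows: usize,
    cols: usize,
    layout: Layout,
    sample: usize,
    feature: usize,
) -> f32 {
    let (r, c) = match layout {
        // Each row is one sample, each column one feature.
        Layout::RowSample => (sample, feature),
        // Each column is one sample, each row one feature.
        Layout::ColSample => (feature, sample),
    };
    assert!(r < rows && c < cols, "index out of bounds for this layout");
    data[r * cols + c]
}
```

The same 2 x 3 buffer therefore holds two samples with three features under
`RowSample`, and three samples with two features under `ColSample`.
*/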

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SampleTypes {

/// each training sample is a row of samples

ROW_SAMPLE = 0,

/// each training sample occupies a column of samples

COL_SAMPLE = 1,

}

opencv_type_enum! { crate::ml::SampleTypes }

/// Predict options

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum StatModel_Flags {

UPDATE_MODEL = 1,

// makes the method return the raw results (the sum), not the class label

// Duplicate, use UPDATE_MODEL instead

// RAW_OUTPUT = 1,

COMPRESSED_INPUT = 2,

PREPROCESSED_INPUT = 4,

}

opencv_type_enum! { crate::ml::StatModel_Flags }

/// Variable types

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum VariableTypes {

/// same as VAR_ORDERED

VAR_NUMERICAL = 0,

// ordered variables

// Duplicate, use VAR_NUMERICAL instead

// VAR_ORDERED = 0,

/// categorical variables

VAR_CATEGORICAL = 1,

}

opencv_type_enum! { crate::ml::VariableTypes }

pub type ANN_MLP_ANNEAL = crate::ml::ANN_MLP;

/// Creates test set

#[inline]

pub fn create_concentric_spheres_test_set(nsamples: i32, nfeatures: i32, nclasses: i32, samples: &mut impl core::ToOutputArray, responses: &mut impl core::ToOutputArray) -> Result<()> {

output_array_arg!(samples);

output_array_arg!(responses);

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_createConcentricSpheresTestSet_int_int_int_const__OutputArrayR_const__OutputArrayR(nsamples, nfeatures, nclasses, samples.as_raw__OutputArray(), responses.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Generates samples from a multivariate normal distribution

///

/// ## Parameters

/// * mean: mean row vector

/// * cov: symmetric covariance matrix

/// * nsamples: number of samples to generate

/// * samples: output array that receives the generated samples

#[inline]
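/**
One common way to draw from a multivariate normal distribution is to factor the
covariance matrix as `cov = L * Lᵀ` (Cholesky) and map a standard-normal vector `z` to
`mean + L * z`. The sketch below shows that transformation on plain slices. It is an
illustration of the general technique, not a description of how this function is
implemented; `cholesky` and `transform` are hypothetical helpers, and the caller is
assumed to supply `z` from a random number generator of their choice.

```rust
/// Lower-triangular Cholesky factor of a symmetric positive-definite `d x d` matrix
/// stored row-major. Returns `None` if the matrix is not positive definite.
fn cholesky(cov: &[f64], d: usize) -> Option<Vec<f64>> {
    let mut l = vec![0.0; d * d];
    for i in 0..d {
        for j in 0..=i {
            let mut sum = cov[i * d + j];
            for k in 0..j {
                sum -= l[i * d + k] * l[j * d + k];
            }
            if i == j {
                if sum <= 0.0 {
                    return None;
                }
                l[i * d + j] = sum.sqrt();
            } else {
                l[i * d + j] = sum / l[j * d + j];
            }
        }
    }
    Some(l)
}

/// Maps a standard-normal draw `z` to a sample with the given mean and covariance.
fn transform(mean: &[f64], l: &[f64], z: &[f64]) -> Vec<f64> {
    let d = mean.len();
    (0..d)
        .map(|i| mean[i] + (0..=i).map(|k| l[i * d + k] * z[k]).sum::<f64>())
        .collect()
}
```

The `None` case shows why the covariance argument needs to be symmetric and positive
definite for this approach to work.
*/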

pub fn rand_mv_normal(mean: &impl core::ToInputArray, cov: &impl core::ToInputArray, nsamples: i32, samples: &mut impl core::ToOutputArray) -> Result<()> {

input_array_arg!(mean);

input_array_arg!(cov);

output_array_arg!(samples);

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_randMVNormal_const__InputArrayR_const__InputArrayR_int_const__OutputArrayR(mean.as_raw__InputArray(), cov.as_raw__InputArray(), nsamples, samples.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Constant methods for [crate::ml::ANN_MLP]

pub trait ANN_MLPTraitConst: crate::ml::StatModelTraitConst {

fn as_raw_ANN_MLP(&self) -> *const c_void;

/// Returns current training method

#[inline]

fn get_train_method(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getTrainMethod_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Integer vector specifying the number of neurons in each layer including the input and output layers.

/// The very first element specifies the number of elements in the input layer.

/// The last element specifies the number of elements in the output layer.

/// ## See also

/// setLayerSizes

#[inline]

fn get_layer_sizes(&self) -> Result<core::Mat> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getLayerSizes_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Mat::opencv_from_extern(ret) };

Ok(ret)

}

/// Termination criteria of the training algorithm.

/// You can specify the maximum number of iterations (maxCount) and/or how much the error could

/// change between the iterations to make the algorithm continue (epsilon). Default value is

/// TermCriteria(TermCriteria::MAX_ITER + TermCriteria::EPS, 1000, 0.01).
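/**
The interplay of the two criteria can be sketched as follows: training stops as soon as
either the iteration budget is used up or the error changes by less than epsilon. The
helper names are hypothetical, and comparing the absolute change of the error against
epsilon is an assumption of this sketch; the library may measure the change differently.

```rust
/// True when training should stop after `iteration` completed iterations.
fn should_stop(
    iteration: usize,
    prev_error: f64,
    error: f64,
    max_count: usize,
    epsilon: f64,
) -> bool {
    iteration >= max_count || (prev_error - error).abs() < epsilon
}

/// Replays a recorded error curve and returns how many iterations would run.
/// `errors[0]` is the error before the first iteration.
fn iterations_until_stop(errors: &[f64], max_count: usize, epsilon: f64) -> usize {
    let mut done = 0;
    for pair in errors.windows(2) {
        done += 1;
        if should_stop(done, pair[0], pair[1], max_count, epsilon) {
            break;
        }
    }
    done
}
```

With the documented default of 1000 iterations and epsilon 0.01, an error curve that
flattens early ends training long before the iteration limit is reached.
*/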

/// ## See also

/// setTermCriteria

#[inline]

fn get_term_criteria(&self) -> Result<core::TermCriteria> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getTermCriteria_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// BPROP: Strength of the weight gradient term.

/// The recommended value is about 0.1. Default value is 0.1.

/// ## See also

/// setBackpropWeightScale

#[inline]

fn get_backprop_weight_scale(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getBackpropWeightScale_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// BPROP: Strength of the momentum term (the difference between weights on the 2 previous iterations).

/// This parameter provides some inertia to smooth the random fluctuations of the weights. It can

/// vary from 0 (the feature is disabled) to 1 and beyond. The value 0.1 or so is good enough.

/// Default value is 0.1.
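/**
The roles of the weight scale and the momentum scale can be illustrated with the
textbook momentum update, in which the new weight change is a gradient step plus a
fraction of the previous change. This is a sketch of that general rule under the
assumption that it resembles what the library does; `backprop_step` is a hypothetical
helper and the sign convention (descending the gradient) is chosen for this example.

```rust
/// One momentum update for a single weight.
/// Returns the updated weight and the change that was applied.
fn backprop_step(
    weight: f64,
    gradient: f64,
    prev_delta: f64,
    weight_scale: f64,
    momentum_scale: f64,
) -> (f64, f64) {
    let delta = momentum_scale * prev_delta - weight_scale * gradient;
    (weight + delta, delta)
}
```

Setting `momentum_scale` to 0 disables the inertia term and leaves a plain gradient
step scaled by `weight_scale`.
*/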

/// ## See also

/// setBackpropMomentumScale

#[inline]

fn get_backprop_momentum_scale(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getBackpropMomentumScale_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Initial value `Δ_0` of update-values `Δ_ij`.

/// Default value is 0.1.

/// ## See also

/// setRpropDW0

#[inline]

fn get_rprop_dw0(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getRpropDW0_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Increase factor `η+`.

/// It must be \>1. Default value is 1.2.

/// ## See also

/// setRpropDWPlus

#[inline]

fn get_rprop_dw_plus(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getRpropDWPlus_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Decrease factor `η-`.

/// It must be \<1. Default value is 0.5.

/// ## See also

/// setRpropDWMinus

#[inline]

fn get_rprop_dw_minus(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getRpropDWMinus_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Update-values lower limit `Δ_min`.

/// It must be positive. Default value is FLT_EPSILON.

/// ## See also

/// setRpropDWMin

#[inline]

fn get_rprop_dw_min(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getRpropDWMin_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Update-values upper limit `Δ_max`.

/// It must be \>1. Default value is 50.
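/**
How the RPROP parameters fit together can be sketched with the usual step-size
adaptation rule: the update value grows by the increase factor while the gradient keeps
its sign, shrinks by the decrease factor when the sign flips, and is clamped to the
lower and upper limits. The struct, its constructor and `adapt_step` are hypothetical
names; the rule is the commonly published RPROP scheme and is assumed, not confirmed,
to match the library's internals. The default values are the ones documented on the
getters.

```rust
struct RpropParams {
    dw_plus: f64,
    dw_minus: f64,
    dw_min: f64,
    dw_max: f64,
}

impl RpropParams {
    /// Defaults as documented: 1.2, 0.5, FLT_EPSILON, 50.
    fn documented_defaults() -> Self {
        RpropParams {
            dw_plus: 1.2,
            dw_minus: 0.5,
            dw_min: f32::EPSILON as f64,
            dw_max: 50.0,
        }
    }
}

/// Adapts the update value of one weight from the current and previous gradient.
fn adapt_step(step: f64, gradient: f64, prev_gradient: f64, p: &RpropParams) -> f64 {
    let agreement = gradient * prev_gradient;
    if agreement > 0.0 {
        (step * p.dw_plus).min(p.dw_max)
    } else if agreement < 0.0 {
        (step * p.dw_minus).max(p.dw_min)
    } else {
        step
    }
}
```

Starting from the documented initial update value of 0.1, one step with an unchanged
gradient sign would yield 0.12, and one with a sign flip 0.05.
*/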

/// ## See also

/// setRpropDWMax

#[inline]

fn get_rprop_dw_max(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getRpropDWMax_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update initial temperature.

/// It must be \>=0. Default value is 10.

/// ## See also

/// setAnnealInitialT

#[inline]

fn get_anneal_initial_t(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getAnnealInitialT_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update final temperature.

/// It must be \>=0 and less than initialT. Default value is 0.1.

/// ## See also

/// setAnnealFinalT

#[inline]

fn get_anneal_final_t(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getAnnealFinalT_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update cooling ratio.

/// It must be \>0 and less than 1. Default value is 0.95.
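/**
To see how the three annealing parameters interact, the sketch below counts how many
cooling steps separate the initial from the final temperature when the temperature is
multiplied by the cooling ratio at every step. Geometric cooling is an assumption of
this example, and `cooling_steps` is a hypothetical helper. The sketch requires a
strictly positive final temperature so that the loop is guaranteed to terminate.

```rust
/// Number of multiplications by `cooling_ratio` needed to bring the temperature
/// from `initial_t` down to `final_t` or below.
fn cooling_steps(initial_t: f64, final_t: f64, cooling_ratio: f64) -> usize {
    assert!(final_t > 0.0 && final_t < initial_t);
    assert!(cooling_ratio > 0.0 && cooling_ratio < 1.0);
    let mut temperature = initial_t;
    let mut steps = 0;
    while temperature > final_t {
        temperature *= cooling_ratio;
        steps += 1;
    }
    steps
}
```

Under this assumption, the documented defaults (initial temperature 10, final
temperature 0.1, cooling ratio 0.95) correspond to 90 cooling steps.
*/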

/// ## See also

/// setAnnealCoolingRatio

#[inline]

fn get_anneal_cooling_ratio(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getAnnealCoolingRatio_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update iteration per step.

/// It must be \>0. Default value is 10.

/// ## See also

/// setAnnealItePerStep

#[inline]

fn get_anneal_ite_per_step(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getAnnealItePerStep_const(self.as_raw_ANN_MLP(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the weight matrix of the layer with index `layer_idx`.

#[inline]

fn get_weights(&self, layer_idx: i32) -> Result<core::Mat> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_getWeights_const_int(self.as_raw_ANN_MLP(), layer_idx, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Mat::opencv_from_extern(ret) };

Ok(ret)

}

}

/// Mutable methods for [crate::ml::ANN_MLP]

pub trait ANN_MLPTrait: crate::ml::ANN_MLPTraitConst + crate::ml::StatModelTrait {

fn as_raw_mut_ANN_MLP(&mut self) -> *mut c_void;

/// Sets training method and common parameters.

/// ## Parameters

/// * method: Default value is ANN_MLP::RPROP. See ANN_MLP::TrainingMethods.

/// * param1: passed to setRpropDW0 for ANN_MLP::RPROP and to setBackpropWeightScale for ANN_MLP::BACKPROP and to initialT for ANN_MLP::ANNEAL.

/// * param2: passed to setRpropDWMin for ANN_MLP::RPROP and to setBackpropMomentumScale for ANN_MLP::BACKPROP and to finalT for ANN_MLP::ANNEAL.

///

/// ## C++ default parameters

/// * param1: 0

/// * param2: 0
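/**
The routing of `param1` and `param2` described above can be summarized in a small
sketch. `TrainParams`, `Method` and `route_params` are hypothetical names used only to
mirror the parameter descriptions; the sketch stores the values verbatim and does not
model what the library does when the C++ defaults of 0 are passed.

```rust
#[derive(Debug, Default, PartialEq)]
struct TrainParams {
    rprop_dw0: f64,
    rprop_dw_min: f64,
    backprop_weight_scale: f64,
    backprop_momentum_scale: f64,
    anneal_initial_t: f64,
    anneal_final_t: f64,
}

#[derive(Clone, Copy)]
enum Method {
    Backprop,
    Rprop,
    Anneal,
}

/// Stores `param1` and `param2` in the fields that the chosen method reads.
fn route_params(method: Method, param1: f64, param2: f64, params: &mut TrainParams) {
    match method {
        Method::Rprop => {
            params.rprop_dw0 = param1;
            params.rprop_dw_min = param2;
        }
        Method::Backprop => {
            params.backprop_weight_scale = param1;
            params.backprop_momentum_scale = param2;
        }
        Method::Anneal => {
            params.anneal_initial_t = param1;
            params.anneal_final_t = param2;
        }
    }
}
```

The same pair of numbers therefore means different things depending on `method`, which
is worth keeping in mind when switching training methods.
*/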

#[inline]

fn set_train_method(&mut self, method: i32, param1: f64, param2: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setTrainMethod_int_double_double(self.as_raw_mut_ANN_MLP(), method, param1, param2, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Initialize the activation function for each neuron.

/// Currently the default and the only fully supported activation function is ANN_MLP::SIGMOID_SYM.

/// ## Parameters

/// * type: The type of activation function. See ANN_MLP::ActivationFunctions.

/// * param1: The first parameter of the activation function, `α`. Default value is 0.

/// * param2: The second parameter of the activation function, `β`. Default value is 0.

///

/// ## C++ default parameters

/// * param1: 0

/// * param2: 0

#[inline]

fn set_activation_function(&mut self, typ: i32, param1: f64, param2: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setActivationFunction_int_double_double(self.as_raw_mut_ANN_MLP(), typ, param1, param2, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Integer vector specifying the number of neurons in each layer including the input and output layers.

/// The very first element specifies the number of elements in the input layer.

/// The last element specifies the number of elements in the output layer. Default value is an empty Mat.

/// ## See also

/// getLayerSizes

#[inline]

fn set_layer_sizes(&mut self, _layer_sizes: &impl core::ToInputArray) -> Result<()> {

input_array_arg!(_layer_sizes);

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setLayerSizes_const__InputArrayR(self.as_raw_mut_ANN_MLP(), _layer_sizes.as_raw__InputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Termination criteria of the training algorithm.

/// You can specify the maximum number of iterations (maxCount) and/or how much the error could

/// change between the iterations to make the algorithm continue (epsilon). Default value is

/// TermCriteria(TermCriteria::MAX_ITER + TermCriteria::EPS, 1000, 0.01).

/// ## See also

/// setTermCriteria getTermCriteria

#[inline]

fn set_term_criteria(&mut self, val: core::TermCriteria) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setTermCriteria_TermCriteria(self.as_raw_mut_ANN_MLP(), val.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// BPROP: Strength of the weight gradient term.

/// The recommended value is about 0.1. Default value is 0.1.

/// ## See also

/// setBackpropWeightScale getBackpropWeightScale

#[inline]

fn set_backprop_weight_scale(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setBackpropWeightScale_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// BPROP: Strength of the momentum term (the difference between weights on the 2 previous iterations).

/// This parameter provides some inertia to smooth the random fluctuations of the weights. It can

/// vary from 0 (the feature is disabled) to 1 and beyond. The value 0.1 or so is good enough.

/// Default value is 0.1.

/// ## See also

/// setBackpropMomentumScale getBackpropMomentumScale

#[inline]

fn set_backprop_momentum_scale(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setBackpropMomentumScale_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Initial value `Δ_0` of update-values `Δ_ij`.

/// Default value is 0.1.

/// ## See also

/// setRpropDW0 getRpropDW0

#[inline]

fn set_rprop_dw0(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setRpropDW0_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Increase factor `η+`.

/// It must be \>1. Default value is 1.2.

/// ## See also

/// setRpropDWPlus getRpropDWPlus

#[inline]

fn set_rprop_dw_plus(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setRpropDWPlus_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Decrease factor `η-`.

/// It must be \<1. Default value is 0.5.

/// ## See also

/// setRpropDWMinus getRpropDWMinus

#[inline]

fn set_rprop_dw_minus(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setRpropDWMinus_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Update-values lower limit `Δ_min`.

/// It must be positive. Default value is FLT_EPSILON.

/// ## See also

/// setRpropDWMin getRpropDWMin

#[inline]

fn set_rprop_dw_min(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setRpropDWMin_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// RPROP: Update-values upper limit `Δ_max`.

/// It must be \>1. Default value is 50.

/// ## See also

/// setRpropDWMax getRpropDWMax
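/**
## Example

The RPROP parameters (initial update-value, increase and decrease factors, lower and upper limits) interact as in the following sketch of the textbook RPROP step-size adaptation. It assumes the classic rule that compares the signs of two successive gradients; the function and parameter names are hypothetical and the code does not reproduce OpenCV's internal implementation.

```rust
// Adapts one per-weight step size from the signs of two successive gradients.
// Assumption: classic RPROP rule; names are illustrative only.
fn rprop_step_size(
    step: f64,
    grad: f64,
    prev_grad: f64,
    dw_plus: f64,
    dw_minus: f64,
    dw_min: f64,
    dw_max: f64,
) -> f64 {
    let agreement = grad * prev_grad;
    if agreement > 0.0 {
        // Same sign: keep going in this direction with a larger step, capped at dw_max.
        (step * dw_plus).min(dw_max)
    } else if agreement < 0.0 {
        // Sign flip: a minimum was overshot, so shrink the step, floored at dw_min.
        (step * dw_minus).max(dw_min)
    } else {
        // One of the gradients is zero: leave the step unchanged.
        step
    }
}
```

The first call would normally start from the initial update-value; the upper and lower limits only matter once repeated growth or shrinkage reaches them.
*/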

#[inline]

fn set_rprop_dw_max(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setRpropDWMax_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update initial temperature.

/// It must be \>=0. Default value is 10.

/// ## See also

/// setAnnealInitialT getAnnealInitialT

#[inline]

fn set_anneal_initial_t(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setAnnealInitialT_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update final temperature.

/// It must be \>=0 and less than initialT. Default value is 0.1.

/// ## See also

/// setAnnealFinalT getAnnealFinalT

#[inline]

fn set_anneal_final_t(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setAnnealFinalT_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update cooling ratio.

/// It must be \>0 and less than 1. Default value is 0.95.

/// ## See also

/// setAnnealCoolingRatio getAnnealCoolingRatio

#[inline]

fn set_anneal_cooling_ratio(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setAnnealCoolingRatio_double(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// ANNEAL: Update iteration per step.

/// It must be \>0. Default value is 10.

/// ## See also

/// setAnnealItePerStep getAnnealItePerStep
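/**
## Example

To get a feel for how the four ANNEAL parameters combine, the sketch below counts the temperature levels of a geometric cooling schedule and the resulting number of candidate moves. It assumes the temperature is multiplied by the cooling ratio after each level and that a fixed number of iterations runs per level; both function names are hypothetical, and the sketch additionally requires a strictly positive final temperature so that the loop terminates quickly.

```rust
// Counts how many temperature levels a geometric cooling schedule visits
// before the temperature drops to `final_t` or below.
// Assumption: T is multiplied by `cooling_ratio` after every level.
fn cooling_steps(initial_t: f64, final_t: f64, cooling_ratio: f64) -> u32 {
    assert!(final_t > 0.0 && final_t < initial_t);
    assert!(cooling_ratio > 0.0 && cooling_ratio < 1.0);
    let mut t = initial_t;
    let mut steps = 0;
    while t > final_t {
        t *= cooling_ratio;
        steps += 1;
    }
    steps
}

// Total number of candidate moves, assuming `ite_per_step` moves per temperature level.
fn total_iterations(initial_t: f64, final_t: f64, cooling_ratio: f64, ite_per_step: u32) -> u32 {
    cooling_steps(initial_t, final_t, cooling_ratio) * ite_per_step
}
```

A cooling ratio closer to 1 lengthens the schedule, while a larger number of iterations per step scales the total work linearly.
*/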

#[inline]

fn set_anneal_ite_per_step(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setAnnealItePerStep_int(self.as_raw_mut_ANN_MLP(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Set/initialize anneal RNG

#[inline]

fn set_anneal_energy_rng(&mut self, rng: &core::RNG) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_setAnnealEnergyRNG_const_RNGR(self.as_raw_mut_ANN_MLP(), rng.as_raw_RNG(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Artificial Neural Networks - Multi-Layer Perceptrons.

///

/// Unlike many other models in ML that are constructed and trained at once, in the MLP model these

/// steps are separated. First, a network with the specified topology is created using the non-default

/// constructor or the method ANN_MLP::create. All the weights are set to zeros. Then, the network is

/// trained using a set of input and output vectors. The training procedure can be repeated more than

/// once, that is, the weights can be adjusted based on the new training data.

///

/// Additional flags for StatModel::train are available: ANN_MLP::TrainFlags.

/// ## See also

/// [ml_intro_ann]

pub struct ANN_MLP {

ptr: *mut c_void

}

opencv_type_boxed! { ANN_MLP }

impl Drop for ANN_MLP {

#[inline]

fn drop(&mut self) {

extern "C" { fn cv_ANN_MLP_delete(instance: *mut c_void); }

unsafe { cv_ANN_MLP_delete(self.as_raw_mut_ANN_MLP()) };

}

}

unsafe impl Send for ANN_MLP {}

impl core::AlgorithmTraitConst for ANN_MLP {

#[inline] fn as_raw_Algorithm(&self) -> *const c_void { self.as_raw() }

}

impl core::AlgorithmTrait for ANN_MLP {

#[inline] fn as_raw_mut_Algorithm(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::StatModelTraitConst for ANN_MLP {

#[inline] fn as_raw_StatModel(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::StatModelTrait for ANN_MLP {

#[inline] fn as_raw_mut_StatModel(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::ANN_MLPTraitConst for ANN_MLP {

#[inline] fn as_raw_ANN_MLP(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::ANN_MLPTrait for ANN_MLP {

#[inline] fn as_raw_mut_ANN_MLP(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl ANN_MLP {

/// Creates an empty model.

///

/// Use StatModel::train to train the model, Algorithm::load\<ANN_MLP\>(filename) to load the pre-trained model.

/// Note that the train method has optional flags: ANN_MLP::TrainFlags.

#[inline]

pub fn create() -> Result<core::Ptr<crate::ml::ANN_MLP>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_create(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::ml::ANN_MLP>::opencv_from_extern(ret) };

Ok(ret)

}

/// Loads and creates a serialized ANN from a file

///

/// Use ANN_MLP::save to serialize and store an ANN to disk.

/// Load the ANN from this file again, by calling this function with the path to the file.

///

/// ## Parameters

/// * filepath: path to serialized ANN

#[inline]

pub fn load(filepath: &str) -> Result<core::Ptr<crate::ml::ANN_MLP>> {

extern_container_arg!(filepath);

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_ANN_MLP_load_const_StringR(filepath.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::ml::ANN_MLP>::opencv_from_extern(ret) };

Ok(ret)

}

}

boxed_cast_base! { ANN_MLP, core::Algorithm, cv_ANN_MLP_to_Algorithm }

/// Constant methods for [crate::ml::Boost]

pub trait BoostTraitConst: crate::ml::DTreesTraitConst {

fn as_raw_Boost(&self) -> *const c_void;

/// Type of the boosting algorithm.

/// See Boost::Types. Default value is Boost::REAL.

/// ## See also

/// setBoostType

#[inline]

fn get_boost_type(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_getBoostType_const(self.as_raw_Boost(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// The number of weak classifiers.

/// Default value is 100.

/// ## See also

/// setWeakCount

#[inline]

fn get_weak_count(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_getWeakCount_const(self.as_raw_Boost(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

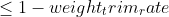

/// A threshold between 0 and 1 used to save computational time.

/// Samples with summary weight ≤ 1 - weight_trim_rate do not participate in the *next*

/// iteration of training. Set this parameter to 0 to turn off this functionality. Default value is 0.95.

/// ## See also

/// setWeightTrimRate

#[inline]

fn get_weight_trim_rate(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_getWeightTrimRate_const(self.as_raw_Boost(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Mutable methods for [crate::ml::Boost]

pub trait BoostTrait: crate::ml::BoostTraitConst + crate::ml::DTreesTrait {

fn as_raw_mut_Boost(&mut self) -> *mut c_void;

/// Type of the boosting algorithm.

/// See Boost::Types. Default value is Boost::REAL.

/// ## See also

/// setBoostType getBoostType

#[inline]

fn set_boost_type(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_setBoostType_int(self.as_raw_mut_Boost(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// The number of weak classifiers.

/// Default value is 100.

/// ## See also

/// setWeakCount getWeakCount

#[inline]

fn set_weak_count(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_setWeakCount_int(self.as_raw_mut_Boost(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// A threshold between 0 and 1 used to save computational time.

/// Samples with summary weight ≤ 1 - weight_trim_rate do not participate in the *next*

/// iteration of training. Set this parameter to 0 to turn off this functionality. Default value is 0.95.

/// ## See also

/// setWeightTrimRate getWeightTrimRate
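/**
## Example

One way to read the weight trimming rule is sketched below: the lightest samples are skipped as long as their summed weight stays within a fraction `1 - weight_trim_rate` of the total weight. This is an illustrative interpretation under that assumption, with a hypothetical function name; it is not the code OpenCV runs.

```rust
// Returns, for each sample, whether it would take part in the next boosting iteration.
// Assumption: trimming removes the lightest samples whose cumulative weight
// does not exceed (1 - weight_trim_rate) of the total weight.
fn participation_mask(weights: &[f64], weight_trim_rate: f64) -> Vec<bool> {
    let mut keep = vec![true; weights.len()];
    if weight_trim_rate <= 0.0 {
        // A rate of 0 turns trimming off.
        return keep;
    }
    let total: f64 = weights.iter().sum();
    let budget = (1.0 - weight_trim_rate) * total;

    // Visit samples from the lightest to the heaviest.
    let mut order: Vec<usize> = (0..weights.len()).collect();
    order.sort_by(|&a, &b| weights[a].total_cmp(&weights[b]));

    let mut cumulative = 0.0;
    for i in order {
        cumulative += weights[i];
        if cumulative > budget {
            break;
        }
        // Still within the budget: this sample is skipped next time.
        keep[i] = false;
    }
    keep
}
```

Because boosting concentrates weight on hard samples, a high rate such as the default usually drops only samples that already carry very little weight.
*/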

#[inline]

fn set_weight_trim_rate(&mut self, val: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_setWeightTrimRate_double(self.as_raw_mut_Boost(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Boosted tree classifier derived from DTrees

/// ## See also

/// [ml_intro_boost]

pub struct Boost {

ptr: *mut c_void

}

opencv_type_boxed! { Boost }

impl Drop for Boost {

#[inline]

fn drop(&mut self) {

extern "C" { fn cv_Boost_delete(instance: *mut c_void); }

unsafe { cv_Boost_delete(self.as_raw_mut_Boost()) };

}

}

unsafe impl Send for Boost {}

impl core::AlgorithmTraitConst for Boost {

#[inline] fn as_raw_Algorithm(&self) -> *const c_void { self.as_raw() }

}

impl core::AlgorithmTrait for Boost {

#[inline] fn as_raw_mut_Algorithm(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::DTreesTraitConst for Boost {

#[inline] fn as_raw_DTrees(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::DTreesTrait for Boost {

#[inline] fn as_raw_mut_DTrees(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::StatModelTraitConst for Boost {

#[inline] fn as_raw_StatModel(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::StatModelTrait for Boost {

#[inline] fn as_raw_mut_StatModel(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::BoostTraitConst for Boost {

#[inline] fn as_raw_Boost(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::BoostTrait for Boost {

#[inline] fn as_raw_mut_Boost(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl Boost {

/// Creates the empty model.

/// Use StatModel::train to train the model, Algorithm::load\<Boost\>(filename) to load the pre-trained model.

#[inline]

pub fn create() -> Result<core::Ptr<crate::ml::Boost>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_create(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::ml::Boost>::opencv_from_extern(ret) };

Ok(ret)

}

/// Loads and creates a serialized Boost from a file

///

/// Use Boost::save to serialize and store a Boost model to disk.

/// Load the Boost from this file again, by calling this function with the path to the file.

/// Optionally specify the node for the file containing the classifier.

///

/// ## Parameters

/// * filepath: path to serialized Boost

/// * nodeName: name of node containing the classifier

///

/// ## C++ default parameters

/// * node_name: String()

#[inline]

pub fn load(filepath: &str, node_name: &str) -> Result<core::Ptr<crate::ml::Boost>> {

extern_container_arg!(filepath);

extern_container_arg!(node_name);

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_Boost_load_const_StringR_const_StringR(filepath.opencv_as_extern(), node_name.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::ml::Boost>::opencv_from_extern(ret) };

Ok(ret)

}

}

boxed_cast_base! { Boost, core::Algorithm, cv_Boost_to_Algorithm }

/// Constant methods for [crate::ml::DTrees]

pub trait DTreesTraitConst: crate::ml::StatModelTraitConst {

fn as_raw_DTrees(&self) -> *const c_void;

/// Cluster possible values of a categorical variable into K\<=maxCategories clusters to

/// find a suboptimal split.

/// If a discrete variable, on which the training procedure tries to make a split, takes more than

/// maxCategories values, the precise best subset estimation may take a very long time because the

/// algorithm is exponential. Instead, many decision tree engines (including our implementation)

/// try to find a sub-optimal split in this case by clustering all the samples into maxCategories

/// clusters, that is, some categories are merged together. The clustering is applied only in n \>

/// 2-class classification problems for categorical variables with N \> maxCategories possible

/// values. In case of regression and 2-class classification the optimal split can be found

/// efficiently without employing clustering, thus the parameter is not used in these cases.

/// Default value is 10.

/// ## See also

/// setMaxCategories
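/**
## Example

The exponential cost mentioned above can be made concrete: an exhaustive search over binary splits of a categorical variable has to consider every way of dividing its values into two non-empty groups. The helper below (a hypothetical name, not part of the bindings) counts those candidates; it says nothing about how OpenCV actually enumerates splits.

```rust
// Number of ways to divide `n` distinct category values into two non-empty groups,
// which equals 2^(n-1) - 1.
fn binary_split_count(n: u32) -> u64 {
    assert!((1..=64).contains(&n), "n must be in 1..=64");
    (1u64 << (n - 1)) - 1
}
```

For 10 categories this gives 511 candidate splits, and for 20 categories it already gives 524287, which is why clustering the values first is attractive.
*/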

#[inline]

fn get_max_categories(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getMaxCategories_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// The maximum possible depth of the tree.

/// That is, the training algorithm attempts to split a node while its depth is less than maxDepth.

/// The root node has zero depth. The actual depth may be smaller if the other termination criteria

/// are met (see the outline of the training procedure in [ml_intro_trees]), and/or if the

/// tree is pruned. Default value is INT_MAX.

/// ## See also

/// setMaxDepth

#[inline]

fn get_max_depth(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getMaxDepth_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If the number of samples in a node is less than this parameter then the node will not be split.

///

/// Default value is 10.

/// ## See also

/// setMinSampleCount

#[inline]

fn get_min_sample_count(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getMinSampleCount_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If CVFolds \> 1 then the algorithm prunes the built decision tree using a K-fold

/// cross-validation procedure, where K is equal to CVFolds.

/// Default value is 10.

/// ## See also

/// setCVFolds

#[inline]

fn get_cv_folds(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getCVFolds_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If true then surrogate splits will be built.

/// These splits make it possible to work with missing data and compute variable importance correctly.

/// Default value is false.

///

/// Note: currently it's not implemented.

/// ## See also

/// setUseSurrogates

#[inline]

fn get_use_surrogates(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getUseSurrogates_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If true then pruning will be harsher.

/// This will make the tree more compact and more resistant to noise in the training data, but a bit less

/// accurate. Default value is true.

/// ## See also

/// setUse1SERule
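/**
## Example

Assuming this flag refers to the usual "one standard error" rule from cost-complexity pruning, the sketch below shows why enabling it prunes more aggressively: instead of the tree with the minimum cross-validated error, the smallest tree whose error is within one standard error of that minimum is chosen. The function name and the data layout are hypothetical.

```rust
// `cv_errors[k]` is the cross-validated error after pruning level `k`;
// a larger `k` means a smaller tree. Returns the chosen pruning level,
// or `None` when no errors are given.
fn select_pruning_level(cv_errors: &[f64], std_error: f64, use_1se_rule: bool) -> Option<usize> {
    let min_error = cv_errors.iter().copied().fold(f64::INFINITY, f64::min);
    let threshold = if use_1se_rule { min_error + std_error } else { min_error };
    // Pick the most heavily pruned tree that is still within the threshold.
    cv_errors.iter().rposition(|&e| e <= threshold)
}
```

With the rule enabled the result can only move toward smaller trees, trading a little accuracy for compactness.
*/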

#[inline]

fn get_use1_se_rule(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getUse1SERule_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If true then pruned branches are physically removed from the tree.

/// Otherwise they are retained and it is possible to get results from the original unpruned (or

/// pruned less aggressively) tree. Default value is true.

/// ## See also

/// setTruncatePrunedTree

#[inline]

fn get_truncate_pruned_tree(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getTruncatePrunedTree_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Termination criteria for regression trees.

/// If all absolute differences between an estimated value in a node and values of train samples

/// in this node are less than this parameter then the node will not be split further. Default

/// value is 0.01f.

/// ## See also

/// setRegressionAccuracy

#[inline]

fn get_regression_accuracy(&self) -> Result<f32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getRegressionAccuracy_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// The array of a priori class probabilities, sorted by the class label value.

///

/// The parameter can be used to tune the decision tree preferences toward a certain class. For

/// example, if you want to detect some rare anomaly occurrence, the training base will likely

/// contain many more normal cases than anomalies, so a very good classification performance

/// will be achieved just by considering every case as normal. To avoid this, the priors can be

/// specified, where the anomaly probability is artificially increased (up to 0.5 or even

/// greater), so the weight of the misclassified anomalies becomes much bigger, and the tree is

/// adjusted properly.

///

/// You can also think about this parameter as weights of prediction categories which determine

/// relative weights that you give to misclassification. That is, if the weight of the first

/// category is 1 and the weight of the second category is 10, then each mistake in predicting

/// the second category is equivalent to making 10 mistakes in predicting the first category.

/// Default value is empty Mat.

/// ## See also

/// setPriors

#[inline]

fn get_priors(&self) -> Result<core::Mat> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getPriors_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Mat::opencv_from_extern(ret) };

Ok(ret)

}

/// Returns indices of root nodes

#[inline]

fn get_roots(&self) -> Result<core::Vector<i32>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getRoots_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Vector::<i32>::opencv_from_extern(ret) };

Ok(ret)

}

/// Returns all the nodes

///

/// All the node indices are indices in the returned vector.

#[inline]

fn get_nodes(&self) -> Result<core::Vector<crate::ml::DTrees_Node>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getNodes_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Vector::<crate::ml::DTrees_Node>::opencv_from_extern(ret) };

Ok(ret)

}

/// Returns all the splits

///

/// All the split indices are indices in the returned vector.

#[inline]

fn get_splits(&self) -> Result<core::Vector<crate::ml::DTrees_Split>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getSplits_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Vector::<crate::ml::DTrees_Split>::opencv_from_extern(ret) };

Ok(ret)

}

/// Returns all the bitsets for categorical splits

///

/// Split::subsetOfs is an offset in the returned vector

#[inline]

fn get_subsets(&self) -> Result<core::Vector<i32>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_getSubsets_const(self.as_raw_DTrees(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Vector::<i32>::opencv_from_extern(ret) };

Ok(ret)

}

}

/// Mutable methods for [crate::ml::DTrees]

pub trait DTreesTrait: crate::ml::DTreesTraitConst + crate::ml::StatModelTrait {

fn as_raw_mut_DTrees(&mut self) -> *mut c_void;

/// Cluster possible values of a categorical variable into K\<=maxCategories clusters to

/// find a suboptimal split.

/// If a discrete variable, on which the training procedure tries to make a split, takes more than

/// maxCategories values, the precise best subset estimation may take a very long time because the

/// algorithm is exponential. Instead, many decision tree engines (including our implementation)

/// try to find a sub-optimal split in this case by clustering all the samples into maxCategories

/// clusters, that is, some categories are merged together. The clustering is applied only in n \>

/// 2-class classification problems for categorical variables with N \> maxCategories possible

/// values. In case of regression and 2-class classification the optimal split can be found

/// efficiently without employing clustering, thus the parameter is not used in these cases.

/// Default value is 10.

/// ## See also

/// setMaxCategories getMaxCategories

#[inline]

fn set_max_categories(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setMaxCategories_int(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// The maximum possible depth of the tree.

/// That is, the training algorithm attempts to split a node while its depth is less than maxDepth.

/// The root node has zero depth. The actual depth may be smaller if the other termination criteria

/// are met (see the outline of the training procedure in [ml_intro_trees]), and/or if the

/// tree is pruned. Default value is INT_MAX.

/// ## See also

/// setMaxDepth getMaxDepth

#[inline]

fn set_max_depth(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setMaxDepth_int(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If the number of samples in a node is less than this parameter then the node will not be split.

///

/// Default value is 10.

/// ## See also

/// setMinSampleCount getMinSampleCount

#[inline]

fn set_min_sample_count(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setMinSampleCount_int(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If CVFolds \> 1 then the algorithm prunes the built decision tree using a K-fold

/// cross-validation procedure, where K is equal to CVFolds.

/// Default value is 10.

/// ## See also

/// setCVFolds getCVFolds

#[inline]

fn set_cv_folds(&mut self, val: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setCVFolds_int(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If true then surrogate splits will be built.

/// These splits make it possible to work with missing data and compute variable importance correctly.

/// Default value is false.

///

/// Note: currently it's not implemented.

/// ## See also

/// setUseSurrogates getUseSurrogates

#[inline]

fn set_use_surrogates(&mut self, val: bool) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setUseSurrogates_bool(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If true then pruning will be harsher.

/// This will make the tree more compact and more resistant to noise in the training data, but a bit less

/// accurate. Default value is true.

/// ## See also

/// setUse1SERule getUse1SERule

#[inline]

fn set_use1_se_rule(&mut self, val: bool) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setUse1SERule_bool(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// If true then pruned branches are physically removed from the tree.

/// Otherwise they are retained and it is possible to get results from the original unpruned (or

/// pruned less aggressively) tree. Default value is true.

/// ## See also

/// setTruncatePrunedTree getTruncatePrunedTree

#[inline]

fn set_truncate_pruned_tree(&mut self, val: bool) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setTruncatePrunedTree_bool(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Termination criteria for regression trees.

/// If all absolute differences between an estimated value in a node and values of train samples

/// in this node are less than this parameter then the node will not be split further. Default

/// value is 0.01f.

/// ## See also

/// setRegressionAccuracy getRegressionAccuracy

#[inline]

fn set_regression_accuracy(&mut self, val: f32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setRegressionAccuracy_float(self.as_raw_mut_DTrees(), val, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// The array of a priori class probabilities, sorted by the class label value.

///

/// The parameter can be used to tune the decision tree preferences toward a certain class. For

/// example, if you want to detect some rare anomaly occurrence, the training base will likely

/// contain many more normal cases than anomalies, so a very good classification performance

/// will be achieved just by considering every case as normal. To avoid this, the priors can be

/// specified, where the anomaly probability is artificially increased (up to 0.5 or even

/// greater), so the weight of the misclassified anomalies becomes much bigger, and the tree is

/// adjusted properly.

///

/// You can also think about this parameter as weights of prediction categories which determine

/// relative weights that you give to misclassification. That is, if the weight of the first

/// category is 1 and the weight of the second category is 10, then each mistake in predicting

/// the second category is equivalent to making 10 mistakes in predicting the first category.

/// Default value is empty Mat.

/// ## See also

/// setPriors getPriors
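/**
## Example

The "weights of prediction categories" reading of the priors can be illustrated with a weighted error count. The sketch assumes that the cost of a mistake is looked up by the true class of the sample; the function name and this lookup convention are illustrative assumptions rather than a description of the training code.

```rust
// Sums the cost of all misclassified samples, where a mistake on a sample
// whose true class is `c` costs `class_weights[c]`.
// Assumption: the weight is indexed by the true class label.
fn weighted_error(truth: &[usize], predicted: &[usize], class_weights: &[f64]) -> f64 {
    truth
        .iter()
        .zip(predicted)
        .filter(|(t, p)| t != p)
        .map(|(&t, _)| class_weights[t])
        .sum()
}
```

With weights of 1 and 10, a single mistake on the second category contributes as much as ten mistakes on the first, as in the description.
*/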

#[inline]

fn set_priors(&mut self, val: &core::Mat) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_setPriors_const_MatR(self.as_raw_mut_DTrees(), val.as_raw_Mat(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// The class represents a single decision tree or a collection of decision trees.

///

/// The current public interface of the class allows the user to train only a single decision tree; however,

/// the class is capable of storing multiple decision trees and using them for prediction (by summing

/// responses or using a voting scheme), and the classes derived from DTrees (such as RTrees and Boost)

/// use this capability to implement decision tree ensembles.

/// ## See also

/// [ml_intro_trees]

pub struct DTrees {

ptr: *mut c_void

}

opencv_type_boxed! { DTrees }

impl Drop for DTrees {

#[inline]

fn drop(&mut self) {

extern "C" { fn cv_DTrees_delete(instance: *mut c_void); }

unsafe { cv_DTrees_delete(self.as_raw_mut_DTrees()) };

}

}

unsafe impl Send for DTrees {}

impl core::AlgorithmTraitConst for DTrees {

#[inline] fn as_raw_Algorithm(&self) -> *const c_void { self.as_raw() }

}

impl core::AlgorithmTrait for DTrees {

#[inline] fn as_raw_mut_Algorithm(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::StatModelTraitConst for DTrees {

#[inline] fn as_raw_StatModel(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::StatModelTrait for DTrees {

#[inline] fn as_raw_mut_StatModel(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl crate::ml::DTreesTraitConst for DTrees {

#[inline] fn as_raw_DTrees(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::DTreesTrait for DTrees {

#[inline] fn as_raw_mut_DTrees(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl DTrees {

/// Creates the empty model

///

/// The static method creates an empty decision tree with the specified parameters. It should then be

/// trained using the train method (see StatModel::train). Alternatively, you can load the model from

/// a file using Algorithm::load\<DTrees\>(filename).

#[inline]

pub fn create() -> Result<core::Ptr<crate::ml::DTrees>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_create(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::ml::DTrees>::opencv_from_extern(ret) };

Ok(ret)

}

/// Loads and creates a serialized DTrees from a file

///

/// Use DTrees::save to serialize and store a DTrees model to disk.

/// Load the DTrees from this file again, by calling this function with the path to the file.

/// Optionally specify the node for the file containing the classifier.

///

/// ## Parameters

/// * filepath: path to serialized DTree

/// * nodeName: name of node containing the classifier

///

/// ## C++ default parameters

/// * node_name: String()

#[inline]

pub fn load(filepath: &str, node_name: &str) -> Result<core::Ptr<crate::ml::DTrees>> {

extern_container_arg!(filepath);

extern_container_arg!(node_name);

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_load_const_StringR_const_StringR(filepath.opencv_as_extern(), node_name.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::ml::DTrees>::opencv_from_extern(ret) };

Ok(ret)

}

}

boxed_cast_base! { DTrees, core::Algorithm, cv_DTrees_to_Algorithm }

/// Constant methods for [crate::ml::DTrees_Node]

pub trait DTrees_NodeTraitConst {

fn as_raw_DTrees_Node(&self) -> *const c_void;

/// Value at the node: a class label in case of classification or estimated

/// function value in case of regression.

#[inline]

fn value(&self) -> f64 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropValue_const(self.as_raw_DTrees_Node()) };

ret

}

/// Class index normalized to 0..class_count-1 range and assigned to the

/// node. It is used internally in classification trees and tree ensembles.

#[inline]

fn class_idx(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropClassIdx_const(self.as_raw_DTrees_Node()) };

ret

}

/// Index of the parent node

#[inline]

fn parent(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropParent_const(self.as_raw_DTrees_Node()) };

ret

}

/// Index of the left child node

#[inline]

fn left(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropLeft_const(self.as_raw_DTrees_Node()) };

ret

}

/// Index of the right child node

#[inline]

fn right(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropRight_const(self.as_raw_DTrees_Node()) };

ret

}

/// Default direction to follow (-1: left or +1: right). It is used when the

/// value of the split variable is missing.

#[inline]

fn default_dir(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropDefaultDir_const(self.as_raw_DTrees_Node()) };

ret

}

/// Index of the first split

#[inline]

fn split(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Node_getPropSplit_const(self.as_raw_DTrees_Node()) };

ret

}

}

/// Mutable methods for [crate::ml::DTrees_Node]

pub trait DTrees_NodeTrait: crate::ml::DTrees_NodeTraitConst {

fn as_raw_mut_DTrees_Node(&mut self) -> *mut c_void;

/// Value at the node: a class label in case of classification or estimated

/// function value in case of regression.

#[inline]

fn set_value(&mut self, val: f64) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropValue_double(self.as_raw_mut_DTrees_Node(), val) };

ret

}

/// Class index normalized to 0..class_count-1 range and assigned to the

/// node. It is used internally in classification trees and tree ensembles.

#[inline]

fn set_class_idx(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropClassIdx_int(self.as_raw_mut_DTrees_Node(), val) };

ret

}

/// Index of the parent node

#[inline]

fn set_parent(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropParent_int(self.as_raw_mut_DTrees_Node(), val) };

ret

}

/// Index of the left child node

#[inline]

fn set_left(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropLeft_int(self.as_raw_mut_DTrees_Node(), val) };

ret

}

/// Index of the right child node

#[inline]

fn set_right(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropRight_int(self.as_raw_mut_DTrees_Node(), val) };

ret

}

/// Default direction to follow (-1: left or +1: right). It is used when the

/// value of the split variable is missing.

#[inline]

fn set_default_dir(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropDefaultDir_int(self.as_raw_mut_DTrees_Node(), val) };

ret

}

/// Index of the first split

#[inline]

fn set_split(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Node_setPropSplit_int(self.as_raw_mut_DTrees_Node(), val) };

ret

}

}

/// The class represents a decision tree node.

pub struct DTrees_Node {

ptr: *mut c_void

}

opencv_type_boxed! { DTrees_Node }

impl Drop for DTrees_Node {

#[inline]

fn drop(&mut self) {

extern "C" { fn cv_DTrees_Node_delete(instance: *mut c_void); }

unsafe { cv_DTrees_Node_delete(self.as_raw_mut_DTrees_Node()) };

}

}

unsafe impl Send for DTrees_Node {}

impl crate::ml::DTrees_NodeTraitConst for DTrees_Node {

#[inline] fn as_raw_DTrees_Node(&self) -> *const c_void { self.as_raw() }

}

impl crate::ml::DTrees_NodeTrait for DTrees_Node {

#[inline] fn as_raw_mut_DTrees_Node(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl DTrees_Node {

#[inline]

pub fn default() -> Result<crate::ml::DTrees_Node> {

return_send!(via ocvrs_return);

unsafe { sys::cv_ml_DTrees_Node_Node(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::ml::DTrees_Node::opencv_from_extern(ret) };

Ok(ret)

}

}

/// Constant methods for [crate::ml::DTrees_Split]

pub trait DTrees_SplitTraitConst {

fn as_raw_DTrees_Split(&self) -> *const c_void;

/// Index of variable on which the split is created.

#[inline]

fn var_idx(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Split_getPropVarIdx_const(self.as_raw_DTrees_Split()) };

ret

}

/// If true, then the inverse split rule is used (i.e. left and right

/// branches are exchanged in the rule expressions below).

#[inline]

fn inversed(&self) -> bool {

let ret = unsafe { sys::cv_ml_DTrees_Split_getPropInversed_const(self.as_raw_DTrees_Split()) };

ret

}

/// The split quality, a positive number. It is used to choose the best split.

#[inline]

fn quality(&self) -> f32 {

let ret = unsafe { sys::cv_ml_DTrees_Split_getPropQuality_const(self.as_raw_DTrees_Split()) };

ret

}

/// Index of the next split in the list of splits for the node

#[inline]

fn next(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Split_getPropNext_const(self.as_raw_DTrees_Split()) };

ret

}

/// The threshold value in case of a split on an ordered variable.

/// The rule is:

/// ```C++

/// if var_value < c

/// then next_node <- left

/// else next_node <- right

/// ```
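///
/// A minimal Rust sketch of the same rule, for illustration only. The free function
/// `goes_left` is hypothetical (it is not part of these bindings), and combining the
/// threshold test with `inversed` by swapping the two branches is an assumption based on
/// the description of `inversed` above.
///
/// ```rust
/// // Returns true when a sample should descend into the left child.
/// fn goes_left(var_value: f32, c: f32, inversed: bool) -> bool {
///     // Plain rule: go left when var_value < c; `inversed` swaps the two branches.
///     (var_value < c) != inversed
/// }
///
/// fn main() {
///     assert!(goes_left(0.5, 1.0, false));
///     assert!(!goes_left(1.5, 1.0, false));
///     // With the inverse rule the same samples take the opposite branches.
///     assert!(!goes_left(0.5, 1.0, true));
///     assert!(goes_left(1.5, 1.0, true));
/// }
/// ```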

///

#[inline]

fn c(&self) -> f32 {

let ret = unsafe { sys::cv_ml_DTrees_Split_getPropC_const(self.as_raw_DTrees_Split()) };

ret

}

/// Offset of the bitset used by the split on a categorical variable.

/// The rule is:

/// ```C++

/// if bitset[var_value] == 1

/// then next_node <- left

/// else next_node <- right

/// ```
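///
/// A minimal Rust sketch of the bitset test, for illustration only. The function
/// `goes_left_categorical` is hypothetical, and the layout it assumes (one bit per
/// category, packed into 32-bit words that start at `subset_ofs` in a shared subset
/// array) is an assumption, not something these bindings state.
///
/// ```rust
/// // Returns true when the bit for `category` is set in the split's bitset.
/// fn goes_left_categorical(subsets: &[i32], subset_ofs: usize, category: usize) -> bool {
///     // Select the 32-bit word holding this category's bit, then test that bit.
///     let word = subsets[subset_ofs + (category >> 5)];
///     (word >> (category & 31)) & 1 == 1
/// }
///
/// fn main() {
///     // Word 0 belongs to another split; words 1 and 2 hold this split's bitset.
///     let subsets = [0, 0b101, 1];
///     assert!(goes_left_categorical(&subsets, 1, 0));
///     assert!(!goes_left_categorical(&subsets, 1, 1));
///     assert!(goes_left_categorical(&subsets, 1, 2));
///     assert!(goes_left_categorical(&subsets, 1, 32));
/// }
/// ```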

///

#[inline]

fn subset_ofs(&self) -> i32 {

let ret = unsafe { sys::cv_ml_DTrees_Split_getPropSubsetOfs_const(self.as_raw_DTrees_Split()) };

ret

}

}

/// Mutable methods for [crate::ml::DTrees_Split]

pub trait DTrees_SplitTrait: crate::ml::DTrees_SplitTraitConst {

fn as_raw_mut_DTrees_Split(&mut self) -> *mut c_void;

/// Index of variable on which the split is created.

#[inline]

fn set_var_idx(&mut self, val: i32) {

let ret = unsafe { sys::cv_ml_DTrees_Split_setPropVarIdx_int(self.as_raw_mut_DTrees_Split(), val) };

ret

}

/// If true, then the inverse split rule is used (i.e. left and right

/// branches are exchanged in the rule expressions below).

#[inline]

fn set_inversed(&mut self, val: bool) {

let ret = unsafe { sys::cv_ml_DTrees_Split_setPropInversed_bool(self.as_raw_mut_DTrees_Split(), val) };

ret

}

/// The split quality, a positive number. It is used to choose the best split.

#[inline]

fn set_quality(&mut self, val: f32) {