pub mod imgproc {

//! # Image Processing

//!

//! This module includes image-processing functions.

//! # Image Filtering

//!

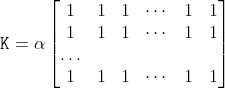

//! Functions and classes described in this section are used to perform various linear or non-linear

//! filtering operations on 2D images (represented as Mat's). It means that for each pixel location

//!  in the source image (normally, rectangular), its neighborhood is considered and used to

//! compute the response. In case of a linear filter, it is a weighted sum of pixel values. In case of

//! morphological operations, it is the minimum or maximum values, and so on. The computed response is

//! stored in the destination image at the same location . It means that the output image

//! will be of the same size as the input image. Normally, the functions support multi-channel arrays,

//! in which case every channel is processed independently. Therefore, the output image will also have

//! the same number of channels as the input one.

//!

//! Another common feature of the functions and classes described in this section is that, unlike

//! simple arithmetic functions, they need to extrapolate values of some non-existing pixels. For

//! example, if you want to smooth an image using a Gaussian  filter, then, when

//! processing the left-most pixels in each row, you need pixels to the left of them, that is, outside

//! of the image. You can let these pixels be the same as the left-most image pixels ("replicated

//! border" extrapolation method), or assume that all the non-existing pixels are zeros ("constant

//! border" extrapolation method), and so on. OpenCV enables you to specify the extrapolation method.

//! For details, see #BorderTypes

//!

//! @anchor filter_depths

//! ### Depth combinations

//! Input depth (src.depth()) | Output depth (ddepth)

//! --------------------------|----------------------

//! CV_8U | -1/CV_16S/CV_32F/CV_64F

//! CV_16U/CV_16S | -1/CV_32F/CV_64F

//! CV_32F | -1/CV_32F

//! CV_64F | -1/CV_64F

//!

//!

//! Note: when ddepth=-1, the output image will have the same depth as the source.

//!

//!

//! Note: if you need double floating-point accuracy and using single floating-point input data

//! (CV_32F input and CV_64F output depth combination), you can use [Mat].convertTo to convert

//! the input data to the desired precision.

//!

//! # Geometric Image Transformations

//!

//! The functions in this section perform various geometrical transformations of 2D images. They do not

//! change the image content but deform the pixel grid and map this deformed grid to the destination

//! image. In fact, to avoid sampling artifacts, the mapping is done in the reverse order, from

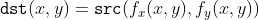

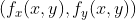

//! destination to the source. That is, for each pixel  of the destination image, the

//! functions compute coordinates of the corresponding "donor" pixel in the source image and copy the

//! pixel value:

//!

//!

//!

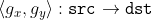

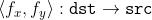

//! In case when you specify the forward mapping , the OpenCV functions first compute the corresponding inverse mapping

//!  and then use the above formula.

//!

//! The actual implementations of the geometrical transformations, from the most generic remap and to

//! the simplest and the fastest resize, need to solve two main problems with the above formula:

//!

//! - Extrapolation of non-existing pixels. Similarly to the filtering functions described in the

//! previous section, for some , either one of , or , or both

//! of them may fall outside of the image. In this case, an extrapolation method needs to be used.

//! OpenCV provides the same selection of extrapolation methods as in the filtering functions. In

//! addition, it provides the method #BORDER_TRANSPARENT. This means that the corresponding pixels in

//! the destination image will not be modified at all.

//!

//! - Interpolation of pixel values. Usually  and  are floating-point

//! numbers. This means that  can be either an affine or perspective

//! transformation, or radial lens distortion correction, and so on. So, a pixel value at fractional

//! coordinates needs to be retrieved. In the simplest case, the coordinates can be just rounded to the

//! nearest integer coordinates and the corresponding pixel can be used. This is called a

//! nearest-neighbor interpolation. However, a better result can be achieved by using more

//! sophisticated [interpolation methods](http://en.wikipedia.org/wiki/Multivariate_interpolation) ,

//! where a polynomial function is fit into some neighborhood of the computed pixel , and then the value of the polynomial at  is taken as the

//! interpolated pixel value. In OpenCV, you can choose between several interpolation methods. See

//! #resize for details.

//!

//!

//! Note: The geometrical transformations do not work with `CV_8S` or `CV_32S` images.

//!

//! # Miscellaneous Image Transformations

//! # Drawing Functions

//!

//! Drawing functions work with matrices/images of arbitrary depth. The boundaries of the shapes can be

//! rendered with antialiasing (implemented only for 8-bit images for now). All the functions include

//! the parameter color that uses an RGB value (that may be constructed with the Scalar constructor )

//! for color images and brightness for grayscale images. For color images, the channel ordering is

//! normally *Blue, Green, Red*. This is what imshow, imread, and imwrite expect. So, if you form a

//! color using the Scalar constructor, it should look like:

//!

//!

//!

//! If you are using your own image rendering and I/O functions, you can use any channel ordering. The

//! drawing functions process each channel independently and do not depend on the channel order or even

//! on the used color space. The whole image can be converted from BGR to RGB or to a different color

//! space using cvtColor .

//!

//! If a drawn figure is partially or completely outside the image, the drawing functions clip it. Also,

//! many drawing functions can handle pixel coordinates specified with sub-pixel accuracy. This means

//! that the coordinates can be passed as fixed-point numbers encoded as integers. The number of

//! fractional bits is specified by the shift parameter and the real point coordinates are calculated as

//!  . This feature is

//! especially effective when rendering antialiased shapes.

//!

//!

//! Note: The functions do not support alpha-transparency when the target image is 4-channel. In this

//! case, the color[3] is simply copied to the repainted pixels. Thus, if you want to paint

//! semi-transparent shapes, you can paint them in a separate buffer and then blend it with the main

//! image.

//!

//! # Color Space Conversions

//! # ColorMaps in OpenCV

//!

//! The human perception isn't built for observing fine changes in grayscale images. Human eyes are more

//! sensitive to observing changes between colors, so you often need to recolor your grayscale images to

//! get a clue about them. OpenCV now comes with various colormaps to enhance the visualization in your

//! computer vision application.

//!

//! In OpenCV you only need applyColorMap to apply a colormap on a given image. The following sample

//! code reads the path to an image from command line, applies a Jet colormap on it and shows the

//! result:

//!

//! @include snippets/imgproc_applyColorMap.cpp

//! ## See also

//! #ColormapTypes

//!

//! # Planar Subdivision

//!

//! The Subdiv2D class described in this section is used to perform various planar subdivision on

//! a set of 2D points (represented as vector of Point2f). OpenCV subdivides a plane into triangles

//! using the Delaunay's algorithm, which corresponds to the dual graph of the Voronoi diagram.

//! In the figure below, the Delaunay's triangulation is marked with black lines and the Voronoi

//! diagram with red lines.

//!

//!

//!

//! The subdivisions can be used for the 3D piece-wise transformation of a plane, morphing, fast

//! location of points on the plane, building special graphs (such as NNG,RNG), and so forth.

//!

//! # Histograms

//! # Structural Analysis and Shape Descriptors

//! # Motion Analysis and Object Tracking

//! # Feature Detection

//! # Object Detection

//! # Image Segmentation

//! # C API

//! # Hardware Acceleration Layer

//! # Functions

//! # Interface

use crate::{mod_prelude::*, core, sys, types};

pub mod prelude {

pub use { super::GeneralizedHoughTraitConst, super::GeneralizedHoughTrait, super::GeneralizedHoughBallardTraitConst, super::GeneralizedHoughBallardTrait, super::GeneralizedHoughGuilTraitConst, super::GeneralizedHoughGuilTrait, super::CLAHETraitConst, super::CLAHETrait, super::Subdiv2DTraitConst, super::Subdiv2DTrait, super::LineSegmentDetectorTraitConst, super::LineSegmentDetectorTrait, super::LineIteratorTraitConst, super::LineIteratorTrait, super::IntelligentScissorsMBTraitConst, super::IntelligentScissorsMBTrait };

}

/// the threshold value  is a weighted sum (cross-correlation with a Gaussian

/// window) of the  neighborhood of

/// minus C . The default sigma (standard deviation) is used for the specified blockSize . See

/// #getGaussianKernel

pub const ADAPTIVE_THRESH_GAUSSIAN_C: i32 = 1;

/// the threshold value  is a mean of the  neighborhood of  minus C

pub const ADAPTIVE_THRESH_MEAN_C: i32 = 0;

/// Same as CCL_GRANA. It is preferable to use the flag with the name of the algorithm (CCL_BBDT) rather than the one with the name of the first author (CCL_GRANA).

pub const CCL_BBDT: i32 = 4;

/// Spaghetti [Bolelli2019](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2019) algorithm for 8-way connectivity, Spaghetti4C [Bolelli2021](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2021) algorithm for 4-way connectivity. The parallel implementation described in [Bolelli2017](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2017) is available for both Spaghetti and Spaghetti4C.

pub const CCL_BOLELLI: i32 = 2;

/// Spaghetti [Bolelli2019](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2019) algorithm for 8-way connectivity, Spaghetti4C [Bolelli2021](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2021) algorithm for 4-way connectivity.

pub const CCL_DEFAULT: i32 = -1;

/// BBDT [Grana2010](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Grana2010) algorithm for 8-way connectivity, SAUF algorithm for 4-way connectivity. The parallel implementation described in [Bolelli2017](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2017) is available for both BBDT and SAUF.

pub const CCL_GRANA: i32 = 1;

/// Same as CCL_WU. It is preferable to use the flag with the name of the algorithm (CCL_SAUF) rather than the one with the name of the first author (CCL_WU).

pub const CCL_SAUF: i32 = 3;

/// Same as CCL_BOLELLI. It is preferable to use the flag with the name of the algorithm (CCL_SPAGHETTI) rather than the one with the name of the first author (CCL_BOLELLI).

pub const CCL_SPAGHETTI: i32 = 5;

/// SAUF [Wu2009](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Wu2009) algorithm for 8-way connectivity, SAUF algorithm for 4-way connectivity. The parallel implementation described in [Bolelli2017](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2017) is available for SAUF.

pub const CCL_WU: i32 = 0;

/// The total area (in pixels) of the connected component

pub const CC_STAT_AREA: i32 = 4;

/// The vertical size of the bounding box

pub const CC_STAT_HEIGHT: i32 = 3;

/// The leftmost (x) coordinate which is the inclusive start of the bounding

/// box in the horizontal direction.

pub const CC_STAT_LEFT: i32 = 0;

/// Max enumeration value. Used internally only for memory allocation

pub const CC_STAT_MAX: i32 = 5;

/// The topmost (y) coordinate which is the inclusive start of the bounding

/// box in the vertical direction.

pub const CC_STAT_TOP: i32 = 1;

/// The horizontal size of the bounding box

pub const CC_STAT_WIDTH: i32 = 2;

/// stores absolutely all the contour points. That is, any 2 subsequent points (x1,y1) and

/// (x2,y2) of the contour will be either horizontal, vertical or diagonal neighbors, that is,

/// max(abs(x1-x2),abs(y2-y1))==1.

pub const CHAIN_APPROX_NONE: i32 = 1;

/// compresses horizontal, vertical, and diagonal segments and leaves only their end points.

/// For example, an up-right rectangular contour is encoded with 4 points.

pub const CHAIN_APPROX_SIMPLE: i32 = 2;

/// applies one of the flavors of the Teh-Chin chain approximation algorithm [TehChin89](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_TehChin89)

pub const CHAIN_APPROX_TC89_KCOS: i32 = 4;

/// applies one of the flavors of the Teh-Chin chain approximation algorithm [TehChin89](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_TehChin89)

pub const CHAIN_APPROX_TC89_L1: i32 = 3;

///

pub const COLORMAP_AUTUMN: i32 = 0;

///

pub const COLORMAP_BONE: i32 = 1;

///

pub const COLORMAP_CIVIDIS: i32 = 17;

///

pub const COLORMAP_COOL: i32 = 8;

///

pub const COLORMAP_DEEPGREEN: i32 = 21;

///

pub const COLORMAP_HOT: i32 = 11;

///

pub const COLORMAP_HSV: i32 = 9;

///

pub const COLORMAP_INFERNO: i32 = 14;

///

pub const COLORMAP_JET: i32 = 2;

///

pub const COLORMAP_MAGMA: i32 = 13;

///

pub const COLORMAP_OCEAN: i32 = 5;

///

pub const COLORMAP_PARULA: i32 = 12;

///

pub const COLORMAP_PINK: i32 = 10;

///

pub const COLORMAP_PLASMA: i32 = 15;

///

pub const COLORMAP_RAINBOW: i32 = 4;

///

pub const COLORMAP_SPRING: i32 = 7;

///

pub const COLORMAP_SUMMER: i32 = 6;

///

pub const COLORMAP_TURBO: i32 = 20;

///

pub const COLORMAP_TWILIGHT: i32 = 18;

///

pub const COLORMAP_TWILIGHT_SHIFTED: i32 = 19;

///

pub const COLORMAP_VIRIDIS: i32 = 16;

///

pub const COLORMAP_WINTER: i32 = 3;

/// convert between RGB/BGR and BGR555 (16-bit images)

pub const COLOR_BGR2BGR555: i32 = 22;

/// convert between RGB/BGR and BGR565 (16-bit images)

pub const COLOR_BGR2BGR565: i32 = 12;

/// add alpha channel to RGB or BGR image

pub const COLOR_BGR2BGRA: i32 = 0;

/// convert between RGB/BGR and grayscale, [color_convert_rgb_gray] "color conversions"

pub const COLOR_BGR2GRAY: i32 = 6;

/// convert RGB/BGR to HLS (hue lightness saturation) with H range 0..180 if 8 bit image, [color_convert_rgb_hls] "color conversions"

pub const COLOR_BGR2HLS: i32 = 52;

/// convert RGB/BGR to HLS (hue lightness saturation) with H range 0..255 if 8 bit image, [color_convert_rgb_hls] "color conversions"

pub const COLOR_BGR2HLS_FULL: i32 = 68;

/// convert RGB/BGR to HSV (hue saturation value) with H range 0..180 if 8 bit image, [color_convert_rgb_hsv] "color conversions"

pub const COLOR_BGR2HSV: i32 = 40;

/// convert RGB/BGR to HSV (hue saturation value) with H range 0..255 if 8 bit image, [color_convert_rgb_hsv] "color conversions"

pub const COLOR_BGR2HSV_FULL: i32 = 66;

/// convert RGB/BGR to CIE Lab, [color_convert_rgb_lab] "color conversions"

pub const COLOR_BGR2Lab: i32 = 44;

/// convert RGB/BGR to CIE Luv, [color_convert_rgb_luv] "color conversions"

pub const COLOR_BGR2Luv: i32 = 50;

pub const COLOR_BGR2RGB: i32 = 4;

/// convert between RGB and BGR color spaces (with or without alpha channel)

pub const COLOR_BGR2RGBA: i32 = 2;

/// convert RGB/BGR to CIE XYZ, [color_convert_rgb_xyz] "color conversions"

pub const COLOR_BGR2XYZ: i32 = 32;

/// convert RGB/BGR to luma-chroma (aka YCC), [color_convert_rgb_ycrcb] "color conversions"

pub const COLOR_BGR2YCrCb: i32 = 36;

/// convert between RGB/BGR and YUV

pub const COLOR_BGR2YUV: i32 = 82;

/// RGB to YUV 4:2:0 family

pub const COLOR_BGR2YUV_I420: i32 = 128;

/// RGB to YUV 4:2:0 family

pub const COLOR_BGR2YUV_IYUV: i32 = 128;

/// RGB to YUV 4:2:0 family

pub const COLOR_BGR2YUV_YV12: i32 = 132;

pub const COLOR_BGR5552BGR: i32 = 24;

pub const COLOR_BGR5552BGRA: i32 = 28;

pub const COLOR_BGR5552GRAY: i32 = 31;

pub const COLOR_BGR5552RGB: i32 = 25;

pub const COLOR_BGR5552RGBA: i32 = 29;

pub const COLOR_BGR5652BGR: i32 = 14;

pub const COLOR_BGR5652BGRA: i32 = 18;

pub const COLOR_BGR5652GRAY: i32 = 21;

pub const COLOR_BGR5652RGB: i32 = 15;

pub const COLOR_BGR5652RGBA: i32 = 19;

/// remove alpha channel from RGB or BGR image

pub const COLOR_BGRA2BGR: i32 = 1;

pub const COLOR_BGRA2BGR555: i32 = 26;

pub const COLOR_BGRA2BGR565: i32 = 16;

pub const COLOR_BGRA2GRAY: i32 = 10;

pub const COLOR_BGRA2RGB: i32 = 3;

pub const COLOR_BGRA2RGBA: i32 = 5;

/// RGB to YUV 4:2:0 family

pub const COLOR_BGRA2YUV_I420: i32 = 130;

/// RGB to YUV 4:2:0 family

pub const COLOR_BGRA2YUV_IYUV: i32 = 130;

/// RGB to YUV 4:2:0 family

pub const COLOR_BGRA2YUV_YV12: i32 = 134;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2BGR: i32 = 46;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2BGRA: i32 = 139;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2BGR_EA: i32 = 135;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2BGR_VNG: i32 = 62;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2GRAY: i32 = 86;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2RGB: i32 = 48;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2RGBA: i32 = 141;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2RGB_EA: i32 = 137;

/// equivalent to RGGB Bayer pattern

pub const COLOR_BayerBG2RGB_VNG: i32 = 64;

pub const COLOR_BayerBGGR2BGR: i32 = 48;

pub const COLOR_BayerBGGR2BGRA: i32 = 141;

pub const COLOR_BayerBGGR2BGR_EA: i32 = 137;

pub const COLOR_BayerBGGR2BGR_VNG: i32 = 64;

pub const COLOR_BayerBGGR2GRAY: i32 = 88;

pub const COLOR_BayerBGGR2RGB: i32 = 46;

pub const COLOR_BayerBGGR2RGBA: i32 = 139;

pub const COLOR_BayerBGGR2RGB_EA: i32 = 135;

pub const COLOR_BayerBGGR2RGB_VNG: i32 = 62;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2BGR: i32 = 47;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2BGRA: i32 = 140;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2BGR_EA: i32 = 136;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2BGR_VNG: i32 = 63;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2GRAY: i32 = 87;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2RGB: i32 = 49;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2RGBA: i32 = 142;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2RGB_EA: i32 = 138;

/// equivalent to GRBG Bayer pattern

pub const COLOR_BayerGB2RGB_VNG: i32 = 65;

pub const COLOR_BayerGBRG2BGR: i32 = 49;

pub const COLOR_BayerGBRG2BGRA: i32 = 142;

pub const COLOR_BayerGBRG2BGR_EA: i32 = 138;

pub const COLOR_BayerGBRG2BGR_VNG: i32 = 65;

pub const COLOR_BayerGBRG2GRAY: i32 = 89;

pub const COLOR_BayerGBRG2RGB: i32 = 47;

pub const COLOR_BayerGBRG2RGBA: i32 = 140;

pub const COLOR_BayerGBRG2RGB_EA: i32 = 136;

pub const COLOR_BayerGBRG2RGB_VNG: i32 = 63;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2BGR: i32 = 49;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2BGRA: i32 = 142;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2BGR_EA: i32 = 138;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2BGR_VNG: i32 = 65;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2GRAY: i32 = 89;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2RGB: i32 = 47;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2RGBA: i32 = 140;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2RGB_EA: i32 = 136;

/// equivalent to GBRG Bayer pattern

pub const COLOR_BayerGR2RGB_VNG: i32 = 63;

pub const COLOR_BayerGRBG2BGR: i32 = 47;

pub const COLOR_BayerGRBG2BGRA: i32 = 140;

pub const COLOR_BayerGRBG2BGR_EA: i32 = 136;

pub const COLOR_BayerGRBG2BGR_VNG: i32 = 63;

pub const COLOR_BayerGRBG2GRAY: i32 = 87;

pub const COLOR_BayerGRBG2RGB: i32 = 49;

pub const COLOR_BayerGRBG2RGBA: i32 = 142;

pub const COLOR_BayerGRBG2RGB_EA: i32 = 138;

pub const COLOR_BayerGRBG2RGB_VNG: i32 = 65;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2BGR: i32 = 48;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2BGRA: i32 = 141;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2BGR_EA: i32 = 137;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2BGR_VNG: i32 = 64;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2GRAY: i32 = 88;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2RGB: i32 = 46;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2RGBA: i32 = 139;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2RGB_EA: i32 = 135;

/// equivalent to BGGR Bayer pattern

pub const COLOR_BayerRG2RGB_VNG: i32 = 62;

pub const COLOR_BayerRGGB2BGR: i32 = 46;

pub const COLOR_BayerRGGB2BGRA: i32 = 139;

pub const COLOR_BayerRGGB2BGR_EA: i32 = 135;

pub const COLOR_BayerRGGB2BGR_VNG: i32 = 62;

pub const COLOR_BayerRGGB2GRAY: i32 = 86;

pub const COLOR_BayerRGGB2RGB: i32 = 48;

pub const COLOR_BayerRGGB2RGBA: i32 = 141;

pub const COLOR_BayerRGGB2RGB_EA: i32 = 137;

pub const COLOR_BayerRGGB2RGB_VNG: i32 = 64;

pub const COLOR_COLORCVT_MAX: i32 = 143;

pub const COLOR_GRAY2BGR: i32 = 8;

/// convert between grayscale and BGR555 (16-bit images)

pub const COLOR_GRAY2BGR555: i32 = 30;

/// convert between grayscale to BGR565 (16-bit images)

pub const COLOR_GRAY2BGR565: i32 = 20;

pub const COLOR_GRAY2BGRA: i32 = 9;

pub const COLOR_GRAY2RGB: i32 = 8;

pub const COLOR_GRAY2RGBA: i32 = 9;

/// backward conversions HLS to RGB/BGR with H range 0..180 if 8 bit image

pub const COLOR_HLS2BGR: i32 = 60;

/// backward conversions HLS to RGB/BGR with H range 0..255 if 8 bit image

pub const COLOR_HLS2BGR_FULL: i32 = 72;

pub const COLOR_HLS2RGB: i32 = 61;

pub const COLOR_HLS2RGB_FULL: i32 = 73;

/// backward conversions HSV to RGB/BGR with H range 0..180 if 8 bit image

pub const COLOR_HSV2BGR: i32 = 54;

/// backward conversions HSV to RGB/BGR with H range 0..255 if 8 bit image

pub const COLOR_HSV2BGR_FULL: i32 = 70;

pub const COLOR_HSV2RGB: i32 = 55;

pub const COLOR_HSV2RGB_FULL: i32 = 71;

pub const COLOR_LBGR2Lab: i32 = 74;

pub const COLOR_LBGR2Luv: i32 = 76;

pub const COLOR_LRGB2Lab: i32 = 75;

pub const COLOR_LRGB2Luv: i32 = 77;

pub const COLOR_Lab2BGR: i32 = 56;

pub const COLOR_Lab2LBGR: i32 = 78;

pub const COLOR_Lab2LRGB: i32 = 79;

pub const COLOR_Lab2RGB: i32 = 57;

pub const COLOR_Luv2BGR: i32 = 58;

pub const COLOR_Luv2LBGR: i32 = 80;

pub const COLOR_Luv2LRGB: i32 = 81;

pub const COLOR_Luv2RGB: i32 = 59;

pub const COLOR_RGB2BGR: i32 = 4;

pub const COLOR_RGB2BGR555: i32 = 23;

pub const COLOR_RGB2BGR565: i32 = 13;

pub const COLOR_RGB2BGRA: i32 = 2;

pub const COLOR_RGB2GRAY: i32 = 7;

pub const COLOR_RGB2HLS: i32 = 53;

pub const COLOR_RGB2HLS_FULL: i32 = 69;

pub const COLOR_RGB2HSV: i32 = 41;

pub const COLOR_RGB2HSV_FULL: i32 = 67;

pub const COLOR_RGB2Lab: i32 = 45;

pub const COLOR_RGB2Luv: i32 = 51;

pub const COLOR_RGB2RGBA: i32 = 0;

pub const COLOR_RGB2XYZ: i32 = 33;

pub const COLOR_RGB2YCrCb: i32 = 37;

pub const COLOR_RGB2YUV: i32 = 83;

/// RGB to YUV 4:2:0 family

pub const COLOR_RGB2YUV_I420: i32 = 127;

/// RGB to YUV 4:2:0 family

pub const COLOR_RGB2YUV_IYUV: i32 = 127;

/// RGB to YUV 4:2:0 family

pub const COLOR_RGB2YUV_YV12: i32 = 131;

pub const COLOR_RGBA2BGR: i32 = 3;

pub const COLOR_RGBA2BGR555: i32 = 27;

pub const COLOR_RGBA2BGR565: i32 = 17;

pub const COLOR_RGBA2BGRA: i32 = 5;

pub const COLOR_RGBA2GRAY: i32 = 11;

pub const COLOR_RGBA2RGB: i32 = 1;

/// RGB to YUV 4:2:0 family

pub const COLOR_RGBA2YUV_I420: i32 = 129;

/// RGB to YUV 4:2:0 family

pub const COLOR_RGBA2YUV_IYUV: i32 = 129;

/// RGB to YUV 4:2:0 family

pub const COLOR_RGBA2YUV_YV12: i32 = 133;

/// alpha premultiplication

pub const COLOR_RGBA2mRGBA: i32 = 125;

pub const COLOR_XYZ2BGR: i32 = 34;

pub const COLOR_XYZ2RGB: i32 = 35;

pub const COLOR_YCrCb2BGR: i32 = 38;

pub const COLOR_YCrCb2RGB: i32 = 39;

pub const COLOR_YUV2BGR: i32 = 84;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGRA_I420: i32 = 105;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGRA_IYUV: i32 = 105;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGRA_NV12: i32 = 95;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGRA_NV21: i32 = 97;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_UYNV: i32 = 112;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_UYVY: i32 = 112;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_Y422: i32 = 112;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_YUNV: i32 = 120;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_YUY2: i32 = 120;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_YUYV: i32 = 120;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGRA_YV12: i32 = 103;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGRA_YVYU: i32 = 122;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGR_I420: i32 = 101;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGR_IYUV: i32 = 101;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGR_NV12: i32 = 91;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGR_NV21: i32 = 93;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_UYNV: i32 = 108;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_UYVY: i32 = 108;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_Y422: i32 = 108;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_YUNV: i32 = 116;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_YUY2: i32 = 116;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_YUYV: i32 = 116;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2BGR_YV12: i32 = 99;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2BGR_YVYU: i32 = 118;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2GRAY_420: i32 = 106;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2GRAY_I420: i32 = 106;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2GRAY_IYUV: i32 = 106;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2GRAY_NV12: i32 = 106;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2GRAY_NV21: i32 = 106;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_UYNV: i32 = 123;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_UYVY: i32 = 123;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_Y422: i32 = 123;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_YUNV: i32 = 124;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_YUY2: i32 = 124;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_YUYV: i32 = 124;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2GRAY_YV12: i32 = 106;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2GRAY_YVYU: i32 = 124;

pub const COLOR_YUV2RGB: i32 = 85;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGBA_I420: i32 = 104;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGBA_IYUV: i32 = 104;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGBA_NV12: i32 = 94;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGBA_NV21: i32 = 96;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_UYNV: i32 = 111;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_UYVY: i32 = 111;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_Y422: i32 = 111;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_YUNV: i32 = 119;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_YUY2: i32 = 119;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_YUYV: i32 = 119;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGBA_YV12: i32 = 102;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGBA_YVYU: i32 = 121;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGB_I420: i32 = 100;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGB_IYUV: i32 = 100;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGB_NV12: i32 = 90;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGB_NV21: i32 = 92;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_UYNV: i32 = 107;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_UYVY: i32 = 107;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_Y422: i32 = 107;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_YUNV: i32 = 115;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_YUY2: i32 = 115;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_YUYV: i32 = 115;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV2RGB_YV12: i32 = 98;

/// YUV 4:2:2 family to RGB

pub const COLOR_YUV2RGB_YVYU: i32 = 117;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420p2BGR: i32 = 99;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420p2BGRA: i32 = 103;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420p2GRAY: i32 = 106;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420p2RGB: i32 = 98;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420p2RGBA: i32 = 102;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420sp2BGR: i32 = 93;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420sp2BGRA: i32 = 97;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420sp2GRAY: i32 = 106;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420sp2RGB: i32 = 92;

/// YUV 4:2:0 family to RGB

pub const COLOR_YUV420sp2RGBA: i32 = 96;

/// alpha premultiplication

pub const COLOR_mRGBA2RGBA: i32 = 126;

///

pub const CONTOURS_MATCH_I1: i32 = 1;

///

pub const CONTOURS_MATCH_I2: i32 = 2;

///

pub const CONTOURS_MATCH_I3: i32 = 3;

/// distance = max(|x1-x2|,|y1-y2|)

pub const DIST_C: i32 = 3;

/// distance = c^2(|x|/c-log(1+|x|/c)), c = 1.3998

pub const DIST_FAIR: i32 = 5;

/// distance = |x|<c ? x^2/2 : c(|x|-c/2), c=1.345

pub const DIST_HUBER: i32 = 7;

/// distance = |x1-x2| + |y1-y2|

pub const DIST_L1: i32 = 1;

/// L1-L2 metric: distance = 2(sqrt(1+x*x/2) - 1))

pub const DIST_L12: i32 = 4;

/// the simple euclidean distance

pub const DIST_L2: i32 = 2;

/// each connected component of zeros in src (as well as all the non-zero pixels closest to the

/// connected component) will be assigned the same label

pub const DIST_LABEL_CCOMP: i32 = 0;

/// each zero pixel (and all the non-zero pixels closest to it) gets its own label.

pub const DIST_LABEL_PIXEL: i32 = 1;

/// mask=3

pub const DIST_MASK_3: i32 = 3;

/// mask=5

pub const DIST_MASK_5: i32 = 5;

pub const DIST_MASK_PRECISE: i32 = 0;

/// User defined distance

pub const DIST_USER: i32 = -1;

/// distance = c^2/2(1-exp(-(x/c)^2)), c = 2.9846

pub const DIST_WELSCH: i32 = 6;

pub const FILLED: i32 = -1;

pub const FILTER_SCHARR: i32 = -1;

/// If set, the difference between the current pixel and seed pixel is considered. Otherwise,

/// the difference between neighbor pixels is considered (that is, the range is floating).

pub const FLOODFILL_FIXED_RANGE: i32 = 65536;

/// If set, the function does not change the image ( newVal is ignored), and only fills the

/// mask with the value specified in bits 8-16 of flags as described above. This option only make

/// sense in function variants that have the mask parameter.

pub const FLOODFILL_MASK_ONLY: i32 = 131072;

/// normal size serif font

pub const FONT_HERSHEY_COMPLEX: i32 = 3;

/// smaller version of FONT_HERSHEY_COMPLEX

pub const FONT_HERSHEY_COMPLEX_SMALL: i32 = 5;

/// normal size sans-serif font (more complex than FONT_HERSHEY_SIMPLEX)

pub const FONT_HERSHEY_DUPLEX: i32 = 2;

/// small size sans-serif font

pub const FONT_HERSHEY_PLAIN: i32 = 1;

/// more complex variant of FONT_HERSHEY_SCRIPT_SIMPLEX

pub const FONT_HERSHEY_SCRIPT_COMPLEX: i32 = 7;

/// hand-writing style font

pub const FONT_HERSHEY_SCRIPT_SIMPLEX: i32 = 6;

/// normal size sans-serif font

pub const FONT_HERSHEY_SIMPLEX: i32 = 0;

/// normal size serif font (more complex than FONT_HERSHEY_COMPLEX)

pub const FONT_HERSHEY_TRIPLEX: i32 = 4;

/// flag for italic font

pub const FONT_ITALIC: i32 = 16;

/// an obvious background pixels

pub const GC_BGD: i32 = 0;

/// The value means that the algorithm should just resume.

pub const GC_EVAL: i32 = 2;

/// The value means that the algorithm should just run the grabCut algorithm (a single iteration) with the fixed model

pub const GC_EVAL_FREEZE_MODEL: i32 = 3;

/// an obvious foreground (object) pixel

pub const GC_FGD: i32 = 1;

/// The function initializes the state using the provided mask. Note that GC_INIT_WITH_RECT

/// and GC_INIT_WITH_MASK can be combined. Then, all the pixels outside of the ROI are

/// automatically initialized with GC_BGD .

pub const GC_INIT_WITH_MASK: i32 = 1;

/// The function initializes the state and the mask using the provided rectangle. After that it

/// runs iterCount iterations of the algorithm.

pub const GC_INIT_WITH_RECT: i32 = 0;

/// a possible background pixel

pub const GC_PR_BGD: i32 = 2;

/// a possible foreground pixel

pub const GC_PR_FGD: i32 = 3;

/// Bhattacharyya distance

/// (In fact, OpenCV computes Hellinger distance, which is related to Bhattacharyya coefficient.)

///

pub const HISTCMP_BHATTACHARYYA: i32 = 3;

/// Chi-Square

///

pub const HISTCMP_CHISQR: i32 = 1;

/// Alternative Chi-Square

///

/// This alternative formula is regularly used for texture comparison. See e.g. [Puzicha1997](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Puzicha1997)

pub const HISTCMP_CHISQR_ALT: i32 = 4;

/// Correlation

///

/// where

///

/// and  is a total number of histogram bins.

pub const HISTCMP_CORREL: i32 = 0;

/// Synonym for HISTCMP_BHATTACHARYYA

pub const HISTCMP_HELLINGER: i32 = 3;

/// Intersection

///

pub const HISTCMP_INTERSECT: i32 = 2;

/// Kullback-Leibler divergence

///

pub const HISTCMP_KL_DIV: i32 = 5;

/// basically *21HT*, described in [Yuen90](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Yuen90)

pub const HOUGH_GRADIENT: i32 = 3;

/// variation of HOUGH_GRADIENT to get better accuracy

pub const HOUGH_GRADIENT_ALT: i32 = 4;

/// multi-scale variant of the classical Hough transform. The lines are encoded the same way as

/// HOUGH_STANDARD.

pub const HOUGH_MULTI_SCALE: i32 = 2;

/// probabilistic Hough transform (more efficient in case if the picture contains a few long

/// linear segments). It returns line segments rather than the whole line. Each segment is

/// represented by starting and ending points, and the matrix must be (the created sequence will

/// be) of the CV_32SC4 type.

pub const HOUGH_PROBABILISTIC: i32 = 1;

/// classical or standard Hough transform. Every line is represented by two floating-point

/// numbers  , where  is a distance between (0,0) point and the line,

/// and  is the angle between x-axis and the normal to the line. Thus, the matrix must

/// be (the created sequence will be) of CV_32FC2 type

pub const HOUGH_STANDARD: i32 = 0;

/// One of the rectangle is fully enclosed in the other

pub const INTERSECT_FULL: i32 = 2;

/// No intersection

pub const INTERSECT_NONE: i32 = 0;

/// There is a partial intersection

pub const INTERSECT_PARTIAL: i32 = 1;

/// resampling using pixel area relation. It may be a preferred method for image decimation, as

/// it gives moire'-free results. But when the image is zoomed, it is similar to the INTER_NEAREST

/// method.

pub const INTER_AREA: i32 = 3;

pub const INTER_BITS: i32 = 5;

pub const INTER_BITS2: i32 = 10;

/// bicubic interpolation

pub const INTER_CUBIC: i32 = 2;

/// Lanczos interpolation over 8x8 neighborhood

pub const INTER_LANCZOS4: i32 = 4;

/// bilinear interpolation

pub const INTER_LINEAR: i32 = 1;

/// Bit exact bilinear interpolation

pub const INTER_LINEAR_EXACT: i32 = 5;

/// mask for interpolation codes

pub const INTER_MAX: i32 = 7;

/// nearest neighbor interpolation

pub const INTER_NEAREST: i32 = 0;

/// Bit exact nearest neighbor interpolation. This will produce same results as

/// the nearest neighbor method in PIL, scikit-image or Matlab.

pub const INTER_NEAREST_EXACT: i32 = 6;

pub const INTER_TAB_SIZE: i32 = 32;

pub const INTER_TAB_SIZE2: i32 = 1024;

/// 4-connected line

pub const LINE_4: i32 = 4;

/// 8-connected line

pub const LINE_8: i32 = 8;

/// antialiased line

pub const LINE_AA: i32 = 16;

/// Advanced refinement. Number of false alarms is calculated, lines are

/// refined through increase of precision, decrement in size, etc.

pub const LSD_REFINE_ADV: i32 = 2;

/// No refinement applied

pub const LSD_REFINE_NONE: i32 = 0;

/// Standard refinement is applied. E.g. breaking arches into smaller straighter line approximations.

pub const LSD_REFINE_STD: i32 = 1;

/// A crosshair marker shape

pub const MARKER_CROSS: i32 = 0;

/// A diamond marker shape

pub const MARKER_DIAMOND: i32 = 3;

/// A square marker shape

pub const MARKER_SQUARE: i32 = 4;

/// A star marker shape, combination of cross and tilted cross

pub const MARKER_STAR: i32 = 2;

/// A 45 degree tilted crosshair marker shape

pub const MARKER_TILTED_CROSS: i32 = 1;

/// A downwards pointing triangle marker shape

pub const MARKER_TRIANGLE_DOWN: i32 = 6;

/// An upwards pointing triangle marker shape

pub const MARKER_TRIANGLE_UP: i32 = 5;

/// "black hat"

///

pub const MORPH_BLACKHAT: i32 = 6;

/// a closing operation

///

pub const MORPH_CLOSE: i32 = 3;

/// a cross-shaped structuring element:

///

pub const MORPH_CROSS: i32 = 1;

/// see #dilate

pub const MORPH_DILATE: i32 = 1;

/// an elliptic structuring element, that is, a filled ellipse inscribed

/// into the rectangle Rect(0, 0, esize.width, 0.esize.height)

pub const MORPH_ELLIPSE: i32 = 2;

/// see #erode

pub const MORPH_ERODE: i32 = 0;

/// a morphological gradient

///

pub const MORPH_GRADIENT: i32 = 4;

/// "hit or miss"

/// .- Only supported for CV_8UC1 binary images. A tutorial can be found in the documentation

pub const MORPH_HITMISS: i32 = 7;

/// an opening operation

///

pub const MORPH_OPEN: i32 = 2;

/// a rectangular structuring element:

pub const MORPH_RECT: i32 = 0;

/// "top hat"

///

pub const MORPH_TOPHAT: i32 = 5;

/// retrieves all of the contours and organizes them into a two-level hierarchy. At the top

/// level, there are external boundaries of the components. At the second level, there are

/// boundaries of the holes. If there is another contour inside a hole of a connected component, it

/// is still put at the top level.

pub const RETR_CCOMP: i32 = 2;

/// retrieves only the extreme outer contours. It sets `hierarchy[i][2]=hierarchy[i][3]=-1` for

/// all the contours.

pub const RETR_EXTERNAL: i32 = 0;

pub const RETR_FLOODFILL: i32 = 4;

/// retrieves all of the contours without establishing any hierarchical relationships.

pub const RETR_LIST: i32 = 1;

/// retrieves all of the contours and reconstructs a full hierarchy of nested contours.

pub const RETR_TREE: i32 = 3;

pub const Subdiv2D_NEXT_AROUND_DST: i32 = 34;

pub const Subdiv2D_NEXT_AROUND_LEFT: i32 = 19;

pub const Subdiv2D_NEXT_AROUND_ORG: i32 = 0;

pub const Subdiv2D_NEXT_AROUND_RIGHT: i32 = 49;

pub const Subdiv2D_PREV_AROUND_DST: i32 = 51;

pub const Subdiv2D_PREV_AROUND_LEFT: i32 = 32;

pub const Subdiv2D_PREV_AROUND_ORG: i32 = 17;

pub const Subdiv2D_PREV_AROUND_RIGHT: i32 = 2;

/// Point location error

pub const Subdiv2D_PTLOC_ERROR: i32 = -2;

/// Point inside some facet

pub const Subdiv2D_PTLOC_INSIDE: i32 = 0;

/// Point on some edge

pub const Subdiv2D_PTLOC_ON_EDGE: i32 = 2;

/// Point outside the subdivision bounding rect

pub const Subdiv2D_PTLOC_OUTSIDE_RECT: i32 = -1;

/// Point coincides with one of the subdivision vertices

pub const Subdiv2D_PTLOC_VERTEX: i32 = 1;

///

pub const THRESH_BINARY: i32 = 0;

///

pub const THRESH_BINARY_INV: i32 = 1;

pub const THRESH_MASK: i32 = 7;

/// flag, use Otsu algorithm to choose the optimal threshold value

pub const THRESH_OTSU: i32 = 8;

///

pub const THRESH_TOZERO: i32 = 3;

///

pub const THRESH_TOZERO_INV: i32 = 4;

/// flag, use Triangle algorithm to choose the optimal threshold value

pub const THRESH_TRIANGLE: i32 = 16;

///

pub const THRESH_TRUNC: i32 = 2;

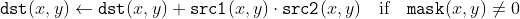

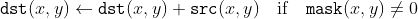

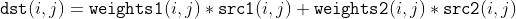

/// !<

/// where

///

/// with mask:

///

pub const TM_CCOEFF: i32 = 4;

/// !<

pub const TM_CCOEFF_NORMED: i32 = 5;

/// !<

/// with mask:

///

pub const TM_CCORR: i32 = 2;

/// !<

/// with mask:

///

pub const TM_CCORR_NORMED: i32 = 3;

/// !<

/// with mask:

///

pub const TM_SQDIFF: i32 = 0;

/// !<

/// with mask:

///

pub const TM_SQDIFF_NORMED: i32 = 1;

/// flag, fills all of the destination image pixels. If some of them correspond to outliers in the

/// source image, they are set to zero

pub const WARP_FILL_OUTLIERS: i32 = 8;

/// flag, inverse transformation

///

/// For example, #linearPolar or #logPolar transforms:

/// - flag is __not__ set:

/// - flag is set:

pub const WARP_INVERSE_MAP: i32 = 16;

/// Remaps an image to/from polar space.

pub const WARP_POLAR_LINEAR: i32 = 0;

/// Remaps an image to/from semilog-polar space.

pub const WARP_POLAR_LOG: i32 = 256;

/// adaptive threshold algorithm

/// ## See also

/// adaptiveThreshold

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum AdaptiveThresholdTypes {

/// the threshold value  is a mean of the  neighborhood of  minus C

ADAPTIVE_THRESH_MEAN_C = 0,

/// the threshold value  is a weighted sum (cross-correlation with a Gaussian

/// window) of the  neighborhood of

/// minus C . The default sigma (standard deviation) is used for the specified blockSize . See

/// #getGaussianKernel

ADAPTIVE_THRESH_GAUSSIAN_C = 1,

}

opencv_type_enum! { crate::imgproc::AdaptiveThresholdTypes }

/// the color conversion codes

/// ## See also

/// [imgproc_color_conversions]

/// @ingroup imgproc_color_conversions

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ColorConversionCodes {

/// add alpha channel to RGB or BGR image

COLOR_BGR2BGRA = 0,

// Duplicate, use COLOR_BGR2BGRA instead

// COLOR_RGB2RGBA = 0,

/// remove alpha channel from RGB or BGR image

COLOR_BGRA2BGR = 1,

// Duplicate, use COLOR_BGRA2BGR instead

// COLOR_RGBA2RGB = 1,

/// convert between RGB and BGR color spaces (with or without alpha channel)

COLOR_BGR2RGBA = 2,

// Duplicate, use COLOR_BGR2RGBA instead

// COLOR_RGB2BGRA = 2,

COLOR_RGBA2BGR = 3,

// Duplicate, use COLOR_RGBA2BGR instead

// COLOR_BGRA2RGB = 3,

COLOR_BGR2RGB = 4,

// Duplicate, use COLOR_BGR2RGB instead

// COLOR_RGB2BGR = 4,

COLOR_BGRA2RGBA = 5,

// Duplicate, use COLOR_BGRA2RGBA instead

// COLOR_RGBA2BGRA = 5,

/// convert between RGB/BGR and grayscale, [color_convert_rgb_gray] "color conversions"

COLOR_BGR2GRAY = 6,

COLOR_RGB2GRAY = 7,

COLOR_GRAY2BGR = 8,

// Duplicate, use COLOR_GRAY2BGR instead

// COLOR_GRAY2RGB = 8,

COLOR_GRAY2BGRA = 9,

// Duplicate, use COLOR_GRAY2BGRA instead

// COLOR_GRAY2RGBA = 9,

COLOR_BGRA2GRAY = 10,

COLOR_RGBA2GRAY = 11,

/// convert between RGB/BGR and BGR565 (16-bit images)

COLOR_BGR2BGR565 = 12,

COLOR_RGB2BGR565 = 13,

COLOR_BGR5652BGR = 14,

COLOR_BGR5652RGB = 15,

COLOR_BGRA2BGR565 = 16,

COLOR_RGBA2BGR565 = 17,

COLOR_BGR5652BGRA = 18,

COLOR_BGR5652RGBA = 19,

/// convert between grayscale to BGR565 (16-bit images)

COLOR_GRAY2BGR565 = 20,

COLOR_BGR5652GRAY = 21,

/// convert between RGB/BGR and BGR555 (16-bit images)

COLOR_BGR2BGR555 = 22,

COLOR_RGB2BGR555 = 23,

COLOR_BGR5552BGR = 24,

COLOR_BGR5552RGB = 25,

COLOR_BGRA2BGR555 = 26,

COLOR_RGBA2BGR555 = 27,

COLOR_BGR5552BGRA = 28,

COLOR_BGR5552RGBA = 29,

/// convert between grayscale and BGR555 (16-bit images)

COLOR_GRAY2BGR555 = 30,

COLOR_BGR5552GRAY = 31,

/// convert RGB/BGR to CIE XYZ, [color_convert_rgb_xyz] "color conversions"

COLOR_BGR2XYZ = 32,

COLOR_RGB2XYZ = 33,

COLOR_XYZ2BGR = 34,

COLOR_XYZ2RGB = 35,

/// convert RGB/BGR to luma-chroma (aka YCC), [color_convert_rgb_ycrcb] "color conversions"

COLOR_BGR2YCrCb = 36,

COLOR_RGB2YCrCb = 37,

COLOR_YCrCb2BGR = 38,

COLOR_YCrCb2RGB = 39,

/// convert RGB/BGR to HSV (hue saturation value) with H range 0..180 if 8 bit image, [color_convert_rgb_hsv] "color conversions"

COLOR_BGR2HSV = 40,

COLOR_RGB2HSV = 41,

/// convert RGB/BGR to CIE Lab, [color_convert_rgb_lab] "color conversions"

COLOR_BGR2Lab = 44,

COLOR_RGB2Lab = 45,

/// convert RGB/BGR to CIE Luv, [color_convert_rgb_luv] "color conversions"

COLOR_BGR2Luv = 50,

COLOR_RGB2Luv = 51,

/// convert RGB/BGR to HLS (hue lightness saturation) with H range 0..180 if 8 bit image, [color_convert_rgb_hls] "color conversions"

COLOR_BGR2HLS = 52,

COLOR_RGB2HLS = 53,

/// backward conversions HSV to RGB/BGR with H range 0..180 if 8 bit image

COLOR_HSV2BGR = 54,

COLOR_HSV2RGB = 55,

COLOR_Lab2BGR = 56,

COLOR_Lab2RGB = 57,

COLOR_Luv2BGR = 58,

COLOR_Luv2RGB = 59,

/// backward conversions HLS to RGB/BGR with H range 0..180 if 8 bit image

COLOR_HLS2BGR = 60,

COLOR_HLS2RGB = 61,

/// convert RGB/BGR to HSV (hue saturation value) with H range 0..255 if 8 bit image, [color_convert_rgb_hsv] "color conversions"

COLOR_BGR2HSV_FULL = 66,

COLOR_RGB2HSV_FULL = 67,

/// convert RGB/BGR to HLS (hue lightness saturation) with H range 0..255 if 8 bit image, [color_convert_rgb_hls] "color conversions"

COLOR_BGR2HLS_FULL = 68,

COLOR_RGB2HLS_FULL = 69,

/// backward conversions HSV to RGB/BGR with H range 0..255 if 8 bit image

COLOR_HSV2BGR_FULL = 70,

COLOR_HSV2RGB_FULL = 71,

/// backward conversions HLS to RGB/BGR with H range 0..255 if 8 bit image

COLOR_HLS2BGR_FULL = 72,

COLOR_HLS2RGB_FULL = 73,

COLOR_LBGR2Lab = 74,

COLOR_LRGB2Lab = 75,

COLOR_LBGR2Luv = 76,

COLOR_LRGB2Luv = 77,

COLOR_Lab2LBGR = 78,

COLOR_Lab2LRGB = 79,

COLOR_Luv2LBGR = 80,

COLOR_Luv2LRGB = 81,

/// convert between RGB/BGR and YUV

COLOR_BGR2YUV = 82,

COLOR_RGB2YUV = 83,

COLOR_YUV2BGR = 84,

COLOR_YUV2RGB = 85,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGB_NV12 = 90,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGR_NV12 = 91,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGB_NV21 = 92,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGR_NV21 = 93,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2RGB_NV21 instead

// COLOR_YUV420sp2RGB = 92,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2BGR_NV21 instead

// COLOR_YUV420sp2BGR = 93,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGBA_NV12 = 94,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGRA_NV12 = 95,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGBA_NV21 = 96,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGRA_NV21 = 97,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2RGBA_NV21 instead

// COLOR_YUV420sp2RGBA = 96,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2BGRA_NV21 instead

// COLOR_YUV420sp2BGRA = 97,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGB_YV12 = 98,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGR_YV12 = 99,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGB_IYUV = 100,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGR_IYUV = 101,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2RGB_IYUV instead

// COLOR_YUV2RGB_I420 = 100,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2BGR_IYUV instead

// COLOR_YUV2BGR_I420 = 101,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2RGB_YV12 instead

// COLOR_YUV420p2RGB = 98,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2BGR_YV12 instead

// COLOR_YUV420p2BGR = 99,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGBA_YV12 = 102,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGRA_YV12 = 103,

/// YUV 4:2:0 family to RGB

COLOR_YUV2RGBA_IYUV = 104,

/// YUV 4:2:0 family to RGB

COLOR_YUV2BGRA_IYUV = 105,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2RGBA_IYUV instead

// COLOR_YUV2RGBA_I420 = 104,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2BGRA_IYUV instead

// COLOR_YUV2BGRA_I420 = 105,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2RGBA_YV12 instead

// COLOR_YUV420p2RGBA = 102,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2BGRA_YV12 instead

// COLOR_YUV420p2BGRA = 103,

/// YUV 4:2:0 family to RGB

COLOR_YUV2GRAY_420 = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2GRAY_420 instead

// COLOR_YUV2GRAY_NV21 = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2GRAY_NV21 instead

// COLOR_YUV2GRAY_NV12 = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2GRAY_NV12 instead

// COLOR_YUV2GRAY_YV12 = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2GRAY_YV12 instead

// COLOR_YUV2GRAY_IYUV = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2GRAY_IYUV instead

// COLOR_YUV2GRAY_I420 = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV2GRAY_I420 instead

// COLOR_YUV420sp2GRAY = 106,

// YUV 4:2:0 family to RGB

// Duplicate, use COLOR_YUV420sp2GRAY instead

// COLOR_YUV420p2GRAY = 106,

/// YUV 4:2:2 family to RGB

COLOR_YUV2RGB_UYVY = 107,

/// YUV 4:2:2 family to RGB

COLOR_YUV2BGR_UYVY = 108,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGB_UYVY instead

// COLOR_YUV2RGB_Y422 = 107,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGR_UYVY instead

// COLOR_YUV2BGR_Y422 = 108,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGB_Y422 instead

// COLOR_YUV2RGB_UYNV = 107,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGR_Y422 instead

// COLOR_YUV2BGR_UYNV = 108,

/// YUV 4:2:2 family to RGB

COLOR_YUV2RGBA_UYVY = 111,

/// YUV 4:2:2 family to RGB

COLOR_YUV2BGRA_UYVY = 112,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGBA_UYVY instead

// COLOR_YUV2RGBA_Y422 = 111,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGRA_UYVY instead

// COLOR_YUV2BGRA_Y422 = 112,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGBA_Y422 instead

// COLOR_YUV2RGBA_UYNV = 111,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGRA_Y422 instead

// COLOR_YUV2BGRA_UYNV = 112,

/// YUV 4:2:2 family to RGB

COLOR_YUV2RGB_YUY2 = 115,

/// YUV 4:2:2 family to RGB

COLOR_YUV2BGR_YUY2 = 116,

/// YUV 4:2:2 family to RGB

COLOR_YUV2RGB_YVYU = 117,

/// YUV 4:2:2 family to RGB

COLOR_YUV2BGR_YVYU = 118,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGB_YUY2 instead

// COLOR_YUV2RGB_YUYV = 115,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGR_YUY2 instead

// COLOR_YUV2BGR_YUYV = 116,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGB_YUYV instead

// COLOR_YUV2RGB_YUNV = 115,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGR_YUYV instead

// COLOR_YUV2BGR_YUNV = 116,

/// YUV 4:2:2 family to RGB

COLOR_YUV2RGBA_YUY2 = 119,

/// YUV 4:2:2 family to RGB

COLOR_YUV2BGRA_YUY2 = 120,

/// YUV 4:2:2 family to RGB

COLOR_YUV2RGBA_YVYU = 121,

/// YUV 4:2:2 family to RGB

COLOR_YUV2BGRA_YVYU = 122,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGBA_YUY2 instead

// COLOR_YUV2RGBA_YUYV = 119,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGRA_YUY2 instead

// COLOR_YUV2BGRA_YUYV = 120,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2RGBA_YUYV instead

// COLOR_YUV2RGBA_YUNV = 119,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2BGRA_YUYV instead

// COLOR_YUV2BGRA_YUNV = 120,

/// YUV 4:2:2 family to RGB

COLOR_YUV2GRAY_UYVY = 123,

/// YUV 4:2:2 family to RGB

COLOR_YUV2GRAY_YUY2 = 124,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2GRAY_UYVY instead

// COLOR_YUV2GRAY_Y422 = 123,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2GRAY_Y422 instead

// COLOR_YUV2GRAY_UYNV = 123,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2GRAY_YUY2 instead

// COLOR_YUV2GRAY_YVYU = 124,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2GRAY_YVYU instead

// COLOR_YUV2GRAY_YUYV = 124,

// YUV 4:2:2 family to RGB

// Duplicate, use COLOR_YUV2GRAY_YUYV instead

// COLOR_YUV2GRAY_YUNV = 124,

/// alpha premultiplication

COLOR_RGBA2mRGBA = 125,

/// alpha premultiplication

COLOR_mRGBA2RGBA = 126,

/// RGB to YUV 4:2:0 family

COLOR_RGB2YUV_I420 = 127,

/// RGB to YUV 4:2:0 family

COLOR_BGR2YUV_I420 = 128,

// RGB to YUV 4:2:0 family

// Duplicate, use COLOR_RGB2YUV_I420 instead

// COLOR_RGB2YUV_IYUV = 127,

// RGB to YUV 4:2:0 family

// Duplicate, use COLOR_BGR2YUV_I420 instead

// COLOR_BGR2YUV_IYUV = 128,

/// RGB to YUV 4:2:0 family

COLOR_RGBA2YUV_I420 = 129,

/// RGB to YUV 4:2:0 family

COLOR_BGRA2YUV_I420 = 130,

// RGB to YUV 4:2:0 family

// Duplicate, use COLOR_RGBA2YUV_I420 instead

// COLOR_RGBA2YUV_IYUV = 129,

// RGB to YUV 4:2:0 family

// Duplicate, use COLOR_BGRA2YUV_I420 instead

// COLOR_BGRA2YUV_IYUV = 130,

/// RGB to YUV 4:2:0 family

COLOR_RGB2YUV_YV12 = 131,

/// RGB to YUV 4:2:0 family

COLOR_BGR2YUV_YV12 = 132,

/// RGB to YUV 4:2:0 family

COLOR_RGBA2YUV_YV12 = 133,

/// RGB to YUV 4:2:0 family

COLOR_BGRA2YUV_YV12 = 134,

/// equivalent to RGGB Bayer pattern

COLOR_BayerBG2BGR = 46,

/// equivalent to GRBG Bayer pattern

COLOR_BayerGB2BGR = 47,

/// equivalent to BGGR Bayer pattern

COLOR_BayerRG2BGR = 48,

/// equivalent to GBRG Bayer pattern

COLOR_BayerGR2BGR = 49,

// Duplicate, use COLOR_BayerBG2BGR instead

// COLOR_BayerRGGB2BGR = 46,

// Duplicate, use COLOR_BayerGB2BGR instead

// COLOR_BayerGRBG2BGR = 47,

// Duplicate, use COLOR_BayerRG2BGR instead

// COLOR_BayerBGGR2BGR = 48,

// Duplicate, use COLOR_BayerGR2BGR instead

// COLOR_BayerGBRG2BGR = 49,

// Duplicate, use COLOR_BayerBGGR2BGR instead

// COLOR_BayerRGGB2RGB = 48,

// Duplicate, use COLOR_BayerGBRG2BGR instead

// COLOR_BayerGRBG2RGB = 49,

// Duplicate, use COLOR_BayerRGGB2BGR instead

// COLOR_BayerBGGR2RGB = 46,

// Duplicate, use COLOR_BayerGRBG2BGR instead

// COLOR_BayerGBRG2RGB = 47,

// equivalent to RGGB Bayer pattern

// Duplicate, use COLOR_BayerRGGB2RGB instead

// COLOR_BayerBG2RGB = 48,

// equivalent to GRBG Bayer pattern

// Duplicate, use COLOR_BayerGRBG2RGB instead

// COLOR_BayerGB2RGB = 49,

// equivalent to BGGR Bayer pattern

// Duplicate, use COLOR_BayerBGGR2RGB instead

// COLOR_BayerRG2RGB = 46,

// equivalent to GBRG Bayer pattern

// Duplicate, use COLOR_BayerGBRG2RGB instead

// COLOR_BayerGR2RGB = 47,

/// equivalent to RGGB Bayer pattern

COLOR_BayerBG2GRAY = 86,

/// equivalent to GRBG Bayer pattern

COLOR_BayerGB2GRAY = 87,

/// equivalent to BGGR Bayer pattern

COLOR_BayerRG2GRAY = 88,

/// equivalent to GBRG Bayer pattern

COLOR_BayerGR2GRAY = 89,

// Duplicate, use COLOR_BayerBG2GRAY instead

// COLOR_BayerRGGB2GRAY = 86,

// Duplicate, use COLOR_BayerGB2GRAY instead

// COLOR_BayerGRBG2GRAY = 87,

// Duplicate, use COLOR_BayerRG2GRAY instead

// COLOR_BayerBGGR2GRAY = 88,

// Duplicate, use COLOR_BayerGR2GRAY instead

// COLOR_BayerGBRG2GRAY = 89,

/// equivalent to RGGB Bayer pattern

COLOR_BayerBG2BGR_VNG = 62,

/// equivalent to GRBG Bayer pattern

COLOR_BayerGB2BGR_VNG = 63,

/// equivalent to BGGR Bayer pattern

COLOR_BayerRG2BGR_VNG = 64,

/// equivalent to GBRG Bayer pattern

COLOR_BayerGR2BGR_VNG = 65,

// Duplicate, use COLOR_BayerBG2BGR_VNG instead

// COLOR_BayerRGGB2BGR_VNG = 62,

// Duplicate, use COLOR_BayerGB2BGR_VNG instead

// COLOR_BayerGRBG2BGR_VNG = 63,

// Duplicate, use COLOR_BayerRG2BGR_VNG instead

// COLOR_BayerBGGR2BGR_VNG = 64,

// Duplicate, use COLOR_BayerGR2BGR_VNG instead

// COLOR_BayerGBRG2BGR_VNG = 65,

// Duplicate, use COLOR_BayerBGGR2BGR_VNG instead

// COLOR_BayerRGGB2RGB_VNG = 64,

// Duplicate, use COLOR_BayerGBRG2BGR_VNG instead

// COLOR_BayerGRBG2RGB_VNG = 65,

// Duplicate, use COLOR_BayerRGGB2BGR_VNG instead

// COLOR_BayerBGGR2RGB_VNG = 62,

// Duplicate, use COLOR_BayerGRBG2BGR_VNG instead

// COLOR_BayerGBRG2RGB_VNG = 63,

// equivalent to RGGB Bayer pattern

// Duplicate, use COLOR_BayerRGGB2RGB_VNG instead

// COLOR_BayerBG2RGB_VNG = 64,

// equivalent to GRBG Bayer pattern

// Duplicate, use COLOR_BayerGRBG2RGB_VNG instead

// COLOR_BayerGB2RGB_VNG = 65,

// equivalent to BGGR Bayer pattern

// Duplicate, use COLOR_BayerBGGR2RGB_VNG instead

// COLOR_BayerRG2RGB_VNG = 62,

// equivalent to GBRG Bayer pattern

// Duplicate, use COLOR_BayerGBRG2RGB_VNG instead

// COLOR_BayerGR2RGB_VNG = 63,

/// equivalent to RGGB Bayer pattern

COLOR_BayerBG2BGR_EA = 135,

/// equivalent to GRBG Bayer pattern

COLOR_BayerGB2BGR_EA = 136,

/// equivalent to BGGR Bayer pattern

COLOR_BayerRG2BGR_EA = 137,

/// equivalent to GBRG Bayer pattern

COLOR_BayerGR2BGR_EA = 138,

// Duplicate, use COLOR_BayerBG2BGR_EA instead

// COLOR_BayerRGGB2BGR_EA = 135,

// Duplicate, use COLOR_BayerGB2BGR_EA instead

// COLOR_BayerGRBG2BGR_EA = 136,

// Duplicate, use COLOR_BayerRG2BGR_EA instead

// COLOR_BayerBGGR2BGR_EA = 137,

// Duplicate, use COLOR_BayerGR2BGR_EA instead

// COLOR_BayerGBRG2BGR_EA = 138,

// Duplicate, use COLOR_BayerBGGR2BGR_EA instead

// COLOR_BayerRGGB2RGB_EA = 137,

// Duplicate, use COLOR_BayerGBRG2BGR_EA instead

// COLOR_BayerGRBG2RGB_EA = 138,

// Duplicate, use COLOR_BayerRGGB2BGR_EA instead

// COLOR_BayerBGGR2RGB_EA = 135,

// Duplicate, use COLOR_BayerGRBG2BGR_EA instead

// COLOR_BayerGBRG2RGB_EA = 136,

// equivalent to RGGB Bayer pattern

// Duplicate, use COLOR_BayerRGGB2RGB_EA instead

// COLOR_BayerBG2RGB_EA = 137,

// equivalent to GRBG Bayer pattern

// Duplicate, use COLOR_BayerGRBG2RGB_EA instead

// COLOR_BayerGB2RGB_EA = 138,

// equivalent to BGGR Bayer pattern

// Duplicate, use COLOR_BayerBGGR2RGB_EA instead

// COLOR_BayerRG2RGB_EA = 135,

// equivalent to GBRG Bayer pattern

// Duplicate, use COLOR_BayerGBRG2RGB_EA instead

// COLOR_BayerGR2RGB_EA = 136,

/// equivalent to RGGB Bayer pattern

COLOR_BayerBG2BGRA = 139,

/// equivalent to GRBG Bayer pattern

COLOR_BayerGB2BGRA = 140,

/// equivalent to BGGR Bayer pattern

COLOR_BayerRG2BGRA = 141,

/// equivalent to GBRG Bayer pattern

COLOR_BayerGR2BGRA = 142,

// Duplicate, use COLOR_BayerBG2BGRA instead

// COLOR_BayerRGGB2BGRA = 139,

// Duplicate, use COLOR_BayerGB2BGRA instead

// COLOR_BayerGRBG2BGRA = 140,

// Duplicate, use COLOR_BayerRG2BGRA instead

// COLOR_BayerBGGR2BGRA = 141,

// Duplicate, use COLOR_BayerGR2BGRA instead

// COLOR_BayerGBRG2BGRA = 142,

// Duplicate, use COLOR_BayerBGGR2BGRA instead

// COLOR_BayerRGGB2RGBA = 141,

// Duplicate, use COLOR_BayerGBRG2BGRA instead

// COLOR_BayerGRBG2RGBA = 142,

// Duplicate, use COLOR_BayerRGGB2BGRA instead

// COLOR_BayerBGGR2RGBA = 139,

// Duplicate, use COLOR_BayerGRBG2BGRA instead

// COLOR_BayerGBRG2RGBA = 140,

// equivalent to RGGB Bayer pattern

// Duplicate, use COLOR_BayerRGGB2RGBA instead

// COLOR_BayerBG2RGBA = 141,

// equivalent to GRBG Bayer pattern

// Duplicate, use COLOR_BayerGRBG2RGBA instead

// COLOR_BayerGB2RGBA = 142,

// equivalent to BGGR Bayer pattern

// Duplicate, use COLOR_BayerBGGR2RGBA instead

// COLOR_BayerRG2RGBA = 139,

// equivalent to GBRG Bayer pattern

// Duplicate, use COLOR_BayerGBRG2RGBA instead

// COLOR_BayerGR2RGBA = 140,

COLOR_COLORCVT_MAX = 143,

}

opencv_type_enum! { crate::imgproc::ColorConversionCodes }

/// GNU Octave/MATLAB equivalent colormaps

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ColormapTypes {

///

COLORMAP_AUTUMN = 0,

///

COLORMAP_BONE = 1,

///

COLORMAP_JET = 2,

///

COLORMAP_WINTER = 3,

///

COLORMAP_RAINBOW = 4,

///

COLORMAP_OCEAN = 5,

///

COLORMAP_SUMMER = 6,

///

COLORMAP_SPRING = 7,

///

COLORMAP_COOL = 8,

///

COLORMAP_HSV = 9,

///

COLORMAP_PINK = 10,

///

COLORMAP_HOT = 11,

///

COLORMAP_PARULA = 12,

///

COLORMAP_MAGMA = 13,

///

COLORMAP_INFERNO = 14,

///

COLORMAP_PLASMA = 15,

///

COLORMAP_VIRIDIS = 16,

///

COLORMAP_CIVIDIS = 17,

///

COLORMAP_TWILIGHT = 18,

///

COLORMAP_TWILIGHT_SHIFTED = 19,

///

COLORMAP_TURBO = 20,

///

COLORMAP_DEEPGREEN = 21,

}

opencv_type_enum! { crate::imgproc::ColormapTypes }

/// connected components algorithm

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ConnectedComponentsAlgorithmsTypes {

/// Spaghetti [Bolelli2019](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2019) algorithm for 8-way connectivity, Spaghetti4C [Bolelli2021](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2021) algorithm for 4-way connectivity.

CCL_DEFAULT = -1,

/// SAUF [Wu2009](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Wu2009) algorithm for 8-way connectivity, SAUF algorithm for 4-way connectivity. The parallel implementation described in [Bolelli2017](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2017) is available for SAUF.

CCL_WU = 0,

/// BBDT [Grana2010](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Grana2010) algorithm for 8-way connectivity, SAUF algorithm for 4-way connectivity. The parallel implementation described in [Bolelli2017](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2017) is available for both BBDT and SAUF.

CCL_GRANA = 1,

/// Spaghetti [Bolelli2019](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2019) algorithm for 8-way connectivity, Spaghetti4C [Bolelli2021](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2021) algorithm for 4-way connectivity. The parallel implementation described in [Bolelli2017](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Bolelli2017) is available for both Spaghetti and Spaghetti4C.

CCL_BOLELLI = 2,

/// Same as CCL_WU. It is preferable to use the flag with the name of the algorithm (CCL_SAUF) rather than the one with the name of the first author (CCL_WU).

CCL_SAUF = 3,

/// Same as CCL_GRANA. It is preferable to use the flag with the name of the algorithm (CCL_BBDT) rather than the one with the name of the first author (CCL_GRANA).

CCL_BBDT = 4,

/// Same as CCL_BOLELLI. It is preferable to use the flag with the name of the algorithm (CCL_SPAGHETTI) rather than the one with the name of the first author (CCL_BOLELLI).

CCL_SPAGHETTI = 5,

}

opencv_type_enum! { crate::imgproc::ConnectedComponentsAlgorithmsTypes }

/// connected components statistics

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ConnectedComponentsTypes {

/// The leftmost (x) coordinate which is the inclusive start of the bounding

/// box in the horizontal direction.

CC_STAT_LEFT = 0,

/// The topmost (y) coordinate which is the inclusive start of the bounding

/// box in the vertical direction.

CC_STAT_TOP = 1,

/// The horizontal size of the bounding box

CC_STAT_WIDTH = 2,

/// The vertical size of the bounding box

CC_STAT_HEIGHT = 3,

/// The total area (in pixels) of the connected component

CC_STAT_AREA = 4,

/// Max enumeration value. Used internally only for memory allocation

CC_STAT_MAX = 5,

}

opencv_type_enum! { crate::imgproc::ConnectedComponentsTypes }

/// the contour approximation algorithm

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ContourApproximationModes {

/// stores absolutely all the contour points. That is, any 2 subsequent points (x1,y1) and

/// (x2,y2) of the contour will be either horizontal, vertical or diagonal neighbors, that is,

/// max(abs(x1-x2),abs(y2-y1))==1.

CHAIN_APPROX_NONE = 1,

/// compresses horizontal, vertical, and diagonal segments and leaves only their end points.

/// For example, an up-right rectangular contour is encoded with 4 points.

CHAIN_APPROX_SIMPLE = 2,

/// applies one of the flavors of the Teh-Chin chain approximation algorithm [TehChin89](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_TehChin89)

CHAIN_APPROX_TC89_L1 = 3,

/// applies one of the flavors of the Teh-Chin chain approximation algorithm [TehChin89](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_TehChin89)

CHAIN_APPROX_TC89_KCOS = 4,

}

opencv_type_enum! { crate::imgproc::ContourApproximationModes }

/// distanceTransform algorithm flags

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum DistanceTransformLabelTypes {

/// each connected component of zeros in src (as well as all the non-zero pixels closest to the

/// connected component) will be assigned the same label

DIST_LABEL_CCOMP = 0,

/// each zero pixel (and all the non-zero pixels closest to it) gets its own label.

DIST_LABEL_PIXEL = 1,

}

opencv_type_enum! { crate::imgproc::DistanceTransformLabelTypes }

/// Mask size for distance transform

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum DistanceTransformMasks {

/// mask=3

DIST_MASK_3 = 3,

/// mask=5

DIST_MASK_5 = 5,

DIST_MASK_PRECISE = 0,

}

opencv_type_enum! { crate::imgproc::DistanceTransformMasks }

/// Distance types for Distance Transform and M-estimators

/// ## See also

/// distanceTransform, fitLine

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum DistanceTypes {

/// User defined distance

DIST_USER = -1,

/// distance = |x1-x2| + |y1-y2|

DIST_L1 = 1,

/// the simple euclidean distance

DIST_L2 = 2,

/// distance = max(|x1-x2|,|y1-y2|)

DIST_C = 3,

/// L1-L2 metric: distance = 2(sqrt(1+x*x/2) - 1))

DIST_L12 = 4,

/// distance = c^2(|x|/c-log(1+|x|/c)), c = 1.3998

DIST_FAIR = 5,

/// distance = c^2/2(1-exp(-(x/c)^2)), c = 2.9846

DIST_WELSCH = 6,

/// distance = |x|<c ? x^2/2 : c(|x|-c/2), c=1.345

DIST_HUBER = 7,

}

opencv_type_enum! { crate::imgproc::DistanceTypes }

/// floodfill algorithm flags

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum FloodFillFlags {

/// If set, the difference between the current pixel and seed pixel is considered. Otherwise,

/// the difference between neighbor pixels is considered (that is, the range is floating).

FLOODFILL_FIXED_RANGE = 65536,

/// If set, the function does not change the image ( newVal is ignored), and only fills the

/// mask with the value specified in bits 8-16 of flags as described above. This option only make

/// sense in function variants that have the mask parameter.

FLOODFILL_MASK_ONLY = 131072,

}

opencv_type_enum! { crate::imgproc::FloodFillFlags }

/// class of the pixel in GrabCut algorithm

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum GrabCutClasses {

/// an obvious background pixels

GC_BGD = 0,

/// an obvious foreground (object) pixel

GC_FGD = 1,

/// a possible background pixel

GC_PR_BGD = 2,

/// a possible foreground pixel

GC_PR_FGD = 3,

}

opencv_type_enum! { crate::imgproc::GrabCutClasses }

/// GrabCut algorithm flags

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum GrabCutModes {

/// The function initializes the state and the mask using the provided rectangle. After that it

/// runs iterCount iterations of the algorithm.

GC_INIT_WITH_RECT = 0,

/// The function initializes the state using the provided mask. Note that GC_INIT_WITH_RECT

/// and GC_INIT_WITH_MASK can be combined. Then, all the pixels outside of the ROI are

/// automatically initialized with GC_BGD .

GC_INIT_WITH_MASK = 1,

/// The value means that the algorithm should just resume.

GC_EVAL = 2,

/// The value means that the algorithm should just run the grabCut algorithm (a single iteration) with the fixed model

GC_EVAL_FREEZE_MODEL = 3,

}

opencv_type_enum! { crate::imgproc::GrabCutModes }

/// Only a subset of Hershey fonts <https://en.wikipedia.org/wiki/Hershey_fonts> are supported

/// @ingroup imgproc_draw

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum HersheyFonts {

/// normal size sans-serif font

FONT_HERSHEY_SIMPLEX = 0,

/// small size sans-serif font

FONT_HERSHEY_PLAIN = 1,

/// normal size sans-serif font (more complex than FONT_HERSHEY_SIMPLEX)

FONT_HERSHEY_DUPLEX = 2,

/// normal size serif font

FONT_HERSHEY_COMPLEX = 3,

/// normal size serif font (more complex than FONT_HERSHEY_COMPLEX)

FONT_HERSHEY_TRIPLEX = 4,

/// smaller version of FONT_HERSHEY_COMPLEX

FONT_HERSHEY_COMPLEX_SMALL = 5,

/// hand-writing style font

FONT_HERSHEY_SCRIPT_SIMPLEX = 6,

/// more complex variant of FONT_HERSHEY_SCRIPT_SIMPLEX

FONT_HERSHEY_SCRIPT_COMPLEX = 7,

/// flag for italic font

FONT_ITALIC = 16,

}

opencv_type_enum! { crate::imgproc::HersheyFonts }

/// Histogram comparison methods

/// @ingroup imgproc_hist

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum HistCompMethods {

/// Correlation

///

/// where

///

/// and  is a total number of histogram bins.

HISTCMP_CORREL = 0,

/// Chi-Square

///

HISTCMP_CHISQR = 1,

/// Intersection

///

HISTCMP_INTERSECT = 2,

/// Bhattacharyya distance

/// (In fact, OpenCV computes Hellinger distance, which is related to Bhattacharyya coefficient.)

///

HISTCMP_BHATTACHARYYA = 3,

// Synonym for HISTCMP_BHATTACHARYYA

// Duplicate, use HISTCMP_BHATTACHARYYA instead

// HISTCMP_HELLINGER = 3,

/// Alternative Chi-Square

///

/// This alternative formula is regularly used for texture comparison. See e.g. [Puzicha1997](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Puzicha1997)

HISTCMP_CHISQR_ALT = 4,

/// Kullback-Leibler divergence

///

HISTCMP_KL_DIV = 5,

}

opencv_type_enum! { crate::imgproc::HistCompMethods }

/// Variants of a Hough transform

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum HoughModes {

/// classical or standard Hough transform. Every line is represented by two floating-point

/// numbers  , where  is a distance between (0,0) point and the line,

/// and  is the angle between x-axis and the normal to the line. Thus, the matrix must

/// be (the created sequence will be) of CV_32FC2 type

HOUGH_STANDARD = 0,

/// probabilistic Hough transform (more efficient in case if the picture contains a few long

/// linear segments). It returns line segments rather than the whole line. Each segment is

/// represented by starting and ending points, and the matrix must be (the created sequence will

/// be) of the CV_32SC4 type.

HOUGH_PROBABILISTIC = 1,

/// multi-scale variant of the classical Hough transform. The lines are encoded the same way as

/// HOUGH_STANDARD.

HOUGH_MULTI_SCALE = 2,

/// basically *21HT*, described in [Yuen90](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Yuen90)

HOUGH_GRADIENT = 3,

/// variation of HOUGH_GRADIENT to get better accuracy

HOUGH_GRADIENT_ALT = 4,

}

opencv_type_enum! { crate::imgproc::HoughModes }

/// interpolation algorithm

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum InterpolationFlags {

/// nearest neighbor interpolation

INTER_NEAREST = 0,

/// bilinear interpolation

INTER_LINEAR = 1,

/// bicubic interpolation

INTER_CUBIC = 2,

/// resampling using pixel area relation. It may be a preferred method for image decimation, as

/// it gives moire'-free results. But when the image is zoomed, it is similar to the INTER_NEAREST

/// method.

INTER_AREA = 3,

/// Lanczos interpolation over 8x8 neighborhood

INTER_LANCZOS4 = 4,

/// Bit exact bilinear interpolation

INTER_LINEAR_EXACT = 5,

/// Bit exact nearest neighbor interpolation. This will produce same results as

/// the nearest neighbor method in PIL, scikit-image or Matlab.

INTER_NEAREST_EXACT = 6,

/// mask for interpolation codes

INTER_MAX = 7,