pub mod video {

//! # Video Analysis

//! # Motion Analysis

//! # Object Tracking

use crate::mod_prelude::*;

use crate::{core, sys, types};

pub mod prelude {

pub use super::{BackgroundSubtractorKNNTrait, BackgroundSubtractorKNNTraitConst, BackgroundSubtractorMOG2Trait, BackgroundSubtractorMOG2TraitConst, BackgroundSubtractorTrait, BackgroundSubtractorTraitConst, DISOpticalFlowTrait, DISOpticalFlowTraitConst, DenseOpticalFlowTrait, DenseOpticalFlowTraitConst, FarnebackOpticalFlowTrait, FarnebackOpticalFlowTraitConst, KalmanFilterTrait, KalmanFilterTraitConst, SparseOpticalFlowTrait, SparseOpticalFlowTraitConst, SparsePyrLKOpticalFlowTrait, SparsePyrLKOpticalFlowTraitConst, TrackerDaSiamRPNTrait, TrackerDaSiamRPNTraitConst, TrackerDaSiamRPN_ParamsTrait, TrackerDaSiamRPN_ParamsTraitConst, TrackerGOTURNTrait, TrackerGOTURNTraitConst, TrackerGOTURN_ParamsTrait, TrackerGOTURN_ParamsTraitConst, TrackerMILTrait, TrackerMILTraitConst, TrackerNanoTrait, TrackerNanoTraitConst, TrackerNano_ParamsTrait, TrackerNano_ParamsTraitConst, TrackerTrait, TrackerTraitConst, TrackerVitTrait, TrackerVitTraitConst, TrackerVit_ParamsTrait, TrackerVit_ParamsTraitConst, VariationalRefinementTrait, VariationalRefinementTraitConst};

}

pub const DISOpticalFlow_PRESET_FAST: i32 = 1;

pub const DISOpticalFlow_PRESET_MEDIUM: i32 = 2;

pub const DISOpticalFlow_PRESET_ULTRAFAST: i32 = 0;

pub const MOTION_AFFINE: i32 = 2;

pub const MOTION_EUCLIDEAN: i32 = 1;

pub const MOTION_HOMOGRAPHY: i32 = 3;

pub const MOTION_TRANSLATION: i32 = 0;

pub const OPTFLOW_FARNEBACK_GAUSSIAN: i32 = 256;

pub const OPTFLOW_LK_GET_MIN_EIGENVALS: i32 = 8;

pub const OPTFLOW_USE_INITIAL_FLOW: i32 = 4;

/// Finds an object center, size, and orientation.

///

/// ## Parameters

/// * probImage: Back projection of the object histogram. See calcBackProject.

/// * window: Initial search window.

/// * criteria: Stop criteria for the underlying meanShift.

/// returns

/// (in old interfaces) Number of iterations CAMSHIFT took to converge

/// The function implements the CAMSHIFT object tracking algorithm [Bradski98](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Bradski98) . First, it finds an

/// object center using meanShift and then adjusts the window size and finds the optimal rotation. The

/// function returns the rotated rectangle structure that includes the object position, size, and

/// orientation. The next position of the search window can be obtained with RotatedRect::boundingRect().

///

/// See the OpenCV sample camshiftdemo.c that tracks colored objects.

///

///

/// Note:

/// * (Python) A sample explaining the camshift tracking algorithm can be found at

/// opencv_source_code/samples/python/camshift.py

#[inline]

pub fn cam_shift(prob_image: &impl ToInputArray, window: &mut core::Rect, criteria: core::TermCriteria) -> Result<core::RotatedRect> {

input_array_arg!(prob_image);

return_send!(via ocvrs_return);

unsafe { sys::cv_CamShift_const__InputArrayR_RectR_TermCriteria(prob_image.as_raw__InputArray(), window, &criteria, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Constructs the image pyramid which can be passed to calcOpticalFlowPyrLK.

///

/// ## Parameters

/// * img: 8-bit input image.

/// * pyramid: output pyramid.

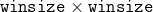

/// * winSize: window size of the optical flow algorithm. Must be no smaller than the winSize argument of

/// calcOpticalFlowPyrLK. It is needed to calculate the required padding for pyramid levels.

/// * maxLevel: 0-based maximal pyramid level number.

/// * withDerivatives: set to precompute gradients for every pyramid level. If the pyramid is

/// constructed without the gradients, calcOpticalFlowPyrLK will calculate them internally.

/// * pyrBorder: the border mode for pyramid layers.

/// * derivBorder: the border mode for gradients.

/// * tryReuseInputImage: put ROI of input image into the pyramid if possible. You can pass false

/// to force data copying.

/// ## Returns

/// number of levels in constructed pyramid. Can be less than maxLevel.

///

/// ## Note

/// This alternative version of [build_optical_flow_pyramid] function uses the following default values for its arguments:

/// * with_derivatives: true

/// * pyr_border: BORDER_REFLECT_101

/// * deriv_border: BORDER_CONSTANT

/// * try_reuse_input_image: true

#[inline]

pub fn build_optical_flow_pyramid_def(img: &impl ToInputArray, pyramid: &mut impl ToOutputArray, win_size: core::Size, max_level: i32) -> Result<i32> {

input_array_arg!(img);

output_array_arg!(pyramid);

return_send!(via ocvrs_return);

unsafe { sys::cv_buildOpticalFlowPyramid_const__InputArrayR_const__OutputArrayR_Size_int(img.as_raw__InputArray(), pyramid.as_raw__OutputArray(), &win_size, max_level, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Constructs the image pyramid which can be passed to calcOpticalFlowPyrLK.

///

/// ## Parameters

/// * img: 8-bit input image.

/// * pyramid: output pyramid.

/// * winSize: window size of the optical flow algorithm. Must be no smaller than the winSize argument of

/// calcOpticalFlowPyrLK. It is needed to calculate the required padding for pyramid levels.

/// * maxLevel: 0-based maximal pyramid level number.

/// * withDerivatives: set to precompute gradients for every pyramid level. If the pyramid is

/// constructed without the gradients, calcOpticalFlowPyrLK will calculate them internally.

/// * pyrBorder: the border mode for pyramid layers.

/// * derivBorder: the border mode for gradients.

/// * tryReuseInputImage: put ROI of input image into the pyramid if possible. You can pass false

/// to force data copying.

/// ## Returns

/// number of levels in constructed pyramid. Can be less than maxLevel.

///

/// ## C++ default parameters

/// * with_derivatives: true

/// * pyr_border: BORDER_REFLECT_101

/// * deriv_border: BORDER_CONSTANT

/// * try_reuse_input_image: true

#[inline]

pub fn build_optical_flow_pyramid(img: &impl ToInputArray, pyramid: &mut impl ToOutputArray, win_size: core::Size, max_level: i32, with_derivatives: bool, pyr_border: i32, deriv_border: i32, try_reuse_input_image: bool) -> Result<i32> {

input_array_arg!(img);

output_array_arg!(pyramid);

return_send!(via ocvrs_return);

unsafe { sys::cv_buildOpticalFlowPyramid_const__InputArrayR_const__OutputArrayR_Size_int_bool_int_int_bool(img.as_raw__InputArray(), pyramid.as_raw__OutputArray(), &win_size, max_level, with_derivatives, pyr_border, deriv_border, try_reuse_input_image, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes a dense optical flow using Gunnar Farneback's algorithm.

///

/// ## Parameters

/// * prev: first 8-bit single-channel input image.

/// * next: second input image of the same size and the same type as prev.

/// * flow: computed flow image that has the same size as prev and type CV_32FC2.

/// * pyr_scale: parameter, specifying the image scale (\<1) to build pyramids for each image;

/// pyr_scale=0.5 means a classical pyramid, where each next layer is half the size of the previous

/// one.

/// * levels: number of pyramid layers including the initial image; levels=1 means that no extra

/// layers are created and only the original images are used.

/// * winsize: averaging window size; larger values increase the algorithm robustness to image

/// noise and give more chances for fast motion detection, but yield more blurred motion field.

/// * iterations: number of iterations the algorithm does at each pyramid level.

/// * poly_n: size of the pixel neighborhood used to find polynomial expansion in each pixel;

/// larger values mean that the image will be approximated with smoother surfaces, yielding more

/// robust algorithm and more blurred motion field; typically poly_n = 5 or 7.

/// * poly_sigma: standard deviation of the Gaussian that is used to smooth derivatives used as a

/// basis for the polynomial expansion; for poly_n=5, you can set poly_sigma=1.1, for poly_n=7, a

/// good value would be poly_sigma=1.5.

/// * flags: operation flags that can be a combination of the following:

/// * **OPTFLOW_USE_INITIAL_FLOW** uses the input flow as an initial flow approximation.

/// * **OPTFLOW_FARNEBACK_GAUSSIAN** uses the Gaussian

/// filter instead of a box filter of the same size for optical flow estimation; usually, this

/// option gives a more accurate flow than with a box filter, at the cost of lower speed;

/// normally, winsize for a Gaussian window should be set to a larger value to achieve the same

/// level of robustness.

///

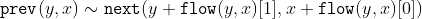

/// The function finds an optical flow for each prev pixel using the [Farneback2003](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Farneback2003) algorithm so that

///

/// `prev(y, x) \sim next(y + flow(y, x)[1], x + flow(y, x)[0])`

///

/// Note: Some examples:

///

/// * An example using the optical flow algorithm described by Gunnar Farneback can be found at

/// opencv_source_code/samples/cpp/fback.cpp

/// * (Python) An example using the optical flow algorithm described by Gunnar Farneback can be

/// found at opencv_source_code/samples/python/opt_flow.py
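///
/// ## Example
///
/// A minimal sketch of how the `flags` bitmask described above can be assembled. The constants are
/// redeclared locally with the values defined in this module so that the snippet stands alone, and
/// `farneback_flags` is a hypothetical helper, not part of the bindings.
///
/// ```rust
/// // Local copies of the module constants (assumed to match the values declared in this file).
/// const OPTFLOW_USE_INITIAL_FLOW: i32 = 4;
/// const OPTFLOW_FARNEBACK_GAUSSIAN: i32 = 256;
///
/// // Hypothetical helper: combines the two documented flags with bitwise OR.
/// fn farneback_flags(use_initial_flow: bool, gaussian: bool) -> i32 {
///     let mut flags = 0;
///     if use_initial_flow {
///         flags |= OPTFLOW_USE_INITIAL_FLOW;
///     }
///     if gaussian {
///         flags |= OPTFLOW_FARNEBACK_GAUSSIAN;
///     }
///     flags
/// }
/// ```
///
/// The resulting value would be passed as the `flags` argument; passing 0 selects the box filter
/// and ignores any initial content of `flow`.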

#[inline]

pub fn calc_optical_flow_farneback(prev: &impl ToInputArray, next: &impl ToInputArray, flow: &mut impl ToInputOutputArray, pyr_scale: f64, levels: i32, winsize: i32, iterations: i32, poly_n: i32, poly_sigma: f64, flags: i32) -> Result<()> {

input_array_arg!(prev);

input_array_arg!(next);

input_output_array_arg!(flow);

return_send!(via ocvrs_return);

unsafe { sys::cv_calcOpticalFlowFarneback_const__InputArrayR_const__InputArrayR_const__InputOutputArrayR_double_int_int_int_int_double_int(prev.as_raw__InputArray(), next.as_raw__InputArray(), flow.as_raw__InputOutputArray(), pyr_scale, levels, winsize, iterations, poly_n, poly_sigma, flags, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Calculates an optical flow for a sparse feature set using the iterative Lucas-Kanade method with

/// pyramids.

///

/// ## Parameters

/// * prevImg: first 8-bit input image or pyramid constructed by buildOpticalFlowPyramid.

/// * nextImg: second input image or pyramid of the same size and the same type as prevImg.

/// * prevPts: vector of 2D points for which the flow needs to be found; point coordinates must be

/// single-precision floating-point numbers.

/// * nextPts: output vector of 2D points (with single-precision floating-point coordinates)

/// containing the calculated new positions of input features in the second image; when

/// OPTFLOW_USE_INITIAL_FLOW flag is passed, the vector must have the same size as in the input.

/// * status: output status vector (of unsigned chars); each element of the vector is set to 1 if

/// the flow for the corresponding features has been found, otherwise, it is set to 0.

/// * err: output vector of errors; each element of the vector is set to an error for the

/// corresponding feature, type of the error measure can be set in flags parameter; if the flow wasn't

/// found then the error is not defined (use the status parameter to find such cases).

/// * winSize: size of the search window at each pyramid level.

/// * maxLevel: 0-based maximal pyramid level number; if set to 0, pyramids are not used (single

/// level), if set to 1, two levels are used, and so on; if pyramids are passed to input then

/// algorithm will use as many levels as pyramids have but no more than maxLevel.

/// * criteria: parameter, specifying the termination criteria of the iterative search algorithm

/// (after the specified maximum number of iterations criteria.maxCount or when the search window

/// moves by less than criteria.epsilon).

/// * flags: operation flags:

/// * **OPTFLOW_USE_INITIAL_FLOW** uses initial estimations, stored in nextPts; if the flag is

/// not set, then prevPts is copied to nextPts and is considered the initial estimate.

/// * **OPTFLOW_LK_GET_MIN_EIGENVALS** uses minimum eigenvalues as an error measure (see

/// minEigThreshold description); if the flag is not set, then L1 distance between patches

/// around the original and a moved point, divided by number of pixels in a window, is used as an

/// error measure.

/// * minEigThreshold: the algorithm calculates the minimum eigenvalue of a 2x2 normal matrix of

/// optical flow equations (this matrix is called a spatial gradient matrix in [Bouguet00](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Bouguet00)), divided

/// by number of pixels in a window; if this value is less than minEigThreshold, then the corresponding

/// feature is filtered out and its flow is not processed, which removes bad points and gives a

/// performance boost.

///

/// The function implements a sparse iterative version of the Lucas-Kanade optical flow in pyramids. See

/// [Bouguet00](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Bouguet00) . The function is parallelized with the TBB library.

///

///

/// Note: Some examples:

///

/// * An example using the Lucas-Kanade optical flow algorithm can be found at

/// opencv_source_code/samples/cpp/lkdemo.cpp

/// * (Python) An example using the Lucas-Kanade optical flow algorithm can be found at

/// opencv_source_code/samples/python/lk_track.py

/// * (Python) An example using the Lucas-Kanade tracker for homography matching can be found at

/// opencv_source_code/samples/python/lk_homography.py

///

/// ## Note

/// This alternative version of [calc_optical_flow_pyr_lk] function uses the following default values for its arguments:

/// * win_size: Size(21,21)

/// * max_level: 3

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,0.01)

/// * flags: 0

/// * min_eig_threshold: 1e-4

#[inline]

pub fn calc_optical_flow_pyr_lk_def(prev_img: &impl ToInputArray, next_img: &impl ToInputArray, prev_pts: &impl ToInputArray, next_pts: &mut impl ToInputOutputArray, status: &mut impl ToOutputArray, err: &mut impl ToOutputArray) -> Result<()> {

input_array_arg!(prev_img);

input_array_arg!(next_img);

input_array_arg!(prev_pts);

input_output_array_arg!(next_pts);

output_array_arg!(status);

output_array_arg!(err);

return_send!(via ocvrs_return);

unsafe { sys::cv_calcOpticalFlowPyrLK_const__InputArrayR_const__InputArrayR_const__InputArrayR_const__InputOutputArrayR_const__OutputArrayR_const__OutputArrayR(prev_img.as_raw__InputArray(), next_img.as_raw__InputArray(), prev_pts.as_raw__InputArray(), next_pts.as_raw__InputOutputArray(), status.as_raw__OutputArray(), err.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Calculates an optical flow for a sparse feature set using the iterative Lucas-Kanade method with

/// pyramids.

///

/// ## Parameters

/// * prevImg: first 8-bit input image or pyramid constructed by buildOpticalFlowPyramid.

/// * nextImg: second input image or pyramid of the same size and the same type as prevImg.

/// * prevPts: vector of 2D points for which the flow needs to be found; point coordinates must be

/// single-precision floating-point numbers.

/// * nextPts: output vector of 2D points (with single-precision floating-point coordinates)

/// containing the calculated new positions of input features in the second image; when

/// OPTFLOW_USE_INITIAL_FLOW flag is passed, the vector must have the same size as in the input.

/// * status: output status vector (of unsigned chars); each element of the vector is set to 1 if

/// the flow for the corresponding features has been found, otherwise, it is set to 0.

/// * err: output vector of errors; each element of the vector is set to an error for the

/// corresponding feature, type of the error measure can be set in flags parameter; if the flow wasn't

/// found then the error is not defined (use the status parameter to find such cases).

/// * winSize: size of the search window at each pyramid level.

/// * maxLevel: 0-based maximal pyramid level number; if set to 0, pyramids are not used (single

/// level), if set to 1, two levels are used, and so on; if pyramids are passed to input then

/// algorithm will use as many levels as pyramids have but no more than maxLevel.

/// * criteria: parameter, specifying the termination criteria of the iterative search algorithm

/// (after the specified maximum number of iterations criteria.maxCount or when the search window

/// moves by less than criteria.epsilon).

/// * flags: operation flags:

/// * **OPTFLOW_USE_INITIAL_FLOW** uses initial estimations, stored in nextPts; if the flag is

/// not set, then prevPts is copied to nextPts and is considered the initial estimate.

/// * **OPTFLOW_LK_GET_MIN_EIGENVALS** uses minimum eigenvalues as an error measure (see

/// minEigThreshold description); if the flag is not set, then L1 distance between patches

/// around the original and a moved point, divided by number of pixels in a window, is used as an

/// error measure.

/// * minEigThreshold: the algorithm calculates the minimum eigenvalue of a 2x2 normal matrix of

/// optical flow equations (this matrix is called a spatial gradient matrix in [Bouguet00](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Bouguet00)), divided

/// by number of pixels in a window; if this value is less than minEigThreshold, then the corresponding

/// feature is filtered out and its flow is not processed, which removes bad points and gives a

/// performance boost.

///

/// The function implements a sparse iterative version of the Lucas-Kanade optical flow in pyramids. See

/// [Bouguet00](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Bouguet00) . The function is parallelized with the TBB library.

///

///

/// Note: Some examples:

///

/// * An example using the Lucas-Kanade optical flow algorithm can be found at

/// opencv_source_code/samples/cpp/lkdemo.cpp

/// * (Python) An example using the Lucas-Kanade optical flow algorithm can be found at

/// opencv_source_code/samples/python/lk_track.py

/// * (Python) An example using the Lucas-Kanade tracker for homography matching can be found at

/// opencv_source_code/samples/python/lk_homography.py

///

/// ## C++ default parameters

/// * win_size: Size(21,21)

/// * max_level: 3

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,0.01)

/// * flags: 0

/// * min_eig_threshold: 1e-4

#[inline]

pub fn calc_optical_flow_pyr_lk(prev_img: &impl ToInputArray, next_img: &impl ToInputArray, prev_pts: &impl ToInputArray, next_pts: &mut impl ToInputOutputArray, status: &mut impl ToOutputArray, err: &mut impl ToOutputArray, win_size: core::Size, max_level: i32, criteria: core::TermCriteria, flags: i32, min_eig_threshold: f64) -> Result<()> {

input_array_arg!(prev_img);

input_array_arg!(next_img);

input_array_arg!(prev_pts);

input_output_array_arg!(next_pts);

output_array_arg!(status);

output_array_arg!(err);

return_send!(via ocvrs_return);

unsafe { sys::cv_calcOpticalFlowPyrLK_const__InputArrayR_const__InputArrayR_const__InputArrayR_const__InputOutputArrayR_const__OutputArrayR_const__OutputArrayR_Size_int_TermCriteria_int_double(prev_img.as_raw__InputArray(), next_img.as_raw__InputArray(), prev_pts.as_raw__InputArray(), next_pts.as_raw__InputOutputArray(), status.as_raw__OutputArray(), err.as_raw__OutputArray(), &win_size, max_level, &criteria, flags, min_eig_threshold, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes the Enhanced Correlation Coefficient value between two images [EP08](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_EP08) .

///

/// ## Parameters

/// * templateImage: single-channel template image; CV_8U or CV_32F array.

/// * inputImage: single-channel input image to be warped to provide an image similar to

/// templateImage, same type as templateImage.

/// * inputMask: An optional mask to indicate valid values of inputImage.

/// ## See also

/// findTransformECC

///

/// ## Note

/// This alternative version of [compute_ecc] function uses the following default values for its arguments:

/// * input_mask: noArray()

#[inline]

pub fn compute_ecc_def(template_image: &impl ToInputArray, input_image: &impl ToInputArray) -> Result<f64> {

input_array_arg!(template_image);

input_array_arg!(input_image);

return_send!(via ocvrs_return);

unsafe { sys::cv_computeECC_const__InputArrayR_const__InputArrayR(template_image.as_raw__InputArray(), input_image.as_raw__InputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes the Enhanced Correlation Coefficient value between two images [EP08](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_EP08) .

///

/// ## Parameters

/// * templateImage: single-channel template image; CV_8U or CV_32F array.

/// * inputImage: single-channel input image to be warped to provide an image similar to

/// templateImage, same type as templateImage.

/// * inputMask: An optional mask to indicate valid values of inputImage.

/// ## See also

/// findTransformECC

///

/// ## C++ default parameters

/// * input_mask: noArray()

#[inline]

pub fn compute_ecc(template_image: &impl ToInputArray, input_image: &impl ToInputArray, input_mask: &impl ToInputArray) -> Result<f64> {

input_array_arg!(template_image);

input_array_arg!(input_image);

input_array_arg!(input_mask);

return_send!(via ocvrs_return);

unsafe { sys::cv_computeECC_const__InputArrayR_const__InputArrayR_const__InputArrayR(template_image.as_raw__InputArray(), input_image.as_raw__InputArray(), input_mask.as_raw__InputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Creates KNN Background Subtractor

///

/// ## Parameters

/// * history: Length of the history.

/// * dist2Threshold: Threshold on the squared distance between the pixel and the sample to decide

/// whether a pixel is close to that sample. This parameter does not affect the background update.

/// * detectShadows: If true, the algorithm will detect shadows and mark them. It decreases the

/// speed a bit, so if you do not need this feature, set the parameter to false.

///

/// ## Note

/// This alternative version of [create_background_subtractor_knn] function uses the following default values for its arguments:

/// * history: 500

/// * dist2_threshold: 400.0

/// * detect_shadows: true

#[inline]

pub fn create_background_subtractor_knn_def() -> Result<core::Ptr<crate::video::BackgroundSubtractorKNN>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_createBackgroundSubtractorKNN(ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::video::BackgroundSubtractorKNN>::opencv_from_extern(ret) };

Ok(ret)

}

/// Creates KNN Background Subtractor

///

/// ## Parameters

/// * history: Length of the history.

/// * dist2Threshold: Threshold on the squared distance between the pixel and the sample to decide

/// whether a pixel is close to that sample. This parameter does not affect the background update.

/// * detectShadows: If true, the algorithm will detect shadows and mark them. It decreases the

/// speed a bit, so if you do not need this feature, set the parameter to false.

///

/// ## C++ default parameters

/// * history: 500

/// * dist2_threshold: 400.0

/// * detect_shadows: true

#[inline]

pub fn create_background_subtractor_knn(history: i32, dist2_threshold: f64, detect_shadows: bool) -> Result<core::Ptr<crate::video::BackgroundSubtractorKNN>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_createBackgroundSubtractorKNN_int_double_bool(history, dist2_threshold, detect_shadows, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::video::BackgroundSubtractorKNN>::opencv_from_extern(ret) };

Ok(ret)

}

/// Creates MOG2 Background Subtractor

///

/// ## Parameters

/// * history: Length of the history.

/// * varThreshold: Threshold on the squared Mahalanobis distance between the pixel and the model

/// to decide whether a pixel is well described by the background model. This parameter does not

/// affect the background update.

/// * detectShadows: If true, the algorithm will detect shadows and mark them. It decreases the

/// speed a bit, so if you do not need this feature, set the parameter to false.

///

/// ## Note

/// This alternative version of [create_background_subtractor_mog2] function uses the following default values for its arguments:

/// * history: 500

/// * var_threshold: 16

/// * detect_shadows: true

#[inline]

pub fn create_background_subtractor_mog2_def() -> Result<core::Ptr<crate::video::BackgroundSubtractorMOG2>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_createBackgroundSubtractorMOG2(ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::video::BackgroundSubtractorMOG2>::opencv_from_extern(ret) };

Ok(ret)

}

/// Creates MOG2 Background Subtractor

///

/// ## Parameters

/// * history: Length of the history.

/// * varThreshold: Threshold on the squared Mahalanobis distance between the pixel and the model

/// to decide whether a pixel is well described by the background model. This parameter does not

/// affect the background update.

/// * detectShadows: If true, the algorithm will detect shadows and mark them. It decreases the

/// speed a bit, so if you do not need this feature, set the parameter to false.

///

/// ## C++ default parameters

/// * history: 500

/// * var_threshold: 16

/// * detect_shadows: true

#[inline]

pub fn create_background_subtractor_mog2(history: i32, var_threshold: f64, detect_shadows: bool) -> Result<core::Ptr<crate::video::BackgroundSubtractorMOG2>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_createBackgroundSubtractorMOG2_int_double_bool(history, var_threshold, detect_shadows, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<crate::video::BackgroundSubtractorMOG2>::opencv_from_extern(ret) };

Ok(ret)

}

/// Computes an optimal affine transformation between two 2D point sets.

///

/// ## Parameters

/// * src: First input 2D point set stored in std::vector or Mat, or an image stored in Mat.

/// * dst: Second input 2D point set of the same size and the same type as src, or another image.

/// * fullAffine: If true, the function finds an optimal affine transformation with no additional

/// restrictions (6 degrees of freedom). Otherwise, the class of transformations to choose from is

/// limited to combinations of translation, rotation, and uniform scaling (4 degrees of freedom).

///

/// The function finds an optimal affine transform *[A|b]* (a 2 x 3 floating-point matrix) that

/// approximates best the affine transformation between:

///

/// * Two point sets

/// * Two raster images. In this case, the function first finds some features in the src image and

/// finds the corresponding features in dst image. After that, the problem is reduced to the first

/// case.

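///

/// In case of point sets, the problem is formulated as follows: you need to find a 2x2 matrix *A* and

/// 2x1 vector *b* so that:

///

/// `[A^*|b^*] = \arg\min_{[A|b]} \sum_i \| dst[i] - A \, src[i]^T - b \|^2`

///

/// where src[i] and dst[i] are the i-th points in src and dst, respectively.

/// *[A|b]* can be either arbitrary (when fullAffine=true) or have the form

///

/// `\begin{bmatrix} a_{11} & a_{12} & b_1 \\ -a_{12} & a_{11} & b_2 \end{bmatrix}`

///

/// when fullAffine=false.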

///

///

/// **Deprecated**: Use cv::estimateAffine2D, cv::estimateAffinePartial2D instead. If you are using this function

/// with images, extract points using cv::calcOpticalFlowPyrLK and then use the estimation functions.

/// ## See also

/// estimateAffine2D, estimateAffinePartial2D, getAffineTransform, getPerspectiveTransform, findHomography
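///
/// ## Example
///
/// A minimal sketch of what the estimated transform *[A|b]* does to a point, assuming the
/// restricted form `[a11 a12 b1; -a12 a11 b2]` that applies when fullAffine=false. The type alias
/// and both helpers are hypothetical and only illustrate the arithmetic; they are not part of the
/// bindings and do not perform any estimation.
///
/// ```rust
/// // A 2x3 affine transform [A|b] stored row by row.
/// type Affine2x3 = [[f64; 3]; 2];
///
/// // Hypothetical helper: builds the restricted (4 degrees of freedom) form
/// // [ a11  a12  b1 ]
/// // [-a12  a11  b2 ]
/// fn restricted_affine(a11: f64, a12: f64, b1: f64, b2: f64) -> Affine2x3 {
///     [[a11, a12, b1], [-a12, a11, b2]]
/// }
///
/// // Hypothetical helper: maps a source point p to A * p + b.
/// fn apply_affine(m: &Affine2x3, p: (f64, f64)) -> (f64, f64) {
///     (
///         m[0][0] * p.0 + m[0][1] * p.1 + m[0][2],
///         m[1][0] * p.0 + m[1][1] * p.1 + m[1][2],
///     )
/// }
/// ```
///
/// With `a11 = 0` and `a12 = -1` the linear part is a 90 degree rotation, so the point `(1, 0)`
/// is rotated to `(0, 1)` and then shifted by `(b1, b2)`.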

#[deprecated = "Use cv::estimateAffine2D, cv::estimateAffinePartial2D instead. If you are using this function with images, extract points using cv::calcOpticalFlowPyrLK and then use the estimation functions."]

#[inline]

pub fn estimate_rigid_transform(src: &impl ToInputArray, dst: &impl ToInputArray, full_affine: bool) -> Result<core::Mat> {

input_array_arg!(src);

input_array_arg!(dst);

return_send!(via ocvrs_return);

unsafe { sys::cv_estimateRigidTransform_const__InputArrayR_const__InputArrayR_bool(src.as_raw__InputArray(), dst.as_raw__InputArray(), full_affine, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Mat::opencv_from_extern(ret) };

Ok(ret)

}

/// @overload

///

/// ## Note

/// This alternative version of [find_transform_ecc_1] function uses the following default values for its arguments:

/// * motion_type: MOTION_AFFINE

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,50,0.001)

/// * input_mask: noArray()

#[inline]

pub fn find_transform_ecc_1_def(template_image: &impl ToInputArray, input_image: &impl ToInputArray, warp_matrix: &mut impl ToInputOutputArray) -> Result<f64> {

input_array_arg!(template_image);

input_array_arg!(input_image);

input_output_array_arg!(warp_matrix);

return_send!(via ocvrs_return);

unsafe { sys::cv_findTransformECC_const__InputArrayR_const__InputArrayR_const__InputOutputArrayR(template_image.as_raw__InputArray(), input_image.as_raw__InputArray(), warp_matrix.as_raw__InputOutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds the geometric transform (warp) between two images in terms of the ECC criterion [EP08](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_EP08) .

///

/// ## Parameters

/// * templateImage: single-channel template image; CV_8U or CV_32F array.

/// * inputImage: single-channel input image which should be warped with the final warpMatrix in

/// order to provide an image similar to templateImage, same type as templateImage.

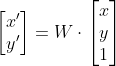

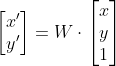

/// * warpMatrix: floating-point 2x3 or 3x3 mapping matrix (warp).

/// * motionType: parameter, specifying the type of motion:

/// * **MOTION_TRANSLATION** sets a translational motion model; warpMatrix is 2x3 with

/// the first 2x2 part being the unity matrix and the remaining two parameters being

/// estimated.

/// * **MOTION_EUCLIDEAN** sets a Euclidean (rigid) transformation as motion model; three

/// parameters are estimated; warpMatrix is 2x3.

/// * **MOTION_AFFINE** sets an affine motion model (DEFAULT); six parameters are estimated;

/// warpMatrix is 2x3.

/// * **MOTION_HOMOGRAPHY** sets a homography as a motion model; eight parameters are

/// estimated; warpMatrix is 3x3.

/// * criteria: parameter, specifying the termination criteria of the ECC algorithm;

/// criteria.epsilon defines the threshold of the increment in the correlation coefficient between two

/// iterations (a negative criteria.epsilon makes criteria.maxCount the only termination criterion).

/// Default values are shown in the declaration above.

/// * inputMask: An optional mask to indicate valid values of inputImage.

/// * gaussFiltSize: An optional value indicating size of gaussian blur filter; (DEFAULT: 5)

///

/// The function estimates the optimum transformation (warpMatrix) with respect to ECC criterion

/// ([EP08](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_EP08)), that is

///

/// `warpMatrix = \arg\max_{W} ECC(templateImage(x, y), inputImage(x', y'))`

///

/// where

///

/// `\begin{bmatrix} x' \\ y' \end{bmatrix} = W \cdot \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}`

///

/// (the equation holds with homogeneous coordinates for homography). It returns the final enhanced

/// correlation coefficient, that is the correlation coefficient between the template image and the

/// final warped input image. When a 3x3 matrix is given with motionType = 0, 1 or 2, the third

/// row is ignored.

///

/// Unlike findHomography and estimateRigidTransform, the function findTransformECC implements an

/// area-based alignment that builds on intensity similarities. In essence, the function updates the

/// initial transformation that roughly aligns the images. If this information is missing, the identity

/// warp (unity matrix) is used as an initialization. Note that if images undergo strong

/// displacements/rotations, an initial transformation that roughly aligns the images is necessary

/// (e.g., a simple euclidean/similarity transform that allows for the images showing the same image

/// content approximately). Use inverse warping in the second image to take an image close to the first

/// one, i.e. use the flag WARP_INVERSE_MAP with warpAffine or warpPerspective. See also the OpenCV

/// sample image_alignment.cpp that demonstrates the use of the function. Note that the function throws

/// an exception if the algorithm does not converge.

/// ## See also

/// computeECC, estimateAffine2D, estimateAffinePartial2D, findHomography

///

/// ## Overloaded parameters

///

/// ## C++ default parameters

/// * motion_type: MOTION_AFFINE

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,50,0.001)

/// * input_mask: noArray()

#[inline]

pub fn find_transform_ecc_1(template_image: &impl ToInputArray, input_image: &impl ToInputArray, warp_matrix: &mut impl ToInputOutputArray, motion_type: i32, criteria: core::TermCriteria, input_mask: &impl ToInputArray) -> Result<f64> {

input_array_arg!(template_image);

input_array_arg!(input_image);

input_output_array_arg!(warp_matrix);

input_array_arg!(input_mask);

return_send!(via ocvrs_return);

unsafe { sys::cv_findTransformECC_const__InputArrayR_const__InputArrayR_const__InputOutputArrayR_int_TermCriteria_const__InputArrayR(template_image.as_raw__InputArray(), input_image.as_raw__InputArray(), warp_matrix.as_raw__InputOutputArray(), motion_type, &criteria, input_mask.as_raw__InputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds the geometric transform (warp) between two images in terms of the ECC criterion [EP08](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_EP08).

///

/// ## Parameters

/// * templateImage: single-channel template image; CV_8U or CV_32F array.

/// * inputImage: single-channel input image which should be warped with the final warpMatrix in

/// order to provide an image similar to templateImage, same type as templateImage.

/// * warpMatrix: floating-point 2×3 or 3×3 mapping matrix (warp).

/// * motionType: parameter, specifying the type of motion:

/// * **MOTION_TRANSLATION** sets a translational motion model; warpMatrix is 2×3 with

/// the first 2×2 part being the identity matrix and the remaining two parameters being

/// estimated.

/// * **MOTION_EUCLIDEAN** sets a Euclidean (rigid) transformation as motion model; three

/// parameters are estimated; warpMatrix is 2×3.

/// * **MOTION_AFFINE** sets an affine motion model (DEFAULT); six parameters are estimated;

/// warpMatrix is 2×3.

/// * **MOTION_HOMOGRAPHY** sets a homography as a motion model; eight parameters are

/// estimated; warpMatrix is 3×3.

/// * criteria: parameter, specifying the termination criteria of the ECC algorithm;

/// criteria.epsilon defines the threshold of the increment in the correlation coefficient between two

/// iterations (a negative criteria.epsilon makes criteria.maxcount the only termination criterion).

/// Default values are shown in the declaration above.

/// * inputMask: An optional mask to indicate valid values of inputImage.

/// * gaussFiltSize: An optional value indicating size of gaussian blur filter; (DEFAULT: 5)

///

/// The function estimates the optimum transformation (warpMatrix) with respect to ECC criterion

/// ([EP08](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_EP08)), that is

///

/// $$\texttt{warpMatrix} = \arg\max_{W} \texttt{ECC}(\texttt{templateImage}(x,y), \texttt{inputImage}(x',y'))$$

///

/// where

///

/// $$\begin{bmatrix} x' \\ y' \end{bmatrix} = W \cdot \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$$

///

/// (the equation holds with homogeneous coordinates for homography). It returns the final enhanced

/// correlation coefficient, that is the correlation coefficient between the template image and the

/// final warped input image. When a 3×3 matrix is given with motionType = 0, 1 or 2, the third

/// row is ignored.

///

/// Unlike findHomography and estimateRigidTransform, the function findTransformECC implements an

/// area-based alignment that builds on intensity similarities. In essence, the function updates the

/// initial transformation that roughly aligns the images. If this information is missing, the identity

/// warp (unity matrix) is used as an initialization. Note that if images undergo strong

/// displacements/rotations, an initial transformation that roughly aligns the images is necessary

/// (e.g., a simple euclidean/similarity transform that allows for the images showing the same image

/// content approximately). Use inverse warping in the second image to take an image close to the first

/// one, i.e. use the flag WARP_INVERSE_MAP with warpAffine or warpPerspective. See also the OpenCV

/// sample image_alignment.cpp that demonstrates the use of the function. Note that the function throws

/// an exception if the algorithm does not converge.

/// ## See also

/// computeECC, estimateAffine2D, estimateAffinePartial2D, findHomography
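///
/// A minimal sketch in plain Rust (no OpenCV calls) of two ideas used in this description: how a 2×3
/// warp matrix maps a template coordinate `(x, y)` to an input coordinate `(x', y')`, and a
/// correlation between two zero-mean intensity vectors. The helper names `warp_point` and `ecc` are
/// hypothetical, and the correlation formula is the usual definition of such a coefficient, assumed
/// here rather than taken from the OpenCV implementation.
///
/// ```rust
/// // Applies a 2x3 warp `w` to the point (x, y): [x', y']^T = W * [x, y, 1]^T.
/// fn warp_point(w: &[[f64; 3]; 2], x: f64, y: f64) -> (f64, f64) {
///     (
///         w[0][0] * x + w[0][1] * y + w[0][2],
///         w[1][0] * x + w[1][1] * y + w[1][2],
///     )
/// }
///
/// // Correlation of the zero-mean versions of `a` and `b`; `None` if it is undefined.
/// fn ecc(a: &[f64], b: &[f64]) -> Option<f64> {
///     if a.len() != b.len() || a.is_empty() {
///         return None;
///     }
///     let n = a.len() as f64;
///     let (ma, mb) = (a.iter().sum::<f64>() / n, b.iter().sum::<f64>() / n);
///     let (mut dot, mut na, mut nb) = (0.0_f64, 0.0_f64, 0.0_f64);
///     for (x, y) in a.iter().zip(b) {
///         let (dx, dy) = (x - ma, y - mb);
///         dot += dx * dy;
///         na += dx * dx;
///         nb += dy * dy;
///     }
///     if na == 0.0 || nb == 0.0 {
///         return None; // a constant image has no defined correlation
///     }
///     Some(dot / (na.sqrt() * nb.sqrt()))
/// }
/// ```
///
/// With a pure translation warp `[[1, 0, tx], [0, 1, ty]]`, `warp_point` simply adds `(tx, ty)`.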

#[inline]

pub fn find_transform_ecc(template_image: &impl ToInputArray, input_image: &impl ToInputArray, warp_matrix: &mut impl ToInputOutputArray, motion_type: i32, criteria: core::TermCriteria, input_mask: &impl ToInputArray, gauss_filt_size: i32) -> Result<f64> {

input_array_arg!(template_image);

input_array_arg!(input_image);

input_output_array_arg!(warp_matrix);

input_array_arg!(input_mask);

return_send!(via ocvrs_return);

unsafe { sys::cv_findTransformECC_const__InputArrayR_const__InputArrayR_const__InputOutputArrayR_int_TermCriteria_const__InputArrayR_int(template_image.as_raw__InputArray(), input_image.as_raw__InputArray(), warp_matrix.as_raw__InputOutputArray(), motion_type, &criteria, input_mask.as_raw__InputArray(), gauss_filt_size, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds an object on a back projection image.

///

/// ## Parameters

/// * probImage: Back projection of the object histogram. See calcBackProject for details.

/// * window: Initial search window.

/// * criteria: Stop criteria for the iterative search algorithm.

/// returns

/// Number of iterations CAMSHIFT took to converge.

/// The function implements the iterative object search algorithm. It takes the input back projection of

/// an object and the initial position. The mass center in window of the back projection image is

/// computed and the search window center shifts to the mass center. The procedure is repeated until the

/// specified number of iterations criteria.maxCount is done or until the window center shifts by less

/// than criteria.epsilon. The algorithm is used inside CamShift and, unlike CamShift, the search

/// window size and orientation do not change during the search. You can simply pass the output of

/// calcBackProject to this function. But better results can be obtained if you pre-filter the back

/// projection and remove the noise. For example, you can do this by retrieving connected components

/// with findContours, throwing away contours with small area (contourArea), and rendering the

/// remaining contours with drawContours.
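///
/// The iteration described above can be sketched in plain Rust on a small grid of weights. This is
/// an illustration under stated assumptions, not the OpenCV implementation: `Window` and
/// `mean_shift_sketch` are hypothetical names, the grid is assumed rectangular, and the shift is
/// rounded to whole pixels.
///
/// ```rust
/// #[derive(Clone, Copy, Debug, PartialEq)]
/// struct Window {
///     x: i32,
///     y: i32,
///     w: i32,
///     h: i32,
/// }
///
/// // Returns the final window and the number of iterations performed.
/// fn mean_shift_sketch(
///     prob: &[Vec<f32>],
///     mut win: Window,
///     max_count: usize,
///     epsilon: f64,
/// ) -> (Window, usize) {
///     let rows = prob.len() as i32;
///     let cols = prob.first().map_or(0, |r| r.len()) as i32;
///     let mut iterations = 0;
///     while iterations < max_count {
///         iterations += 1;
///         // Zeroth and first moments of the back projection inside the window.
///         let (mut m00, mut m10, mut m01) = (0.0_f64, 0.0_f64, 0.0_f64);
///         for y in win.y.max(0)..(win.y + win.h).min(rows) {
///             for x in win.x.max(0)..(win.x + win.w).min(cols) {
///                 let p = f64::from(prob[y as usize][x as usize]);
///                 m00 += p;
///                 m10 += p * f64::from(x);
///                 m01 += p * f64::from(y);
///             }
///         }
///         if m00 <= 0.0 {
///             break; // no mass under the window, nothing to follow
///         }
///         // Shift the window centre to the mass centre; the size stays fixed.
///         let cx = f64::from(win.x) + f64::from(win.w - 1) / 2.0;
///         let cy = f64::from(win.y) + f64::from(win.h - 1) / 2.0;
///         let dx = (m10 / m00 - cx).round() as i32;
///         let dy = (m01 / m00 - cy).round() as i32;
///         win.x += dx;
///         win.y += dy;
///         if f64::from(dx * dx + dy * dy).sqrt() < epsilon {
///             break; // the centre moved by less than epsilon
///         }
///     }
///     (win, iterations)
/// }
/// ```
///
/// An odd window size keeps the window centre on a pixel, which avoids rounding back and forth
/// between two positions in this simplified version.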

#[inline]

pub fn mean_shift(prob_image: &impl ToInputArray, window: &mut core::Rect, criteria: core::TermCriteria) -> Result<i32> {

input_array_arg!(prob_image);

return_send!(via ocvrs_return);

unsafe { sys::cv_meanShift_const__InputArrayR_RectR_TermCriteria(prob_image.as_raw__InputArray(), window, &criteria, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Read a .flo file

///

/// ## Parameters

/// * path: Path to the file to be loaded

///

/// The function readOpticalFlow loads a flow field from a file and returns it as a single matrix.

/// Resulting Mat has a type CV_32FC2 - floating-point, 2-channel. First channel corresponds to the

/// flow in the horizontal direction (u), second - vertical (v).

#[inline]

pub fn read_optical_flow(path: &str) -> Result<core::Mat> {

extern_container_arg!(path);

return_send!(via ocvrs_return);

unsafe { sys::cv_readOpticalFlow_const_StringR(path.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Mat::opencv_from_extern(ret) };

Ok(ret)

}

/// Write a .flo to disk

///

/// ## Parameters

/// * path: Path to the file to be written

/// * flow: Flow field to be stored

///

/// The function stores a flow field in a file, returns true on success, false otherwise.

/// The flow field must be a 2-channel, floating-point matrix (CV_32FC2). First channel corresponds

/// to the flow in the horizontal direction (u), second - vertical (v).
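///
/// For orientation, here is a minimal in-memory sketch of a `.flo` byte layout, assuming the
/// Middlebury convention: a 4-byte float tag, 32-bit width and height, then interleaved `(u, v)`
/// 32-bit floats in row-major order, all little-endian. The names `FLO_TAG`, `encode_flo`,
/// `decode_flo` and `le4` are hypothetical and the code does not call OpenCV.
///
/// ```rust
/// // Tag stored at the start of the file; its little-endian bytes spell "PIEH".
/// const FLO_TAG: f32 = 202021.25;
///
/// // Copies four bytes starting at `at` into an array.
/// fn le4(bytes: &[u8], at: usize) -> [u8; 4] {
///     [bytes[at], bytes[at + 1], bytes[at + 2], bytes[at + 3]]
/// }
///
/// // Serialises row-major (u, v) vectors; `None` if the length does not match the size.
/// fn encode_flo(width: u32, height: u32, flow: &[[f32; 2]]) -> Option<Vec<u8>> {
///     let count = (width as usize).checked_mul(height as usize)?;
///     if flow.len() != count || width > i32::MAX as u32 || height > i32::MAX as u32 {
///         return None;
///     }
///     let mut out = Vec::with_capacity(12 + count * 8);
///     out.extend_from_slice(&FLO_TAG.to_le_bytes());
///     out.extend_from_slice(&(width as i32).to_le_bytes());
///     out.extend_from_slice(&(height as i32).to_le_bytes());
///     for [u, v] in flow {
///         out.extend_from_slice(&u.to_le_bytes()); // horizontal component
///         out.extend_from_slice(&v.to_le_bytes()); // vertical component
///     }
///     Some(out)
/// }
///
/// // Parses the layout written by `encode_flo`; `None` on any inconsistency.
/// fn decode_flo(bytes: &[u8]) -> Option<(u32, u32, Vec<[f32; 2]>)> {
///     if bytes.len() < 12 || f32::from_le_bytes(le4(bytes, 0)) != FLO_TAG {
///         return None;
///     }
///     let width = i32::from_le_bytes(le4(bytes, 4));
///     let height = i32::from_le_bytes(le4(bytes, 8));
///     if width <= 0 || height <= 0 {
///         return None;
///     }
///     let count = (width as usize).checked_mul(height as usize)?;
///     let body = &bytes[12..];
///     if body.len() != count.checked_mul(8)? {
///         return None;
///     }
///     let flow = body
///         .chunks_exact(8)
///         .map(|c| [f32::from_le_bytes(le4(c, 0)), f32::from_le_bytes(le4(c, 4))])
///         .collect();
///     Some((width as u32, height as u32, flow))
/// }
/// ```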

#[inline]

pub fn write_optical_flow(path: &str, flow: &impl ToInputArray) -> Result<bool> {

extern_container_arg!(path);

input_array_arg!(flow);

return_send!(via ocvrs_return);

unsafe { sys::cv_writeOpticalFlow_const_StringR_const__InputArrayR(path.opencv_as_extern(), flow.as_raw__InputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Base class for background/foreground segmentation.

///

/// The class is only used to define the common interface for the whole family of background/foreground

/// segmentation algorithms.

pub struct BackgroundSubtractor {

ptr: *mut c_void,

}

opencv_type_boxed! { BackgroundSubtractor }

impl Drop for BackgroundSubtractor {

#[inline]

fn drop(&mut self) {

unsafe { sys::cv_BackgroundSubtractor_delete(self.as_raw_mut_BackgroundSubtractor()) };

}

}

unsafe impl Send for BackgroundSubtractor {}

/// Constant methods for [crate::video::BackgroundSubtractor]

pub trait BackgroundSubtractorTraitConst: core::AlgorithmTraitConst {

fn as_raw_BackgroundSubtractor(&self) -> *const c_void;

/// Computes a background image.

///

/// ## Parameters

/// * backgroundImage: The output background image.

///

///

/// Note: Sometimes the background image can be very blurry, as it contains the average background

/// statistics.

#[inline]

fn get_background_image(&self, background_image: &mut impl ToOutputArray) -> Result<()> {

output_array_arg!(background_image);

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractor_getBackgroundImage_const_const__OutputArrayR(self.as_raw_BackgroundSubtractor(), background_image.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Mutable methods for [crate::video::BackgroundSubtractor]

pub trait BackgroundSubtractorTrait: core::AlgorithmTrait + crate::video::BackgroundSubtractorTraitConst {

fn as_raw_mut_BackgroundSubtractor(&mut self) -> *mut c_void;

/// Computes a foreground mask.

///

/// ## Parameters

/// * image: Next video frame.

/// * fgmask: The output foreground mask as an 8-bit binary image.

/// * learningRate: A value between 0 and 1 that indicates how fast the background model is

/// learnt. A negative value makes the algorithm use an automatically chosen learning

/// rate. 0 means that the background model is not updated at all, 1 means that the background model

/// is completely reinitialized from the last frame.

///

/// ## C++ default parameters

/// * learning_rate: -1
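///
/// To make the two extremes of the learning rate concrete, here is a deliberately simplified
/// sketch that models the background as a per-pixel running average. This is an assumption made
/// for illustration only: the concrete subtractors keep richer models, the automatic (negative)
/// mode is not modelled, and `update_background` is a hypothetical helper.
///
/// ```rust
/// // Blends `frame` into `background` in place: B <- (1 - rate) * B + rate * I.
/// // `rate` is expected to lie in [0, 1].
/// fn update_background(background: &mut [f32], frame: &[f32], rate: f32) {
///     for (b, &i) in background.iter_mut().zip(frame) {
///         *b = (1.0 - rate) * *b + rate * i;
///     }
/// }
/// ```
///
/// With `rate = 0.0` the model is left untouched, and with `rate = 1.0` it is replaced by the
/// last frame, which mirrors the behaviour described for `learningRate`.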

#[inline]

fn apply(&mut self, image: &impl ToInputArray, fgmask: &mut impl ToOutputArray, learning_rate: f64) -> Result<()> {

input_array_arg!(image);

output_array_arg!(fgmask);

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractor_apply_const__InputArrayR_const__OutputArrayR_double(self.as_raw_mut_BackgroundSubtractor(), image.as_raw__InputArray(), fgmask.as_raw__OutputArray(), learning_rate, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes a foreground mask.

///

/// ## Parameters

/// * image: Next video frame.

/// * fgmask: The output foreground mask as an 8-bit binary image.

/// * learningRate: A value between 0 and 1 that indicates how fast the background model is

/// learnt. A negative value makes the algorithm use an automatically chosen learning

/// rate. 0 means that the background model is not updated at all, 1 means that the background model

/// is completely reinitialized from the last frame.

///

/// ## Note

/// This alternative version of [BackgroundSubtractorTrait::apply] function uses the following default values for its arguments:

/// * learning_rate: -1

#[inline]

fn apply_def(&mut self, image: &impl ToInputArray, fgmask: &mut impl ToOutputArray) -> Result<()> {

input_array_arg!(image);

output_array_arg!(fgmask);

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractor_apply_const__InputArrayR_const__OutputArrayR(self.as_raw_mut_BackgroundSubtractor(), image.as_raw__InputArray(), fgmask.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

impl std::fmt::Debug for BackgroundSubtractor {

#[inline]

fn fmt(&self, f: &mut std::fmt::Formatter) -> std::fmt::Result {

f.debug_struct("BackgroundSubtractor")

.finish()

}

}

boxed_cast_base! { BackgroundSubtractor, core::Algorithm, cv_BackgroundSubtractor_to_Algorithm }

boxed_cast_descendant! { BackgroundSubtractor, crate::video::BackgroundSubtractorKNN, cv_BackgroundSubtractor_to_BackgroundSubtractorKNN }

boxed_cast_descendant! { BackgroundSubtractor, crate::video::BackgroundSubtractorMOG2, cv_BackgroundSubtractor_to_BackgroundSubtractorMOG2 }

impl core::AlgorithmTraitConst for BackgroundSubtractor {

#[inline] fn as_raw_Algorithm(&self) -> *const c_void { self.as_raw() }

}

impl core::AlgorithmTrait for BackgroundSubtractor {

#[inline] fn as_raw_mut_Algorithm(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractor, core::AlgorithmTraitConst, as_raw_Algorithm, core::AlgorithmTrait, as_raw_mut_Algorithm }

impl crate::video::BackgroundSubtractorTraitConst for BackgroundSubtractor {

#[inline] fn as_raw_BackgroundSubtractor(&self) -> *const c_void { self.as_raw() }

}

impl crate::video::BackgroundSubtractorTrait for BackgroundSubtractor {

#[inline] fn as_raw_mut_BackgroundSubtractor(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractor, crate::video::BackgroundSubtractorTraitConst, as_raw_BackgroundSubtractor, crate::video::BackgroundSubtractorTrait, as_raw_mut_BackgroundSubtractor }

/// K-nearest neighbours - based Background/Foreground Segmentation Algorithm.

///

/// The class implements the K-nearest neighbours background subtraction described in [Zivkovic2006](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Zivkovic2006).

/// Very efficient if the number of foreground pixels is low.

pub struct BackgroundSubtractorKNN {

ptr: *mut c_void,

}

opencv_type_boxed! { BackgroundSubtractorKNN }

impl Drop for BackgroundSubtractorKNN {

#[inline]

fn drop(&mut self) {

unsafe { sys::cv_BackgroundSubtractorKNN_delete(self.as_raw_mut_BackgroundSubtractorKNN()) };

}

}

unsafe impl Send for BackgroundSubtractorKNN {}

/// Constant methods for [crate::video::BackgroundSubtractorKNN]

pub trait BackgroundSubtractorKNNTraitConst: crate::video::BackgroundSubtractorTraitConst {

fn as_raw_BackgroundSubtractorKNN(&self) -> *const c_void;

/// Returns the number of last frames that affect the background model

#[inline]

fn get_history(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getHistory_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the number of data samples in the background model

#[inline]

fn get_n_samples(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getNSamples_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the threshold on the squared distance between the pixel and the sample

///

/// The threshold on the squared distance between the pixel and the sample to decide whether a pixel is

/// close to a data sample.

#[inline]

fn get_dist2_threshold(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getDist2Threshold_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the number of neighbours, the k in the kNN.

///

/// K is the number of samples that need to be within dist2Threshold in order to decide that the

/// pixel is matching the kNN background model.
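///
/// The decision rule above can be sketched in plain Rust as follows. The helper
/// `matches_knn_model`, the three-channel pixel layout and the strict `<` comparison are
/// assumptions for illustration, not details taken from the OpenCV implementation.
///
/// ```rust
/// // True if at least `k` stored samples lie within `dist2_threshold` (squared distance) of `pixel`.
/// fn matches_knn_model(
///     pixel: [f32; 3],
///     samples: &[[f32; 3]],
///     dist2_threshold: f32,
///     k: usize,
/// ) -> bool {
///     let close = samples
///         .iter()
///         .filter(|s| {
///             // Squared Euclidean distance between the pixel and one stored sample.
///             let d2: f32 = s.iter().zip(&pixel).map(|(a, b)| (a - b) * (a - b)).sum();
///             d2 < dist2_threshold
///         })
///         .count();
///     close >= k
/// }
/// ```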

#[inline]

fn getk_nn_samples(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getkNNSamples_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the shadow detection flag

///

/// If true, the algorithm detects shadows and marks them. See createBackgroundSubtractorKNN for

/// details.

#[inline]

fn get_detect_shadows(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getDetectShadows_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the shadow value

///

/// Shadow value is the value used to mark shadows in the foreground mask. Default value is 127. Value 0

/// in the mask always means background, 255 means foreground.

#[inline]

fn get_shadow_value(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getShadowValue_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the shadow threshold

///

/// A shadow is detected if a pixel is a darker version of the background. The shadow threshold (Tau in

/// the paper) is a threshold defining how much darker the shadow can be. Tau = 0.5 means that if a pixel

/// is more than twice as dark as the background, it is not considered a shadow. See Prati, Mikic, Trivedi and Cucchiara,

/// *Detecting Moving Shadows...*, IEEE PAMI, 2003.

#[inline]

fn get_shadow_threshold(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_getShadowThreshold_const(self.as_raw_BackgroundSubtractorKNN(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Mutable methods for [crate::video::BackgroundSubtractorKNN]

pub trait BackgroundSubtractorKNNTrait: crate::video::BackgroundSubtractorKNNTraitConst + crate::video::BackgroundSubtractorTrait {

fn as_raw_mut_BackgroundSubtractorKNN(&mut self) -> *mut c_void;

/// Sets the number of last frames that affect the background model

#[inline]

fn set_history(&mut self, history: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setHistory_int(self.as_raw_mut_BackgroundSubtractorKNN(), history, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the number of data samples in the background model.

///

/// The model needs to be reinitialized to reserve memory.

#[inline]

fn set_n_samples(&mut self, _n_n: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setNSamples_int(self.as_raw_mut_BackgroundSubtractorKNN(), _n_n, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the threshold on the squared distance

#[inline]

fn set_dist2_threshold(&mut self, _dist2_threshold: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setDist2Threshold_double(self.as_raw_mut_BackgroundSubtractorKNN(), _dist2_threshold, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the k in the kNN. How many nearest neighbours need to match.

#[inline]

fn setk_nn_samples(&mut self, _nk_nn: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setkNNSamples_int(self.as_raw_mut_BackgroundSubtractorKNN(), _nk_nn, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Enables or disables shadow detection

#[inline]

fn set_detect_shadows(&mut self, detect_shadows: bool) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setDetectShadows_bool(self.as_raw_mut_BackgroundSubtractorKNN(), detect_shadows, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the shadow value

#[inline]

fn set_shadow_value(&mut self, value: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setShadowValue_int(self.as_raw_mut_BackgroundSubtractorKNN(), value, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the shadow threshold
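///
/// The threshold (Tau) bounds how much darker than the background a pixel may be while still
/// being treated as shadow. A minimal grayscale sketch of that brightness test follows; it is an
/// assumption-laden illustration (a real detector would typically also compare colour), and
/// `is_shadow_candidate` is a hypothetical helper.
///
/// ```rust
/// // True if `pixel` is darker than `background` but no darker than `tau * background`.
/// fn is_shadow_candidate(pixel: f32, background: f32, tau: f32) -> bool {
///     if background <= 0.0 {
///         return false; // nothing can be "a darker version" of a black background
///     }
///     let ratio = pixel / background;
///     ratio >= tau && ratio < 1.0
/// }
/// ```
///
/// With `tau = 0.5`, a pixel at 60% of the background brightness qualifies, while one at 40%
/// is too dark to count as shadow.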

#[inline]

fn set_shadow_threshold(&mut self, threshold: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorKNN_setShadowThreshold_double(self.as_raw_mut_BackgroundSubtractorKNN(), threshold, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

impl std::fmt::Debug for BackgroundSubtractorKNN {

#[inline]

fn fmt(&self, f: &mut std::fmt::Formatter) -> std::fmt::Result {

f.debug_struct("BackgroundSubtractorKNN")

.finish()

}

}

boxed_cast_base! { BackgroundSubtractorKNN, core::Algorithm, cv_BackgroundSubtractorKNN_to_Algorithm }

boxed_cast_base! { BackgroundSubtractorKNN, crate::video::BackgroundSubtractor, cv_BackgroundSubtractorKNN_to_BackgroundSubtractor }

impl core::AlgorithmTraitConst for BackgroundSubtractorKNN {

#[inline] fn as_raw_Algorithm(&self) -> *const c_void { self.as_raw() }

}

impl core::AlgorithmTrait for BackgroundSubtractorKNN {

#[inline] fn as_raw_mut_Algorithm(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractorKNN, core::AlgorithmTraitConst, as_raw_Algorithm, core::AlgorithmTrait, as_raw_mut_Algorithm }

impl crate::video::BackgroundSubtractorTraitConst for BackgroundSubtractorKNN {

#[inline] fn as_raw_BackgroundSubtractor(&self) -> *const c_void { self.as_raw() }

}

impl crate::video::BackgroundSubtractorTrait for BackgroundSubtractorKNN {

#[inline] fn as_raw_mut_BackgroundSubtractor(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractorKNN, crate::video::BackgroundSubtractorTraitConst, as_raw_BackgroundSubtractor, crate::video::BackgroundSubtractorTrait, as_raw_mut_BackgroundSubtractor }

impl crate::video::BackgroundSubtractorKNNTraitConst for BackgroundSubtractorKNN {

#[inline] fn as_raw_BackgroundSubtractorKNN(&self) -> *const c_void { self.as_raw() }

}

impl crate::video::BackgroundSubtractorKNNTrait for BackgroundSubtractorKNN {

#[inline] fn as_raw_mut_BackgroundSubtractorKNN(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractorKNN, crate::video::BackgroundSubtractorKNNTraitConst, as_raw_BackgroundSubtractorKNN, crate::video::BackgroundSubtractorKNNTrait, as_raw_mut_BackgroundSubtractorKNN }

/// Gaussian Mixture-based Background/Foreground Segmentation Algorithm.

///

/// The class implements the Gaussian mixture model background subtraction described in [Zivkovic2004](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Zivkovic2004)

/// and [Zivkovic2006](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Zivkovic2006).

pub struct BackgroundSubtractorMOG2 {

ptr: *mut c_void,

}

opencv_type_boxed! { BackgroundSubtractorMOG2 }

impl Drop for BackgroundSubtractorMOG2 {

#[inline]

fn drop(&mut self) {

unsafe { sys::cv_BackgroundSubtractorMOG2_delete(self.as_raw_mut_BackgroundSubtractorMOG2()) };

}

}

unsafe impl Send for BackgroundSubtractorMOG2 {}

/// Constant methods for [crate::video::BackgroundSubtractorMOG2]

pub trait BackgroundSubtractorMOG2TraitConst: crate::video::BackgroundSubtractorTraitConst {

fn as_raw_BackgroundSubtractorMOG2(&self) -> *const c_void;

/// Returns the number of last frames that affect the background model

#[inline]

fn get_history(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getHistory_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the number of gaussian components in the background model

#[inline]

fn get_n_mixtures(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getNMixtures_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the "background ratio" parameter of the algorithm

///

/// If a foreground pixel keeps semi-constant value for about backgroundRatio\*history frames, it's

/// considered background and added to the model as a center of a new component. It corresponds to TB

/// parameter in the paper.

#[inline]

fn get_background_ratio(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getBackgroundRatio_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the variance threshold for the pixel-model match

///

/// The main threshold on the squared Mahalanobis distance to decide if the sample is well described by

/// the background model or not. Related to Cthr from the paper.

#[inline]

fn get_var_threshold(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getVarThreshold_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the variance threshold for the pixel-model match used for new mixture component generation

///

/// Threshold for the squared Mahalanobis distance that helps decide when a sample is close to the

/// existing components (corresponds to Tg in the paper). If a pixel is not close to any component, it

/// is considered foreground or added as a new component. 3 sigma =\> Tg=3\*3=9 is default. A smaller Tg

/// value generates more components. A higher Tg value may result in a small number of components but

/// they can grow too large.
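///
/// As a worked illustration of the 3-sigma rule, here is a scalar sketch of the squared
/// Mahalanobis distance test. Real components are multi-channel, so the single-channel
/// `(mean, variance)` pairs and the helper names `mahalanobis2` and `is_close_to_any` are
/// assumptions for illustration only.
///
/// ```rust
/// // Squared Mahalanobis distance of a scalar sample from a component (mean, variance).
/// fn mahalanobis2(sample: f64, mean: f64, variance: f64) -> f64 {
///     let d = sample - mean;
///     d * d / variance
/// }
///
/// // True if the sample is within the generation threshold `tg` of any component.
/// fn is_close_to_any(sample: f64, components: &[(f64, f64)], tg: f64) -> bool {
///     components
///         .iter()
///         .any(|&(mean, variance)| mahalanobis2(sample, mean, variance) < tg)
/// }
/// ```
///
/// For a component with mean 100 and variance 4 (sigma = 2), a sample of 105 is 2.5 sigma away
/// (squared distance 6.25, below Tg = 9), while 107 is 3.5 sigma away (12.25, above Tg).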

#[inline]

fn get_var_threshold_gen(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getVarThresholdGen_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the initial variance of each gaussian component

#[inline]

fn get_var_init(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getVarInit_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_var_min(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getVarMin_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_var_max(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getVarMax_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the complexity reduction threshold

///

/// This parameter defines the number of samples needed to accept that a component exists. CT=0.05

/// is the default value for all the samples. By setting CT=0 you get an algorithm very similar to the

/// standard Stauffer&Grimson algorithm.

#[inline]

fn get_complexity_reduction_threshold(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getComplexityReductionThreshold_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the shadow detection flag

///

/// If true, the algorithm detects shadows and marks them. See createBackgroundSubtractorMOG2 for

/// details.

#[inline]

fn get_detect_shadows(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getDetectShadows_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the shadow value

///

/// Shadow value is the value used to mark shadows in the foreground mask. Default value is 127. Value 0

/// in the mask always means background, 255 means foreground.

#[inline]

fn get_shadow_value(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getShadowValue_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns the shadow threshold

///

/// A shadow is detected if a pixel is a darker version of the background. The shadow threshold (Tau in

/// the paper) is a threshold defining how much darker the shadow can be. Tau = 0.5 means that if a pixel

/// is more than twice as dark as the background, it is not considered a shadow. See Prati, Mikic, Trivedi and Cucchiara,

/// *Detecting Moving Shadows...*, IEEE PAMI, 2003.

#[inline]

fn get_shadow_threshold(&self) -> Result<f64> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_getShadowThreshold_const(self.as_raw_BackgroundSubtractorMOG2(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Mutable methods for [crate::video::BackgroundSubtractorMOG2]

pub trait BackgroundSubtractorMOG2Trait: crate::video::BackgroundSubtractorMOG2TraitConst + crate::video::BackgroundSubtractorTrait {

fn as_raw_mut_BackgroundSubtractorMOG2(&mut self) -> *mut c_void;

/// Sets the number of last frames that affect the background model

#[inline]

fn set_history(&mut self, history: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setHistory_int(self.as_raw_mut_BackgroundSubtractorMOG2(), history, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the number of gaussian components in the background model.

///

/// The model needs to be reinitialized to reserve memory.

#[inline]

fn set_n_mixtures(&mut self, nmixtures: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setNMixtures_int(self.as_raw_mut_BackgroundSubtractorMOG2(), nmixtures, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the "background ratio" parameter of the algorithm

#[inline]

fn set_background_ratio(&mut self, ratio: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setBackgroundRatio_double(self.as_raw_mut_BackgroundSubtractorMOG2(), ratio, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the variance threshold for the pixel-model match

#[inline]

fn set_var_threshold(&mut self, var_threshold: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setVarThreshold_double(self.as_raw_mut_BackgroundSubtractorMOG2(), var_threshold, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the variance threshold for the pixel-model match used for new mixture component generation

#[inline]

fn set_var_threshold_gen(&mut self, var_threshold_gen: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setVarThresholdGen_double(self.as_raw_mut_BackgroundSubtractorMOG2(), var_threshold_gen, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the initial variance of each Gaussian component

#[inline]

fn set_var_init(&mut self, var_init: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setVarInit_double(self.as_raw_mut_BackgroundSubtractorMOG2(), var_init, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_var_min(&mut self, var_min: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setVarMin_double(self.as_raw_mut_BackgroundSubtractorMOG2(), var_min, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_var_max(&mut self, var_max: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setVarMax_double(self.as_raw_mut_BackgroundSubtractorMOG2(), var_max, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the complexity reduction threshold

#[inline]

fn set_complexity_reduction_threshold(&mut self, ct: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setComplexityReductionThreshold_double(self.as_raw_mut_BackgroundSubtractorMOG2(), ct, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Enables or disables shadow detection

#[inline]

fn set_detect_shadows(&mut self, detect_shadows: bool) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setDetectShadows_bool(self.as_raw_mut_BackgroundSubtractorMOG2(), detect_shadows, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the shadow value

#[inline]

fn set_shadow_value(&mut self, value: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setShadowValue_int(self.as_raw_mut_BackgroundSubtractorMOG2(), value, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the shadow threshold

#[inline]

fn set_shadow_threshold(&mut self, threshold: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_setShadowThreshold_double(self.as_raw_mut_BackgroundSubtractorMOG2(), threshold, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes a foreground mask.

///

/// ## Parameters

/// * image: Next video frame. Floating point frame will be used without scaling and should be in range [0, 255].

/// * fgmask: The output foreground mask as an 8-bit binary image.

/// * learningRate: A value between 0 and 1 that indicates how fast the background model is

/// learnt. A negative value makes the algorithm use an automatically chosen learning

/// rate. 0 means that the background model is not updated at all, 1 means that the background model

/// is completely reinitialized from the last frame.
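///
/// The two endpoint values of the learning rate can be pictured with a plain running average.
/// This is a simplified sketch and not the per-pixel mixture update that MOG2 performs;
/// `blend_background` and its parameters are hypothetical names used only for illustration.
///
/// ```rust
/// // Hypothetical helper: blends one background value with one frame value.
/// fn blend_background(background: f64, frame: f64, learning_rate: f64) -> Option<f64> {
///     // Only the documented [0, 1] range is modelled here; a negative
///     // ("automatically chosen") rate is outside this sketch and yields None.
///     if !(0.0..=1.0).contains(&learning_rate) {
///         return None;
///     }
///     // 0 keeps the old background unchanged, 1 replaces it with the frame.
///     Some((1.0 - learning_rate) * background + learning_rate * frame)
/// }
/// ```
///
/// In this sketch a rate of `0.0` returns the old background value and a rate of `1.0` returns
/// the frame value, matching the two extremes described for `learningRate`.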

///

/// ## C++ default parameters

/// * learning_rate: -1

#[inline]

fn apply(&mut self, image: &impl ToInputArray, fgmask: &mut impl ToOutputArray, learning_rate: f64) -> Result<()> {

input_array_arg!(image);

output_array_arg!(fgmask);

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_apply_const__InputArrayR_const__OutputArrayR_double(self.as_raw_mut_BackgroundSubtractorMOG2(), image.as_raw__InputArray(), fgmask.as_raw__OutputArray(), learning_rate, ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes a foreground mask.

///

/// ## Parameters

/// * image: Next video frame. Floating point frame will be used without scaling and should be in range [0, 255].

/// * fgmask: The output foreground mask as an 8-bit binary image.

/// * learningRate: A value between 0 and 1 that indicates how fast the background model is

/// learnt. A negative value makes the algorithm use an automatically chosen learning

/// rate. 0 means that the background model is not updated at all, 1 means that the background model

/// is completely reinitialized from the last frame.

///

/// ## Note

/// This alternative version of [BackgroundSubtractorMOG2Trait::apply] function uses the following default values for its arguments:

/// * learning_rate: -1

#[inline]

fn apply_def(&mut self, image: &impl ToInputArray, fgmask: &mut impl ToOutputArray) -> Result<()> {

input_array_arg!(image);

output_array_arg!(fgmask);

return_send!(via ocvrs_return);

unsafe { sys::cv_BackgroundSubtractorMOG2_apply_const__InputArrayR_const__OutputArrayR(self.as_raw_mut_BackgroundSubtractorMOG2(), image.as_raw__InputArray(), fgmask.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

impl std::fmt::Debug for BackgroundSubtractorMOG2 {

#[inline]

fn fmt(&self, f: &mut std::fmt::Formatter) -> std::fmt::Result {

f.debug_struct("BackgroundSubtractorMOG2")

.finish()

}

}

boxed_cast_base! { BackgroundSubtractorMOG2, core::Algorithm, cv_BackgroundSubtractorMOG2_to_Algorithm }

boxed_cast_base! { BackgroundSubtractorMOG2, crate::video::BackgroundSubtractor, cv_BackgroundSubtractorMOG2_to_BackgroundSubtractor }

impl core::AlgorithmTraitConst for BackgroundSubtractorMOG2 {

#[inline] fn as_raw_Algorithm(&self) -> *const c_void { self.as_raw() }

}

impl core::AlgorithmTrait for BackgroundSubtractorMOG2 {

#[inline] fn as_raw_mut_Algorithm(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractorMOG2, core::AlgorithmTraitConst, as_raw_Algorithm, core::AlgorithmTrait, as_raw_mut_Algorithm }

impl crate::video::BackgroundSubtractorTraitConst for BackgroundSubtractorMOG2 {

#[inline] fn as_raw_BackgroundSubtractor(&self) -> *const c_void { self.as_raw() }

}

impl crate::video::BackgroundSubtractorTrait for BackgroundSubtractorMOG2 {

#[inline] fn as_raw_mut_BackgroundSubtractor(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractorMOG2, crate::video::BackgroundSubtractorTraitConst, as_raw_BackgroundSubtractor, crate::video::BackgroundSubtractorTrait, as_raw_mut_BackgroundSubtractor }

impl crate::video::BackgroundSubtractorMOG2TraitConst for BackgroundSubtractorMOG2 {

#[inline] fn as_raw_BackgroundSubtractorMOG2(&self) -> *const c_void { self.as_raw() }

}

impl crate::video::BackgroundSubtractorMOG2Trait for BackgroundSubtractorMOG2 {

#[inline] fn as_raw_mut_BackgroundSubtractorMOG2(&mut self) -> *mut c_void { self.as_raw_mut() }

}

boxed_ref! { BackgroundSubtractorMOG2, crate::video::BackgroundSubtractorMOG2TraitConst, as_raw_BackgroundSubtractorMOG2, crate::video::BackgroundSubtractorMOG2Trait, as_raw_mut_BackgroundSubtractorMOG2 }

/// DIS optical flow algorithm.

///

/// This class implements the Dense Inverse Search (DIS) optical flow algorithm. More

/// details about the algorithm can be found in [Kroeger2016](https://docs.opencv.org/4.11.0/d0/de3/citelist.html#CITEREF_Kroeger2016). It includes three presets with preselected

/// parameters that provide a reasonable trade-off between speed and quality. However, even the slowest preset is

/// still relatively fast; use DeepFlow if you need better quality and don't care about speed.

///

/// This implementation includes several additional features compared to the algorithm described in the paper,