#![allow(

unused_parens,

clippy::excessive_precision,

clippy::missing_safety_doc,

clippy::should_implement_trait,

clippy::too_many_arguments,

clippy::unused_unit,

clippy::let_unit_value,

clippy::derive_partial_eq_without_eq,

)]

//! # Object Detection

//! # Cascade Classifier for Object Detection

//!

//! The object detector described below was initially proposed by Paul Viola [Viola01](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Viola01) and

//! improved by Rainer Lienhart [Lienhart02](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Lienhart02).

//!

//! First, a classifier (namely a *cascade of boosted classifiers working with haar-like features*) is

//! trained with a few hundred sample views of a particular object (e.g., a face or a car), called

//! positive examples, that are scaled to the same size (say, 20x20), and with negative examples, which are arbitrary

//! images of the same size.

//!

//! After a classifier is trained, it can be applied to a region of interest (of the same size as used

//! during the training) in an input image. The classifier outputs a "1" if the region is likely to show

//! the object (e.g., a face or a car), and "0" otherwise. To search for the object in the whole image, one can

//! move the search window across the image and check every location using the classifier. The

//! classifier is designed so that it can be easily "resized" to find objects of interest at

//! different sizes, which is more efficient than resizing the image itself. So, to find an

//! object of unknown size in the image, the scan procedure should be repeated several times at different

//! scales.
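//!
//! The scan schedule can be pictured with the minimal sketch below. It is not OpenCV's
//! implementation: `Window`, `window_positions` and the fixed `step` are illustrative
//! assumptions. The window grows by `scale_factor` per level (a role similar to the
//! `scale_factor` argument of `detect_multi_scale`) until it no longer fits in the image,
//! and at every level it is slid across all positions that fit.
//!
//! ```rust
//! // A candidate search window: top-left corner and size, in pixels.
//! #[derive(Debug, Clone, Copy, PartialEq)]
//! struct Window { x: u32, y: u32, w: u32, h: u32 }
//!
//! // Lists every window for a classifier trained on `base` = (w, h) pixels,
//! // growing the window by `scale_factor` per level until it no longer fits
//! // in an image of `img` = (w, h) pixels. `step` is the shift between
//! // neighbouring positions at the base scale and grows with the window.
//! fn window_positions(img: (u32, u32), base: (u32, u32), scale_factor: f64, step: u32) -> Vec<Window> {
//!     assert!(scale_factor > 1.0 && step > 0 && base.0 > 0 && base.1 > 0);
//!     let mut out = Vec::new();
//!     let mut scale = 1.0_f64;
//!     loop {
//!         let w = (base.0 as f64 * scale).round() as u32;
//!         let h = (base.1 as f64 * scale).round() as u32;
//!         if w > img.0 || h > img.1 {
//!             break; // the window no longer fits: stop scaling
//!         }
//!         let s = ((step as f64 * scale).round() as u32).max(1);
//!         let mut y = 0;
//!         while y + h <= img.1 {
//!             let mut x = 0;
//!             while x + w <= img.0 {
//!                 out.push(Window { x, y, w, h });
//!                 x += s;
//!             }
//!             y += s;
//!         }
//!         scale *= scale_factor;
//!     }
//!     out
//! }
//! ```
//!
//! For a 24x24 image, a 20x20 base window, `scale_factor = 1.1` and `step = 2`, this yields
//! 9 windows at 20x20, 4 at 22x22 and 1 at 24x24; the next size (27x27) no longer fits.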

//!

//! The word "cascade" in the classifier name means that the resultant classifier consists of several

//! simpler classifiers (*stages*) that are applied subsequently to a region of interest until at some

//! stage the candidate is rejected or all the stages are passed. The word "boosted" means that the

//! classifiers at every stage of the cascade are complex themselves and they are built out of basic

//! classifiers using one of four different boosting techniques (weighted voting). Currently Discrete

//! Adaboost, Real Adaboost, Gentle Adaboost and Logitboost are supported. The basic classifiers are

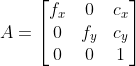

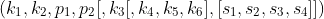

//! decision-tree classifiers with at least 2 leaves. Haar-like features are the input to the basic

//! classifiers, and are calculated as described below. The current algorithm uses the following

//! Haar-like features:

//!

//!

//!
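//! The stage-by-stage evaluation described above can be sketched as follows. This is an
//! illustration under simplifying assumptions, not OpenCV's evaluator: every basic classifier
//! is taken to be a decision stump (a two-leaf tree) over one precomputed feature response,
//! and `Stump`, `Stage` and `cascade_accepts` are hypothetical names.
//!
//! ```rust
//! // A weak classifier: a decision stump (a tree with two leaves) that looks at
//! // one feature response and votes `left` or `right`.
//! struct Stump { feature: usize, threshold: f64, left: f64, right: f64 }
//!
//! // A boosted stage: the weighted votes of its stumps are summed and compared
//! // against the stage threshold.
//! struct Stage { stumps: Vec<Stump>, threshold: f64 }
//!
//! // Returns true ("1") only if the window passes every stage; `features` holds
//! // the feature responses already computed for the current window.
//! fn cascade_accepts(stages: &[Stage], features: &[f64]) -> bool {
//!     for stage in stages {
//!         let vote: f64 = stage
//!             .stumps
//!             .iter()
//!             .map(|s| if features[s.feature] < s.threshold { s.left } else { s.right })
//!             .sum();
//!         if vote < stage.threshold {
//!             return false; // rejected: later stages are never evaluated
//!         }
//!     }
//!     true
//! }
//! ```
//!
//! Returning as soon as one stage fails is what keeps the cascade cheap: later, more complex
//! stages only run on windows that survived all the earlier ones.
//!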

//! The feature used in a particular classifier is specified by its shape (1a, 2b etc.), position within

//! the region of interest and the scale (this scale is not the same as the scale used at the detection

//! stage, though these two scales are multiplied). For example, in the case of the third line feature

//! (2c) the response is calculated as the difference between the sum of image pixels under the

//! rectangle covering the whole feature (including the two white stripes and the black stripe in the

//! middle) and the sum of the image pixels under the black stripe multiplied by 3 in order to

//! compensate for the differences in the size of areas. The sums of pixel values over rectangular

//! regions are calculated rapidly using integral images (see the integral function description).
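//!
//! A minimal sketch of the integral-image idea, assuming a grayscale image stored as rows of
//! `u32`: once the integral image is built, any rectangle sum needs only four lookups, so a
//! feature costs the same to evaluate at any scale. The helpers `integral_image`, `rect_sum`
//! and `line_feature` are hypothetical (OpenCV provides its own `integral` function), and the
//! three stripes are assumed to lie side by side horizontally.
//!
//! ```rust
//! // Integral image with a zero top row and left column:
//! // ii[y][x] = sum of img[r][c] over all r < y and c < x.
//! fn integral_image(img: &[Vec<u32>]) -> Vec<Vec<u64>> {
//!     let h = img.len();
//!     let w = img.first().map_or(0, |row| row.len());
//!     let mut ii = vec![vec![0u64; w + 1]; h + 1];
//!     for y in 0..h {
//!         for x in 0..w {
//!             ii[y + 1][x + 1] = img[y][x] as u64 + ii[y][x + 1] + ii[y + 1][x] - ii[y][x];
//!         }
//!     }
//!     ii
//! }
//!
//! // Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups,
//! // whatever the rectangle size.
//! fn rect_sum(ii: &[Vec<u64>], x: usize, y: usize, w: usize, h: usize) -> i64 {
//!     (ii[y + h][x + w] + ii[y][x] - ii[y][x + w] - ii[y + h][x]) as i64
//! }
//!
//! // Three-stripe line feature (white | black | white), each stripe `sw` pixels
//! // wide and `h` pixels tall: sum over the whole feature minus 3 x the sum
//! // over the black middle stripe.
//! fn line_feature(ii: &[Vec<u64>], x: usize, y: usize, sw: usize, h: usize) -> i64 {
//!     let whole = rect_sum(ii, x, y, 3 * sw, h);
//!     let black = rect_sum(ii, x + sw, y, sw, h);
//!     whole - 3 * black
//! }
//! ```
//!
//! On a uniform patch the response is 0, which is what the factor of 3 compensates for: the
//! whole feature covers three times the area of the black stripe.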

//!

//! Check the corresponding tutorial (tutorial_cascade_classifier) for more details.

//!

//! The following reference is for the detection part only. There is a separate application called

//! opencv_traincascade that can train a cascade of boosted classifiers from a set of samples.

//!

//!

//! Note: In the new C++ interface it is also possible to use LBP (local binary pattern) features in

//! addition to Haar-like features.

//!

//! [Viola01] Paul Viola and Michael J. Jones. Rapid Object Detection using a Boosted Cascade of Simple

//! Features. IEEE CVPR, 2001. The paper is available online at

//! <https://github.com/SvHey/thesis/blob/master/Literature/ObjectDetection/violaJones_CVPR2001.pdf>

//!

//! # HOG (Histogram of Oriented Gradients) descriptor and object detector

//! # QRCode detection and encoding

//! # DNN-based face detection and recognition

//! Check the corresponding tutorial (tutorial_dnn_face) for more details.

//! # Common functions and classes

//! # ArUco markers and boards detection for robust camera pose estimation

//! ArUco Marker Detection

//! Square fiducial markers (also known as Augmented Reality Markers) are useful for easy,

//! fast and robust camera pose estimation.

//!

//! The main functionality of the ArucoDetector class is the detection of markers in an image. If the markers are grouped

//! as a board, you can try to recover the missing markers with ArucoDetector::refineDetectedMarkers().

//! ArUco markers can also be used for advanced chessboard corner finding. To do this, group the markers in a

//! CharucoBoard and find the corners of the chessboard with CharucoDetector::detectBoard().

//!

//! The implementation is based on the ArUco Library by R. Muñoz-Salinas and S. Garrido-Jurado [Aruco2014](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Aruco2014).

//!

//! Markers can also be detected based on the AprilTag 2 [wang2016iros](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_wang2016iros) fiducial detection method.

//! ## See also

//! [Aruco2014](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Aruco2014)

//! This code was originally developed by Sergio Garrido-Jurado as a project

//! for Google Summer of Code 2015 (GSoC 15).

use crate::{mod_prelude::*, core, sys, types};

pub mod prelude {

pub use { super::SimilarRectsTraitConst, super::SimilarRectsTrait, super::BaseCascadeClassifier_MaskGeneratorConst, super::BaseCascadeClassifier_MaskGenerator, super::BaseCascadeClassifierConst, super::BaseCascadeClassifier, super::CascadeClassifierTraitConst, super::CascadeClassifierTrait, super::DetectionROITraitConst, super::DetectionROITrait, super::HOGDescriptorTraitConst, super::HOGDescriptorTrait, super::QRCodeEncoderConst, super::QRCodeEncoder, super::QRCodeDetectorTraitConst, super::QRCodeDetectorTrait, super::DetectionBasedTracker_ParametersTraitConst, super::DetectionBasedTracker_ParametersTrait, super::DetectionBasedTracker_IDetectorConst, super::DetectionBasedTracker_IDetector, super::DetectionBasedTracker_ExtObjectTraitConst, super::DetectionBasedTracker_ExtObjectTrait, super::DetectionBasedTrackerTraitConst, super::DetectionBasedTrackerTrait, super::FaceDetectorYNConst, super::FaceDetectorYN, super::FaceRecognizerSFConst, super::FaceRecognizerSF, super::DictionaryTraitConst, super::DictionaryTrait, super::BoardTraitConst, super::BoardTrait, super::GridBoardTraitConst, super::GridBoardTrait, super::CharucoBoardTraitConst, super::CharucoBoardTrait, super::DetectorParametersTraitConst, super::DetectorParametersTrait, super::ArucoDetectorTraitConst, super::ArucoDetectorTrait, super::CharucoParametersTraitConst, super::CharucoParametersTrait, super::CharucoDetectorTraitConst, super::CharucoDetectorTrait };

}

pub const CASCADE_DO_CANNY_PRUNING: i32 = 1;

pub const CASCADE_DO_ROUGH_SEARCH: i32 = 8;

pub const CASCADE_FIND_BIGGEST_OBJECT: i32 = 4;

pub const CASCADE_SCALE_IMAGE: i32 = 2;

/// Tag and corners detection based on the AprilTag 2 approach [wang2016iros](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_wang2016iros)

pub const CORNER_REFINE_APRILTAG: i32 = 3;

/// ArUco approach and refine the corners locations using the contour-points line fitting

pub const CORNER_REFINE_CONTOUR: i32 = 2;

/// Tag and corners detection based on the ArUco approach

pub const CORNER_REFINE_NONE: i32 = 0;

/// ArUco approach and refine the corners locations using corner subpixel accuracy

pub const CORNER_REFINE_SUBPIX: i32 = 1;

/// 4x4 bits, minimum hamming distance between any two codes = 3, 100 codes

pub const DICT_4X4_100: i32 = 1;

/// 4x4 bits, minimum hamming distance between any two codes = 2, 1000 codes

pub const DICT_4X4_1000: i32 = 3;

/// 4x4 bits, minimum hamming distance between any two codes = 3, 250 codes

pub const DICT_4X4_250: i32 = 2;

/// 4x4 bits, minimum hamming distance between any two codes = 4, 50 codes

pub const DICT_4X4_50: i32 = 0;

/// 5x5 bits, minimum hamming distance between any two codes = 7, 100 codes

pub const DICT_5X5_100: i32 = 5;

/// 5x5 bits, minimum hamming distance between any two codes = 5, 1000 codes

pub const DICT_5X5_1000: i32 = 7;

/// 5x5 bits, minimum hamming distance between any two codes = 6, 250 codes

pub const DICT_5X5_250: i32 = 6;

/// 5x5 bits, minimum hamming distance between any two codes = 8, 50 codes

pub const DICT_5X5_50: i32 = 4;

/// 6x6 bits, minimum hamming distance between any two codes = 12, 100 codes

pub const DICT_6X6_100: i32 = 9;

/// 6x6 bits, minimum hamming distance between any two codes = 9, 1000 codes

pub const DICT_6X6_1000: i32 = 11;

/// 6x6 bits, minimum hamming distance between any two codes = 11, 250 codes

pub const DICT_6X6_250: i32 = 10;

/// 6x6 bits, minimum hamming distance between any two codes = 13, 50 codes

pub const DICT_6X6_50: i32 = 8;

/// 7x7 bits, minimum hamming distance between any two codes = 18, 100 codes

pub const DICT_7X7_100: i32 = 13;

/// 7x7 bits, minimum hamming distance between any two codes = 14, 1000 codes

pub const DICT_7X7_1000: i32 = 15;

/// 7x7 bits, minimum hamming distance between any two codes = 17, 250 codes

pub const DICT_7X7_250: i32 = 14;

/// 7x7 bits, minimum hamming distance between any two codes = 19, 50 codes

pub const DICT_7X7_50: i32 = 12;

/// 4x4 bits, minimum hamming distance between any two codes = 5, 30 codes

pub const DICT_APRILTAG_16h5: i32 = 17;

/// 5x5 bits, minimum hamming distance between any two codes = 9, 35 codes

pub const DICT_APRILTAG_25h9: i32 = 18;

/// 6x6 bits, minimum hamming distance between any two codes = 10, 2320 codes

pub const DICT_APRILTAG_36h10: i32 = 19;

/// 6x6 bits, minimum hamming distance between any two codes = 11, 587 codes

pub const DICT_APRILTAG_36h11: i32 = 20;

/// 5x5 bits, minimum hamming distance between any two codes = 3, 1024 codes

pub const DICT_ARUCO_ORIGINAL: i32 = 16;

/// Default nlevels value.

pub const HOGDescriptor_DEFAULT_NLEVELS: i32 = 64;

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum CornerRefineMethod {

/// Tag and corners detection based on the ArUco approach

CORNER_REFINE_NONE = 0,

/// ArUco approach and refine the corners locations using corner subpixel accuracy

CORNER_REFINE_SUBPIX = 1,

/// ArUco approach and refine the corners locations using the contour-points line fitting

CORNER_REFINE_CONTOUR = 2,

/// Tag and corners detection based on the AprilTag 2 approach [wang2016iros](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_wang2016iros)

CORNER_REFINE_APRILTAG = 3,

}

opencv_type_enum! { crate::objdetect::CornerRefineMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum DetectionBasedTracker_ObjectStatus {

DETECTED_NOT_SHOWN_YET = 0,

DETECTED = 1,

DETECTED_TEMPORARY_LOST = 2,

WRONG_OBJECT = 3,

}

opencv_type_enum! { crate::objdetect::DetectionBasedTracker_ObjectStatus }

/// Definition of distance used for calculating the distance between two face features
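///
/// As an illustration only, the two distance types can be sketched as below. The sketch
/// assumes both feature vectors are first scaled to unit length; `FR_COSINE` then corresponds
/// to a cosine similarity (higher means more alike) and `FR_NORM_L2` to a Euclidean distance
/// (lower means more alike). The function names are hypothetical.
///
/// ```rust
/// // Scales a feature vector to unit L2 length (an all-zero vector is returned as is).
/// fn normalize(v: &[f64]) -> Vec<f64> {
///     let n = v.iter().map(|x| x * x).sum::<f64>().sqrt();
///     if n == 0.0 { v.to_vec() } else { v.iter().map(|x| x / n).collect() }
/// }
///
/// // Cosine-style score: dot product of the normalized vectors.
/// fn cosine_score(a: &[f64], b: &[f64]) -> f64 {
///     let (a, b) = (normalize(a), normalize(b));
///     a.iter().zip(&b).map(|(x, y)| x * y).sum()
/// }
///
/// // L2-style score: Euclidean distance between the normalized vectors.
/// fn l2_score(a: &[f64], b: &[f64]) -> f64 {
///     let (a, b) = (normalize(a), normalize(b));
///     a.iter().zip(&b).map(|(x, y)| (x - y).powi(2)).sum::<f64>().sqrt()
/// }
/// ```
///
/// For unit-length vectors the two scores satisfy `|a - b|^2 = 2 - 2 * cos(a, b)`, so they rank
/// pairs identically and differ only in the threshold a match is compared against.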

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum FaceRecognizerSF_DisType {

FR_COSINE = 0,

FR_NORM_L2 = 1,

}

opencv_type_enum! { crate::objdetect::FaceRecognizerSF_DisType }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum HOGDescriptor_DescriptorStorageFormat {

DESCR_FORMAT_COL_BY_COL = 0,

DESCR_FORMAT_ROW_BY_ROW = 1,

}

opencv_type_enum! { crate::objdetect::HOGDescriptor_DescriptorStorageFormat }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum HOGDescriptor_HistogramNormType {

/// Default histogramNormType

L2Hys = 0,

}

opencv_type_enum! { crate::objdetect::HOGDescriptor_HistogramNormType }

/// Predefined marker dictionaries/sets

///

/// Each dictionary indicates the number of bits and the number of markers contained.

/// - DICT_ARUCO_ORIGINAL: standard ArUco Library Markers. 1024 markers, 5x5 bits, 0 minimum

///   distance
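///
/// The "minimum hamming distance" quoted for each entry below can be illustrated with a small
/// sketch. It assumes a hypothetical encoding in which a 4x4 marker's bits are packed row-major
/// into a `u16`, and it compares each pair of markers under all four rotations, since a printed
/// marker may be seen rotated. The real dictionaries' storage format and generation criteria
/// are not reproduced here.
///
/// ```rust
/// // Number of differing bits between two packed 4x4 codes.
/// fn hamming(a: u16, b: u16) -> u32 {
///     (a ^ b).count_ones()
/// }
///
/// // Rotates a packed 4x4 grid by 90 degrees clockwise:
/// // cell (r, c) moves to (c, 3 - r).
/// fn rotate90(code: u16) -> u16 {
///     let mut out = 0u16;
///     for r in 0..4 {
///         for c in 0..4 {
///             let bit = (code >> (r * 4 + c)) & 1;
///             out |= bit << (c * 4 + (3 - r));
///         }
///     }
///     out
/// }
///
/// // Smallest distance between any two distinct markers, trying all four
/// // rotations of the second marker of each pair.
/// fn min_distance(codes: &[u16]) -> u32 {
///     let mut best = u32::MAX;
///     for i in 0..codes.len() {
///         for j in (i + 1)..codes.len() {
///             let mut rot = codes[j];
///             for _ in 0..4 {
///                 best = best.min(hamming(codes[i], rot));
///                 rot = rotate90(rot);
///             }
///         }
///     }
///     best
/// }
/// ```
///
/// With rotation taken into account, two markers that are rotations of each other have
/// distance 0 and could not be told apart.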

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum PredefinedDictionaryType {

/// 4x4 bits, minimum hamming distance between any two codes = 4, 50 codes

DICT_4X4_50 = 0,

/// 4x4 bits, minimum hamming distance between any two codes = 3, 100 codes

DICT_4X4_100 = 1,

/// 4x4 bits, minimum hamming distance between any two codes = 3, 250 codes

DICT_4X4_250 = 2,

/// 4x4 bits, minimum hamming distance between any two codes = 2, 1000 codes

DICT_4X4_1000 = 3,

/// 5x5 bits, minimum hamming distance between any two codes = 8, 50 codes

DICT_5X5_50 = 4,

/// 5x5 bits, minimum hamming distance between any two codes = 7, 100 codes

DICT_5X5_100 = 5,

/// 5x5 bits, minimum hamming distance between any two codes = 6, 250 codes

DICT_5X5_250 = 6,

/// 5x5 bits, minimum hamming distance between any two codes = 5, 1000 codes

DICT_5X5_1000 = 7,

/// 6x6 bits, minimum hamming distance between any two codes = 13, 50 codes

DICT_6X6_50 = 8,

/// 6x6 bits, minimum hamming distance between any two codes = 12, 100 codes

DICT_6X6_100 = 9,

/// 6x6 bits, minimum hamming distance between any two codes = 11, 250 codes

DICT_6X6_250 = 10,

/// 6x6 bits, minimum hamming distance between any two codes = 9, 1000 codes

DICT_6X6_1000 = 11,

/// 7x7 bits, minimum hamming distance between any two codes = 19, 50 codes

DICT_7X7_50 = 12,

/// 7x7 bits, minimum hamming distance between any two codes = 18, 100 codes

DICT_7X7_100 = 13,

/// 7x7 bits, minimum hamming distance between any two codes = 17, 250 codes

DICT_7X7_250 = 14,

/// 7x7 bits, minimum hamming distance between any two codes = 14, 1000 codes

DICT_7X7_1000 = 15,

/// 5x5 bits, minimum hamming distance between any two codes = 3, 1024 codes

DICT_ARUCO_ORIGINAL = 16,

/// 4x4 bits, minimum hamming distance between any two codes = 5, 30 codes

DICT_APRILTAG_16h5 = 17,

/// 5x5 bits, minimum hamming distance between any two codes = 9, 35 codes

DICT_APRILTAG_25h9 = 18,

/// 6x6 bits, minimum hamming distance between any two codes = 10, 2320 codes

DICT_APRILTAG_36h10 = 19,

/// 6x6 bits, minimum hamming distance between any two codes = 11, 587 codes

DICT_APRILTAG_36h11 = 20,

}

opencv_type_enum! { crate::objdetect::PredefinedDictionaryType }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum QRCodeEncoder_CorrectionLevel {

CORRECT_LEVEL_L = 0,

CORRECT_LEVEL_M = 1,

CORRECT_LEVEL_Q = 2,

CORRECT_LEVEL_H = 3,

}

opencv_type_enum! { crate::objdetect::QRCodeEncoder_CorrectionLevel }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum QRCodeEncoder_ECIEncodings {

ECI_UTF8 = 26,

}

opencv_type_enum! { crate::objdetect::QRCodeEncoder_ECIEncodings }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum QRCodeEncoder_EncodeMode {

MODE_AUTO = -1,

MODE_NUMERIC = 1,

MODE_ALPHANUMERIC = 2,

MODE_BYTE = 4,

MODE_ECI = 7,

MODE_KANJI = 8,

MODE_STRUCTURED_APPEND = 3,

}

opencv_type_enum! { crate::objdetect::QRCodeEncoder_EncodeMode }

pub type DetectionBasedTracker_Object = core::Tuple<(core::Rect, i32)>;

/// Draws a set of Charuco corners

/// ## Parameters

/// * image: input/output image. It must have 1 or 3 channels. The number of channels is not

/// altered.

/// * charucoCorners: vector of detected charuco corners

/// * charucoIds: list of identifiers for each corner in charucoCorners

/// * cornerColor: color of the square surrounding each corner

///

/// This function draws a set of detected Charuco corners. If the identifiers vector is provided, it also

/// draws the id of each corner.

///

/// ## C++ default parameters

/// * charuco_ids: noArray()

/// * corner_color: Scalar(255,0,0)

#[inline]

pub fn draw_detected_corners_charuco(image: &mut dyn core::ToInputOutputArray, charuco_corners: &dyn core::ToInputArray, charuco_ids: &dyn core::ToInputArray, corner_color: core::Scalar) -> Result<()> {

extern_container_arg!(image);

extern_container_arg!(charuco_corners);

extern_container_arg!(charuco_ids);

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_drawDetectedCornersCharuco_const__InputOutputArrayR_const__InputArrayR_const__InputArrayR_Scalar(image.as_raw__InputOutputArray(), charuco_corners.as_raw__InputArray(), charuco_ids.as_raw__InputArray(), corner_color.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Draw a set of detected ChArUco Diamond markers

///

/// ## Parameters

/// * image: input/output image. It must have 1 or 3 channels. The number of channels is not

/// altered.

/// * diamondCorners: positions of diamond corners in the same format returned by

/// detectCharucoDiamond() (e.g. std::vector<std::vector<cv::Point2f> >). For N detected markers,

/// the dimensions of this array should be Nx4. The order of the corners should be clockwise.

/// * diamondIds: vector of identifiers for diamonds in diamondCorners, in the same format

/// returned by detectCharucoDiamond() (e.g. std::vector<Vec4i>).

/// Optional; if not provided, ids are not painted.

/// * borderColor: color of marker borders. The remaining colors (text color and first corner color)

/// are calculated based on this one.

///

/// Given an array of detected diamonds, this function draws them in the image. The marker borders

/// are painted, along with the marker identifiers if provided.

/// This is useful for debugging purposes.

///

/// ## C++ default parameters

/// * diamond_ids: noArray()

/// * border_color: Scalar(0,0,255)

#[inline]

pub fn draw_detected_diamonds(image: &mut dyn core::ToInputOutputArray, diamond_corners: &dyn core::ToInputArray, diamond_ids: &dyn core::ToInputArray, border_color: core::Scalar) -> Result<()> {

extern_container_arg!(image);

extern_container_arg!(diamond_corners);

extern_container_arg!(diamond_ids);

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_drawDetectedDiamonds_const__InputOutputArrayR_const__InputArrayR_const__InputArrayR_Scalar(image.as_raw__InputOutputArray(), diamond_corners.as_raw__InputArray(), diamond_ids.as_raw__InputArray(), border_color.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Draw detected markers in image

///

/// ## Parameters

/// * image: input/output image. It must have 1 or 3 channels. The number of channels is not altered.

/// * corners: positions of marker corners on input image.

/// (e.g. std::vector<std::vector<cv::Point2f> >). For N detected markers, the dimensions of

/// this array should be Nx4. The order of the corners should be clockwise.

/// * ids: vector of identifiers for markers in corners.

/// Optional; if not provided, ids are not painted.

/// * borderColor: color of marker borders. The remaining colors (text color and first corner color)

/// are calculated based on this one to improve visualization.

///

/// Given an array of detected marker corners and their corresponding ids, this function draws

/// the markers in the image. The marker borders are painted, along with the marker identifiers if provided.

/// This is useful for debugging purposes.

///

/// ## C++ default parameters

/// * ids: noArray()

/// * border_color: Scalar(0,255,0)

#[inline]

pub fn draw_detected_markers(image: &mut dyn core::ToInputOutputArray, corners: &dyn core::ToInputArray, ids: &dyn core::ToInputArray, border_color: core::Scalar) -> Result<()> {

extern_container_arg!(image);

extern_container_arg!(corners);

extern_container_arg!(ids);

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_drawDetectedMarkers_const__InputOutputArrayR_const__InputArrayR_const__InputArrayR_Scalar(image.as_raw__InputOutputArray(), corners.as_raw__InputArray(), ids.as_raw__InputArray(), border_color.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Extend base dictionary by new nMarkers

///

/// ## Parameters

/// * nMarkers: number of markers in the dictionary

/// * markerSize: number of bits per dimension of each marker

/// * baseDictionary: Include the markers in this dictionary at the beginning (optional)

/// * randomSeed: a user supplied seed for theRNG()

///

/// This function creates a new dictionary composed of nMarkers markers, each consisting of

/// markerSize x markerSize bits. If baseDictionary is provided, its markers are directly

/// included and the rest are generated based on them. If the size of baseDictionary is greater

/// than nMarkers, only the first nMarkers in baseDictionary are taken and no new marker is added.

///

/// ## C++ default parameters

/// * base_dictionary: Dictionary()

/// * random_seed: 0

#[inline]

pub fn extend_dictionary(n_markers: i32, marker_size: i32, base_dictionary: &crate::objdetect::Dictionary, random_seed: i32) -> Result<crate::objdetect::Dictionary> {

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_extendDictionary_int_int_const_DictionaryR_int(n_markers, marker_size, base_dictionary.as_raw_Dictionary(), random_seed, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::Dictionary::opencv_from_extern(ret) };

Ok(ret)

}

/// Generate a canonical marker image

///

/// ## Parameters

/// * dictionary: dictionary of markers indicating the type of markers

/// * id: identifier of the marker that will be returned. It has to be a valid id in the specified dictionary.

/// * sidePixels: size of the image in pixels

/// * img: output image with the marker

/// * borderBits: width of the marker border.

///

/// This function returns a marker image in its canonical form (i.e. ready to be printed)
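///
/// A rough sketch of the canonical layout, not OpenCV's implementation: it assumes the inner
/// bits are given row-major as `bool`s (`true` = white cell), frames them with `border_bits`
/// black cells on every side, and upscales the resulting cell grid to `side` x `side` pixels
/// with nearest-neighbour sampling. `render_marker` is a hypothetical helper.
///
/// ```rust
/// // Renders an n x n bit grid (row-major, true = white) framed by
/// // `border_bits` black cells into a `side` x `side` grayscale image.
/// fn render_marker(bits: &[bool], n: usize, border_bits: usize, side: usize) -> Vec<u8> {
///     let cells = n + 2 * border_bits; // cells per side, border included
///     assert!(bits.len() == n * n && side >= cells);
///     let mut img = vec![0u8; side * side]; // start all black
///     for py in 0..side {
///         for px in 0..side {
///             // Nearest-neighbour: the cell this pixel falls into.
///             let (cy, cx) = (py * cells / side, px * cells / side);
///             let inner = (border_bits..border_bits + n).contains(&cy)
///                 && (border_bits..border_bits + n).contains(&cx);
///             if inner && bits[(cy - border_bits) * n + (cx - border_bits)] {
///                 img[py * side + px] = 255; // white cell
///             }
///         }
///     }
///     img
/// }
/// ```
///
/// For example, a 2x2 all-white grid with a 1-cell border rendered at 8x8 pixels gives a
/// 4x4-pixel white square centred in a black frame.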

///

/// ## C++ default parameters

/// * border_bits: 1

#[inline]

pub fn generate_image_marker(dictionary: &crate::objdetect::Dictionary, id: i32, side_pixels: i32, img: &mut dyn core::ToOutputArray, border_bits: i32) -> Result<()> {

extern_container_arg!(img);

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_generateImageMarker_const_DictionaryR_int_int_const__OutputArrayR_int(dictionary.as_raw_Dictionary(), id, side_pixels, img.as_raw__OutputArray(), border_bits, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Returns one of the predefined dictionaries defined in PredefinedDictionaryType

#[inline]

pub fn get_predefined_dictionary(name: crate::objdetect::PredefinedDictionaryType) -> Result<crate::objdetect::Dictionary> {

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_getPredefinedDictionary_PredefinedDictionaryType(name, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::Dictionary::opencv_from_extern(ret) };

Ok(ret)

}

/// Returns one of the predefined dictionaries referenced by DICT_*.

#[inline]

pub fn get_predefined_dictionary_i32(dict: i32) -> Result<crate::objdetect::Dictionary> {

return_send!(via ocvrs_return);

unsafe { sys::cv_aruco_getPredefinedDictionary_int(dict, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::Dictionary::opencv_from_extern(ret) };

Ok(ret)

}

#[inline]

pub fn create_face_detection_mask_generator() -> Result<core::Ptr<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_createFaceDetectionMaskGenerator(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>::opencv_from_extern(ret) };

Ok(ret)

}

/// This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

///

/// ## C++ default parameters

/// * detect_threshold: 0.0

/// * win_det_size: Size(64,128)

#[inline]

pub fn group_rectangles_meanshift(rect_list: &mut core::Vector<core::Rect>, found_weights: &mut core::Vector<f64>, found_scales: &mut core::Vector<f64>, detect_threshold: f64, win_det_size: core::Size) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_groupRectangles_meanshift_vectorLRectGR_vectorLdoubleGR_vectorLdoubleGR_double_Size(rect_list.as_raw_mut_VectorOfRect(), found_weights.as_raw_mut_VectorOff64(), found_scales.as_raw_mut_VectorOff64(), detect_threshold, win_det_size.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Groups the object candidate rectangles.

///

/// ## Parameters

/// * rectList: Input/output vector of rectangles. Output vector includes retained and grouped

/// rectangles. (The Python list is not modified in place.)

/// * groupThreshold: Minimum possible number of rectangles minus 1. The threshold is used in a

/// group of rectangles to retain it.

/// * eps: Relative difference between sides of the rectangles to merge them into a group.

///

/// The function is a wrapper for the generic function partition. It clusters all the input rectangles

/// using the rectangle equivalence criterion that combines rectangles with similar sizes and similar

/// locations. The similarity is defined by eps. When eps=0, no clustering is done at all. If

/// eps → +∞, all the rectangles are put in one cluster. Then, the small

/// clusters containing less than or equal to groupThreshold rectangles are rejected. In each other

/// cluster, the average rectangle is computed and put into the output rectangle list.
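///
/// A simplified sketch of the clustering described above. It assumes the equivalence test
/// used by OpenCV's `SimilarRects` helper: two rectangles are similar when each of their four
/// edges differs by at most `eps * (min width + min height) / 2`. Clusters are formed
/// transitively with a union-find, and clusters with at most `group_threshold` members are
/// dropped. `Rect`, `similar` and `group` are hypothetical, and refinements of the real
/// function (for example, removing small groups nested inside larger ones) are omitted.
///
/// ```rust
/// #[derive(Debug, Clone, Copy, PartialEq)]
/// struct Rect { x: i32, y: i32, w: i32, h: i32 }
///
/// // Every edge may differ by at most delta = eps * (min w + min h) / 2.
/// fn similar(a: &Rect, b: &Rect, eps: f64) -> bool {
///     let delta = eps * (a.w.min(b.w) + a.h.min(b.h)) as f64 * 0.5;
///     let close = |p: i32, q: i32| ((p - q).abs() as f64) <= delta;
///     close(a.x, b.x) && close(a.y, b.y)
///         && close(a.x + a.w, b.x + b.w) && close(a.y + a.h, b.y + b.h)
/// }
///
/// fn group(rects: &[Rect], group_threshold: usize, eps: f64) -> Vec<Rect> {
///     // Union-find root lookup with path halving.
///     fn find(p: &mut [usize], mut i: usize) -> usize {
///         while p[i] != i { p[i] = p[p[i]]; i = p[i]; }
///         i
///     }
///     let n = rects.len();
///     let mut parent: Vec<usize> = (0..n).collect();
///     for i in 0..n {
///         for j in (i + 1)..n {
///             if similar(&rects[i], &rects[j], eps) {
///                 let (a, b) = (find(&mut parent, i), find(&mut parent, j));
///                 parent[a] = b;
///             }
///         }
///     }
///     // Per-cluster sums of x, y, w, h and member count, indexed by root.
///     let mut sums = vec![(0i64, 0i64, 0i64, 0i64, 0usize); n];
///     for i in 0..n {
///         let s = &mut sums[find(&mut parent, i)];
///         s.0 += rects[i].x as i64; s.1 += rects[i].y as i64;
///         s.2 += rects[i].w as i64; s.3 += rects[i].h as i64; s.4 += 1;
///     }
///     // Keep clusters with more than `group_threshold` members; average them.
///     sums.iter()
///         .filter(|s| s.4 > group_threshold)
///         .map(|s| {
///             let c = s.4 as f64;
///             Rect {
///                 x: (s.0 as f64 / c).round() as i32,
///                 y: (s.1 as f64 / c).round() as i32,
///                 w: (s.2 as f64 / c).round() as i32,
///                 h: (s.3 as f64 / c).round() as i32,
///             }
///         })
///         .collect()
/// }
/// ```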

///

/// ## C++ default parameters

/// * eps: 0.2

#[inline]

pub fn group_rectangles(rect_list: &mut core::Vector<core::Rect>, group_threshold: i32, eps: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_groupRectangles_vectorLRectGR_int_double(rect_list.as_raw_mut_VectorOfRect(), group_threshold, eps, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Groups the object candidate rectangles.

///

/// ## Parameters

/// * rectList: Input/output vector of rectangles. Output vector includes retained and grouped

/// rectangles. (The Python list is not modified in place.)

/// * groupThreshold: Minimum possible number of rectangles minus 1. The threshold is used in a

/// group of rectangles to retain it.

/// * eps: Relative difference between sides of the rectangles to merge them into a group.

///

/// The function is a wrapper for the generic function partition. It clusters all the input rectangles

/// using the rectangle equivalence criterion that combines rectangles with similar sizes and similar

/// locations. The similarity is defined by eps. When eps=0, no clustering is done at all. If

/// eps → +∞, all the rectangles are put in one cluster. Then, the small

/// clusters containing less than or equal to groupThreshold rectangles are rejected. In each other

/// cluster, the average rectangle is computed and put into the output rectangle list.

///

/// ## Overloaded parameters

#[inline]

pub fn group_rectangles_levelweights(rect_list: &mut core::Vector<core::Rect>, group_threshold: i32, eps: f64, weights: &mut core::Vector<i32>, level_weights: &mut core::Vector<f64>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_groupRectangles_vectorLRectGR_int_double_vectorLintGX_vectorLdoubleGX(rect_list.as_raw_mut_VectorOfRect(), group_threshold, eps, weights.as_raw_mut_VectorOfi32(), level_weights.as_raw_mut_VectorOff64(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Groups the object candidate rectangles.

///

/// ## Parameters

/// * rectList: Input/output vector of rectangles. Output vector includes retained and grouped

/// rectangles. (The Python list is not modified in place.)

/// * groupThreshold: Minimum possible number of rectangles minus 1. The threshold is used in a

/// group of rectangles to retain it.

/// * eps: Relative difference between sides of the rectangles to merge them into a group.

///

/// The function is a wrapper for the generic function partition. It clusters all the input rectangles

/// using the rectangle equivalence criterion that combines rectangles with similar sizes and similar

/// locations. The similarity is defined by eps. When eps=0, no clustering is done at all. If

/// eps → +∞, all the rectangles are put in one cluster. Then, the small

/// clusters containing less than or equal to groupThreshold rectangles are rejected. In each other

/// cluster, the average rectangle is computed and put into the output rectangle list.

///

/// ## Overloaded parameters

///

/// ## C++ default parameters

/// * eps: 0.2

#[inline]

pub fn group_rectangles_weights(rect_list: &mut core::Vector<core::Rect>, weights: &mut core::Vector<i32>, group_threshold: i32, eps: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_groupRectangles_vectorLRectGR_vectorLintGR_int_double(rect_list.as_raw_mut_VectorOfRect(), weights.as_raw_mut_VectorOfi32(), group_threshold, eps, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Groups the object candidate rectangles.

///

/// ## Parameters

/// * rectList: Input/output vector of rectangles. Output vector includes retained and grouped

/// rectangles. (The Python list is not modified in place.)

/// * groupThreshold: Minimum possible number of rectangles minus 1. The threshold is used in a

/// group of rectangles to retain it.

/// * eps: Relative difference between sides of the rectangles to merge them into a group.

///

/// The function is a wrapper for the generic function partition. It clusters all the input rectangles

/// using the rectangle equivalence criterion that combines rectangles with similar sizes and similar

/// locations. The similarity is defined by eps. When eps=0, no clustering is done at all. If

/// eps → +∞, all the rectangles are put in one cluster. Then, the small

/// clusters containing less than or equal to groupThreshold rectangles are rejected. In each other

/// cluster, the average rectangle is computed and put into the output rectangle list.

///

/// ## Overloaded parameters

///

/// ## C++ default parameters

/// * eps: 0.2

#[inline]

pub fn group_rectangles_levels(rect_list: &mut core::Vector<core::Rect>, reject_levels: &mut core::Vector<i32>, level_weights: &mut core::Vector<f64>, group_threshold: i32, eps: f64) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_groupRectangles_vectorLRectGR_vectorLintGR_vectorLdoubleGR_int_double(rect_list.as_raw_mut_VectorOfRect(), reject_levels.as_raw_mut_VectorOfi32(), level_weights.as_raw_mut_VectorOff64(), group_threshold, eps, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Constant methods for [crate::objdetect::BaseCascadeClassifier]

pub trait BaseCascadeClassifierConst: core::AlgorithmTraitConst {

fn as_raw_BaseCascadeClassifier(&self) -> *const c_void;

#[inline]

fn empty(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_empty_const(self.as_raw_BaseCascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn is_old_format_cascade(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_isOldFormatCascade_const(self.as_raw_BaseCascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_original_window_size(&self) -> Result<core::Size> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_getOriginalWindowSize_const(self.as_raw_BaseCascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_feature_type(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_getFeatureType_const(self.as_raw_BaseCascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

pub trait BaseCascadeClassifier: core::AlgorithmTrait + crate::objdetect::BaseCascadeClassifierConst {

fn as_raw_mut_BaseCascadeClassifier(&mut self) -> *mut c_void;

#[inline]

fn load(&mut self, filename: &str) -> Result<bool> {

extern_container_arg!(filename);

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_load_const_StringR(self.as_raw_mut_BaseCascadeClassifier(), filename.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn detect_multi_scale(&mut self, image: &dyn core::ToInputArray, objects: &mut core::Vector<core::Rect>, scale_factor: f64, min_neighbors: i32, flags: i32, min_size: core::Size, max_size: core::Size) -> Result<()> {

extern_container_arg!(image);

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_detectMultiScale_const__InputArrayR_vectorLRectGR_double_int_int_Size_Size(self.as_raw_mut_BaseCascadeClassifier(), image.as_raw__InputArray(), objects.as_raw_mut_VectorOfRect(), scale_factor, min_neighbors, flags, min_size.opencv_as_extern(), max_size.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn detect_multi_scale_num(&mut self, image: &dyn core::ToInputArray, objects: &mut core::Vector<core::Rect>, num_detections: &mut core::Vector<i32>, scale_factor: f64, min_neighbors: i32, flags: i32, min_size: core::Size, max_size: core::Size) -> Result<()> {

extern_container_arg!(image);

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_detectMultiScale_const__InputArrayR_vectorLRectGR_vectorLintGR_double_int_int_Size_Size(self.as_raw_mut_BaseCascadeClassifier(), image.as_raw__InputArray(), objects.as_raw_mut_VectorOfRect(), num_detections.as_raw_mut_VectorOfi32(), scale_factor, min_neighbors, flags, min_size.opencv_as_extern(), max_size.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn detect_multi_scale_levels(&mut self, image: &dyn core::ToInputArray, objects: &mut core::Vector<core::Rect>, reject_levels: &mut core::Vector<i32>, level_weights: &mut core::Vector<f64>, scale_factor: f64, min_neighbors: i32, flags: i32, min_size: core::Size, max_size: core::Size, output_reject_levels: bool) -> Result<()> {

extern_container_arg!(image);

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_detectMultiScale_const__InputArrayR_vectorLRectGR_vectorLintGR_vectorLdoubleGR_double_int_int_Size_Size_bool(self.as_raw_mut_BaseCascadeClassifier(), image.as_raw__InputArray(), objects.as_raw_mut_VectorOfRect(), reject_levels.as_raw_mut_VectorOfi32(), level_weights.as_raw_mut_VectorOff64(), scale_factor, min_neighbors, flags, min_size.opencv_as_extern(), max_size.opencv_as_extern(), output_reject_levels, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_old_cascade(&mut self) -> Result<*mut c_void> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_getOldCascade(self.as_raw_mut_BaseCascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_mask_generator(&mut self, mask_generator: &core::Ptr<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_setMaskGenerator_const_PtrLMaskGeneratorGR(self.as_raw_mut_BaseCascadeClassifier(), mask_generator.as_raw_PtrOfBaseCascadeClassifier_MaskGenerator(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_mask_generator(&mut self) -> Result<core::Ptr<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_getMaskGenerator(self.as_raw_mut_BaseCascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>::opencv_from_extern(ret) };

Ok(ret)

}

}

/// Constant methods for [crate::objdetect::BaseCascadeClassifier_MaskGenerator]

pub trait BaseCascadeClassifier_MaskGeneratorConst {

fn as_raw_BaseCascadeClassifier_MaskGenerator(&self) -> *const c_void;

}

pub trait BaseCascadeClassifier_MaskGenerator: crate::objdetect::BaseCascadeClassifier_MaskGeneratorConst {

fn as_raw_mut_BaseCascadeClassifier_MaskGenerator(&mut self) -> *mut c_void;

#[inline]

fn generate_mask(&mut self, src: &core::Mat) -> Result<core::Mat> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_MaskGenerator_generateMask_const_MatR(self.as_raw_mut_BaseCascadeClassifier_MaskGenerator(), src.as_raw_Mat(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Mat::opencv_from_extern(ret) };

Ok(ret)

}

#[inline]

fn initialize_mask(&mut self, unnamed: &core::Mat) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_BaseCascadeClassifier_MaskGenerator_initializeMask_const_MatR(self.as_raw_mut_BaseCascadeClassifier_MaskGenerator(), unnamed.as_raw_Mat(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Constant methods for [crate::objdetect::CascadeClassifier]

pub trait CascadeClassifierTraitConst {

fn as_raw_CascadeClassifier(&self) -> *const c_void;

/// Checks whether the classifier has been loaded.

#[inline]

fn empty(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_empty_const(self.as_raw_CascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn is_old_format_cascade(&self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_isOldFormatCascade_const(self.as_raw_CascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_original_window_size(&self) -> Result<core::Size> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_getOriginalWindowSize_const(self.as_raw_CascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_feature_type(&self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_getFeatureType_const(self.as_raw_CascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Mutable methods for [crate::objdetect::CascadeClassifier]

pub trait CascadeClassifierTrait: crate::objdetect::CascadeClassifierTraitConst {

fn as_raw_mut_CascadeClassifier(&mut self) -> *mut c_void;

#[inline]

fn cc(&mut self) -> core::Ptr<dyn crate::objdetect::BaseCascadeClassifier> {

let ret = unsafe { sys::cv_CascadeClassifier_getPropCc(self.as_raw_mut_CascadeClassifier()) };

let ret = unsafe { core::Ptr::<dyn crate::objdetect::BaseCascadeClassifier>::opencv_from_extern(ret) };

ret

}

#[inline]

fn set_cc(&mut self, mut val: core::Ptr<dyn crate::objdetect::BaseCascadeClassifier>) {

let ret = unsafe { sys::cv_CascadeClassifier_setPropCc_PtrLBaseCascadeClassifierG(self.as_raw_mut_CascadeClassifier(), val.as_raw_mut_PtrOfBaseCascadeClassifier()) };

ret

}

/// Loads a classifier from a file.

///

/// ## Parameters

/// * filename: Name of the file from which the classifier is loaded. The file may contain an old

/// HAAR classifier trained by the haartraining application or a new cascade classifier trained by the

/// traincascade application.

#[inline]

fn load(&mut self, filename: &str) -> Result<bool> {

extern_container_arg!(filename);

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_load_const_StringR(self.as_raw_mut_CascadeClassifier(), filename.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Reads a classifier from a FileStorage node.

///

///

/// Note: Only a new cascade classifier (trained by the traincascade application) can be read this way.

#[inline]

fn read(&mut self, node: &core::FileNode) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_read_const_FileNodeR(self.as_raw_mut_CascadeClassifier(), node.as_raw_FileNode(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Detects objects of different sizes in the input image. The detected objects are returned as a list

/// of rectangles.

///

/// ## Parameters

/// * image: Matrix of the type CV_8U containing an image where objects are detected.

/// * objects: Vector of rectangles where each rectangle contains the detected object, the

/// rectangles may be partially outside the original image.

/// * scaleFactor: Parameter specifying how much the image size is reduced at each image scale.

/// * minNeighbors: Parameter specifying how many neighbors each candidate rectangle should have

/// to retain it.

/// * flags: Parameter with the same meaning for an old cascade as in the function

/// cvHaarDetectObjects. It is not used for a new cascade.

/// * minSize: Minimum possible object size. Objects smaller than that are ignored.

/// * maxSize: Maximum possible object size. Objects larger than that are ignored. If `maxSize == minSize`, the model is evaluated on a single scale.

///

/// ## C++ default parameters

/// * scale_factor: 1.1

/// * min_neighbors: 3

/// * flags: 0

/// * min_size: Size()

/// * max_size: Size()
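///
/// A rough, std-only sketch of how `scale_factor`, `min_size` and `max_size` interact. It assumes the
/// search window starts at the cascade's original window size (see `get_original_window_size`) and
/// grows by `scale_factor` per step until it no longer fits, with an unset `Size()` treated as "no
/// limit". The real implementation shrinks the image rather than growing the window and applies
/// further checks. `window_sizes` is a hypothetical helper, not part of this crate.
///
/// ```rust
/// // Hypothetical helper: lists the detection-window sizes (in original-image pixels)
/// // visited for a given scale factor and size limits. A (0, 0) size means "unset".
/// fn window_sizes(
///     original: (i32, i32),
///     image: (i32, i32),
///     scale_factor: f64,
///     min_size: (i32, i32),
///     max_size: (i32, i32),
/// ) -> Vec<(i32, i32)> {
///     // A factor <= 1.0 would never grow the window, so the loop would not terminate.
///     assert!(scale_factor > 1.0, "scale_factor must be greater than 1");
///     // An unset max_size falls back to the image size.
///     let max = if max_size.0 == 0 || max_size.1 == 0 { image } else { max_size };
///     let mut sizes = Vec::new();
///     let mut factor = 1.0_f64;
///     loop {
///         let w = (f64::from(original.0) * factor).round() as i32;
///         let h = (f64::from(original.1) * factor).round() as i32;
///         // Stop once the window exceeds max_size or the image itself.
///         if w > max.0 || h > max.1 || w > image.0 || h > image.1 {
///             break;
///         }
///         // Skip scales whose window is still smaller than min_size.
///         if w >= min_size.0 && h >= min_size.1 {
///             sizes.push((w, h));
///         }
///         factor *= scale_factor;
///     }
///     sizes
/// }
/// ```
///
/// In this model, setting min_size equal to max_size leaves at most one window size. This mirrors the
/// single-scale behaviour described for maxSize above.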

#[inline]

fn detect_multi_scale(&mut self, image: &dyn core::ToInputArray, objects: &mut core::Vector<core::Rect>, scale_factor: f64, min_neighbors: i32, flags: i32, min_size: core::Size, max_size: core::Size) -> Result<()> {

extern_container_arg!(image);

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_detectMultiScale_const__InputArrayR_vectorLRectGR_double_int_int_Size_Size(self.as_raw_mut_CascadeClassifier(), image.as_raw__InputArray(), objects.as_raw_mut_VectorOfRect(), scale_factor, min_neighbors, flags, min_size.opencv_as_extern(), max_size.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Detects objects of different sizes in the input image. The detected objects are returned as a list

/// of rectangles.

///

/// ## Parameters

/// * image: Matrix of the type CV_8U containing an image where objects are detected.

/// * objects: Vector of rectangles where each rectangle contains the detected object, the

/// rectangles may be partially outside the original image.

/// * scaleFactor: Parameter specifying how much the image size is reduced at each image scale.

/// * minNeighbors: Parameter specifying how many neighbors each candidate rectangle should have

/// to retain it.

/// * flags: Parameter with the same meaning for an old cascade as in the function

/// cvHaarDetectObjects. It is not used for a new cascade.

/// * minSize: Minimum possible object size. Objects smaller than that are ignored.

/// * maxSize: Maximum possible object size. Objects larger than that are ignored. If `maxSize == minSize`, the model is evaluated on a single scale.

///

/// ## Overloaded parameters

///

/// * image: Matrix of the type CV_8U containing an image where objects are detected.

/// * objects: Vector of rectangles where each rectangle contains the detected object, the

/// rectangles may be partially outside the original image.

/// * numDetections: Vector of detection numbers for the corresponding objects. An object's number

/// of detections is the number of neighboring positively classified rectangles that were joined

/// together to form the object.

/// * scaleFactor: Parameter specifying how much the image size is reduced at each image scale.

/// * minNeighbors: Parameter specifying how many neighbors each candidate rectangle should have

/// to retain it.

/// * flags: Parameter with the same meaning for an old cascade as in the function

/// cvHaarDetectObjects. It is not used for a new cascade.

/// * minSize: Minimum possible object size. Objects smaller than that are ignored.

/// * maxSize: Maximum possible object size. Objects larger than that are ignored. If `maxSize == minSize`, the model is evaluated on a single scale.

///

/// ## C++ default parameters

/// * scale_factor: 1.1

/// * min_neighbors: 3

/// * flags: 0

/// * min_size: Size()

/// * max_size: Size()
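///
/// A minimal std-only sketch of consuming the `numDetections` output. It assumes `objects` and
/// `num_detections` have already been copied into plain slices. Entry i of `num_detections` counts
/// the neighbouring positive rectangles merged into `objects[i]`, so ranking by it puts the
/// best-supported detections first. The `Rect` struct and `rank_by_support` helper are hypothetical,
/// not part of this crate.
///
/// ```rust
/// // Hypothetical plain rectangle (x, y, width, height) standing in for core::Rect.
/// #[derive(Clone, Copy, Debug, PartialEq)]
/// struct Rect { x: i32, y: i32, w: i32, h: i32 }
///
/// // Pairs each detection with its detection count and returns them sorted so that
/// // the best-supported objects come first. Both slices must be parallel.
/// fn rank_by_support(objects: &[Rect], num_detections: &[i32]) -> Vec<(Rect, i32)> {
///     assert_eq!(objects.len(), num_detections.len(), "outputs must be parallel");
///     let mut ranked: Vec<(Rect, i32)> = objects
///         .iter()
///         .copied()
///         .zip(num_detections.iter().copied())
///         .collect();
///     // Stable sort, descending by count; ties keep the detector's order.
///     ranked.sort_by(|a, b| b.1.cmp(&a.1));
///     ranked
/// }
/// ```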

#[inline]

fn detect_multi_scale2(&mut self, image: &dyn core::ToInputArray, objects: &mut core::Vector<core::Rect>, num_detections: &mut core::Vector<i32>, scale_factor: f64, min_neighbors: i32, flags: i32, min_size: core::Size, max_size: core::Size) -> Result<()> {

extern_container_arg!(image);

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_detectMultiScale_const__InputArrayR_vectorLRectGR_vectorLintGR_double_int_int_Size_Size(self.as_raw_mut_CascadeClassifier(), image.as_raw__InputArray(), objects.as_raw_mut_VectorOfRect(), num_detections.as_raw_mut_VectorOfi32(), scale_factor, min_neighbors, flags, min_size.opencv_as_extern(), max_size.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Detects objects of different sizes in the input image. The detected objects are returned as a list

/// of rectangles.

///

/// ## Parameters

/// * image: Matrix of the type CV_8U containing an image where objects are detected.

/// * objects: Vector of rectangles where each rectangle contains the detected object, the

/// rectangles may be partially outside the original image.

/// * scaleFactor: Parameter specifying how much the image size is reduced at each image scale.

/// * minNeighbors: Parameter specifying how many neighbors each candidate rectangle should have

/// to retain it.

/// * flags: Parameter with the same meaning for an old cascade as in the function

/// cvHaarDetectObjects. It is not used for a new cascade.

/// * minSize: Minimum possible object size. Objects smaller than that are ignored.

/// * maxSize: Maximum possible object size. Objects larger than that are ignored. If `maxSize == minSize`, the model is evaluated on a single scale.

///

/// ## Overloaded parameters

///

/// This function allows you to retrieve the final stage decision certainty of classification.

/// For this, set `outputRejectLevels` to true and provide the `rejectLevels` and `levelWeights` parameters.

/// For each resulting detection, `levelWeights` will then contain the certainty of classification at the final stage.

/// This value can then be used to separate strong from weaker classifications.

///

/// A code sample on how to use it efficiently can be found below:

/// ```C++

/// Mat img = imread("/path/to/your/image.jpg", IMREAD_GRAYSCALE);

/// vector<double> weights;

/// vector<int> levels;

/// vector<Rect> detections;

/// CascadeClassifier model("/path/to/your/model.xml");

/// model.detectMultiScale(img, detections, levels, weights, 1.1, 3, 0, Size(), Size(), true);

/// if (!detections.empty())

///     cerr << "Detection " << detections[0] << " with weight " << weights[0] << endl;

/// ```

///

///

/// ## C++ default parameters

/// * scale_factor: 1.1

/// * min_neighbors: 3

/// * flags: 0

/// * min_size: Size()

/// * max_size: Size()

/// * output_reject_levels: false
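///
/// A minimal std-only sketch of the strong/weak split described above. It assumes
/// `output_reject_levels` was set to true, so that `level_weights` holds one final-stage weight per
/// detection, and that both outputs have been copied into plain slices. The `Rect` struct, the
/// `split_by_weight` helper and the threshold value are hypothetical; a suitable threshold depends on
/// the trained model.
///
/// ```rust
/// // Hypothetical plain rectangle (x, y, width, height) standing in for core::Rect.
/// #[derive(Clone, Copy, Debug, PartialEq)]
/// struct Rect { x: i32, y: i32, w: i32, h: i32 }
///
/// // Splits detections into (strong, weak) by comparing each final-stage weight with
/// // `threshold`. The threshold is application-specific.
/// fn split_by_weight(objects: &[Rect], level_weights: &[f64], threshold: f64) -> (Vec<Rect>, Vec<Rect>) {
///     assert_eq!(objects.len(), level_weights.len(), "outputs must be parallel");
///     let mut strong = Vec::new();
///     let mut weak = Vec::new();
///     for (rect, &w) in objects.iter().zip(level_weights) {
///         if w >= threshold {
///             strong.push(*rect);
///         } else {
///             weak.push(*rect);
///         }
///     }
///     (strong, weak)
/// }
/// ```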

#[inline]

fn detect_multi_scale3(&mut self, image: &dyn core::ToInputArray, objects: &mut core::Vector<core::Rect>, reject_levels: &mut core::Vector<i32>, level_weights: &mut core::Vector<f64>, scale_factor: f64, min_neighbors: i32, flags: i32, min_size: core::Size, max_size: core::Size, output_reject_levels: bool) -> Result<()> {

extern_container_arg!(image);

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_detectMultiScale_const__InputArrayR_vectorLRectGR_vectorLintGR_vectorLdoubleGR_double_int_int_Size_Size_bool(self.as_raw_mut_CascadeClassifier(), image.as_raw__InputArray(), objects.as_raw_mut_VectorOfRect(), reject_levels.as_raw_mut_VectorOfi32(), level_weights.as_raw_mut_VectorOff64(), scale_factor, min_neighbors, flags, min_size.opencv_as_extern(), max_size.opencv_as_extern(), output_reject_levels, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_old_cascade(&mut self) -> Result<*mut c_void> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_getOldCascade(self.as_raw_mut_CascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_mask_generator(&mut self, mask_generator: &core::Ptr<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_setMaskGenerator_const_PtrLMaskGeneratorGR(self.as_raw_mut_CascadeClassifier(), mask_generator.as_raw_PtrOfBaseCascadeClassifier_MaskGenerator(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_mask_generator(&mut self) -> Result<core::Ptr<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_getMaskGenerator(self.as_raw_mut_CascadeClassifier(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { core::Ptr::<dyn crate::objdetect::BaseCascadeClassifier_MaskGenerator>::opencv_from_extern(ret) };

Ok(ret)

}

}

/// The sample program samples/cpp/facedetect.cpp demonstrates usage of the cascade classifier class.


///

/// Cascade classifier class for object detection.

pub struct CascadeClassifier {

ptr: *mut c_void

}

opencv_type_boxed! { CascadeClassifier }

impl Drop for CascadeClassifier {

fn drop(&mut self) {

extern "C" { fn cv_CascadeClassifier_delete(instance: *mut c_void); }

unsafe { cv_CascadeClassifier_delete(self.as_raw_mut_CascadeClassifier()) };

}

}

unsafe impl Send for CascadeClassifier {}

impl crate::objdetect::CascadeClassifierTraitConst for CascadeClassifier {

#[inline] fn as_raw_CascadeClassifier(&self) -> *const c_void { self.as_raw() }

}

impl crate::objdetect::CascadeClassifierTrait for CascadeClassifier {

#[inline] fn as_raw_mut_CascadeClassifier(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl CascadeClassifier {

#[inline]

pub fn default() -> Result<crate::objdetect::CascadeClassifier> {

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_CascadeClassifier(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::CascadeClassifier::opencv_from_extern(ret) };

Ok(ret)

}

/// Loads a classifier from a file.

///

/// ## Parameters

/// * filename: Name of the file from which the classifier is loaded.

#[inline]

pub fn new(filename: &str) -> Result<crate::objdetect::CascadeClassifier> {

extern_container_arg!(filename);

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_CascadeClassifier_const_StringR(filename.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::CascadeClassifier::opencv_from_extern(ret) };

Ok(ret)

}

#[inline]

pub fn convert(oldcascade: &str, newcascade: &str) -> Result<bool> {

extern_container_arg!(oldcascade);

extern_container_arg!(newcascade);

return_send!(via ocvrs_return);

unsafe { sys::cv_CascadeClassifier_convert_const_StringR_const_StringR(oldcascade.opencv_as_extern(), newcascade.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Constant methods for [crate::objdetect::DetectionBasedTracker]

pub trait DetectionBasedTrackerTraitConst {

fn as_raw_DetectionBasedTracker(&self) -> *const c_void;

#[inline]

fn get_parameters(&self) -> Result<crate::objdetect::DetectionBasedTracker_Parameters> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_getParameters_const(self.as_raw_DetectionBasedTracker(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::DetectionBasedTracker_Parameters::opencv_from_extern(ret) };

Ok(ret)

}

#[inline]

fn get_objects(&self, result: &mut core::Vector<core::Rect>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_getObjects_const_vectorLRectGR(self.as_raw_DetectionBasedTracker(), result.as_raw_mut_VectorOfRect(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_objects_1(&self, result: &mut core::Vector<crate::objdetect::DetectionBasedTracker_Object>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_getObjects_const_vectorLObjectGR(self.as_raw_DetectionBasedTracker(), result.as_raw_mut_VectorOfDetectionBasedTracker_Object(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_objects_2(&self, result: &mut core::Vector<crate::objdetect::DetectionBasedTracker_ExtObject>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_getObjects_const_vectorLExtObjectGR(self.as_raw_DetectionBasedTracker(), result.as_raw_mut_VectorOfDetectionBasedTracker_ExtObject(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

/// Mutable methods for [crate::objdetect::DetectionBasedTracker]

pub trait DetectionBasedTrackerTrait: crate::objdetect::DetectionBasedTrackerTraitConst {

fn as_raw_mut_DetectionBasedTracker(&mut self) -> *mut c_void;

#[inline]

fn run(&mut self) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_run(self.as_raw_mut_DetectionBasedTracker(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn stop(&mut self) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_stop(self.as_raw_mut_DetectionBasedTracker(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn reset_tracking(&mut self) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_resetTracking(self.as_raw_mut_DetectionBasedTracker(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn process(&mut self, image_gray: &core::Mat) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_process_const_MatR(self.as_raw_mut_DetectionBasedTracker(), image_gray.as_raw_Mat(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_parameters(&mut self, params: &crate::objdetect::DetectionBasedTracker_Parameters) -> Result<bool> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_setParameters_const_ParametersR(self.as_raw_mut_DetectionBasedTracker(), params.as_raw_DetectionBasedTracker_Parameters(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn add_object(&mut self, location: core::Rect) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_addObject_const_RectR(self.as_raw_mut_DetectionBasedTracker(), &location, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

pub struct DetectionBasedTracker {

ptr: *mut c_void

}

opencv_type_boxed! { DetectionBasedTracker }

impl Drop for DetectionBasedTracker {

fn drop(&mut self) {

extern "C" { fn cv_DetectionBasedTracker_delete(instance: *mut c_void); }

unsafe { cv_DetectionBasedTracker_delete(self.as_raw_mut_DetectionBasedTracker()) };

}

}

unsafe impl Send for DetectionBasedTracker {}

impl crate::objdetect::DetectionBasedTrackerTraitConst for DetectionBasedTracker {

#[inline] fn as_raw_DetectionBasedTracker(&self) -> *const c_void { self.as_raw() }

}

impl crate::objdetect::DetectionBasedTrackerTrait for DetectionBasedTracker {

#[inline] fn as_raw_mut_DetectionBasedTracker(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl DetectionBasedTracker {

#[inline]

pub fn new(mut main_detector: core::Ptr<dyn crate::objdetect::DetectionBasedTracker_IDetector>, mut tracking_detector: core::Ptr<dyn crate::objdetect::DetectionBasedTracker_IDetector>, params: &crate::objdetect::DetectionBasedTracker_Parameters) -> Result<crate::objdetect::DetectionBasedTracker> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_DetectionBasedTracker_PtrLIDetectorG_PtrLIDetectorG_const_ParametersR(main_detector.as_raw_mut_PtrOfDetectionBasedTracker_IDetector(), tracking_detector.as_raw_mut_PtrOfDetectionBasedTracker_IDetector(), params.as_raw_DetectionBasedTracker_Parameters(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::DetectionBasedTracker::opencv_from_extern(ret) };

Ok(ret)

}

}

/// Constant methods for [crate::objdetect::DetectionBasedTracker_ExtObject]

pub trait DetectionBasedTracker_ExtObjectTraitConst {

fn as_raw_DetectionBasedTracker_ExtObject(&self) -> *const c_void;

#[inline]

fn id(&self) -> i32 {

let ret = unsafe { sys::cv_DetectionBasedTracker_ExtObject_getPropId_const(self.as_raw_DetectionBasedTracker_ExtObject()) };

ret

}

#[inline]

fn location(&self) -> core::Rect {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_ExtObject_getPropLocation_const(self.as_raw_DetectionBasedTracker_ExtObject(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

ret

}

#[inline]

fn status(&self) -> crate::objdetect::DetectionBasedTracker_ObjectStatus {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_ExtObject_getPropStatus_const(self.as_raw_DetectionBasedTracker_ExtObject(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

ret

}

}

/// Mutable methods for [crate::objdetect::DetectionBasedTracker_ExtObject]

pub trait DetectionBasedTracker_ExtObjectTrait: crate::objdetect::DetectionBasedTracker_ExtObjectTraitConst {

fn as_raw_mut_DetectionBasedTracker_ExtObject(&mut self) -> *mut c_void;

#[inline]

fn set_id(&mut self, val: i32) {

let ret = unsafe { sys::cv_DetectionBasedTracker_ExtObject_setPropId_int(self.as_raw_mut_DetectionBasedTracker_ExtObject(), val) };

ret

}

#[inline]

fn set_location(&mut self, val: core::Rect) {

let ret = unsafe { sys::cv_DetectionBasedTracker_ExtObject_setPropLocation_Rect(self.as_raw_mut_DetectionBasedTracker_ExtObject(), val.opencv_as_extern()) };

ret

}

#[inline]

fn set_status(&mut self, val: crate::objdetect::DetectionBasedTracker_ObjectStatus) {

let ret = unsafe { sys::cv_DetectionBasedTracker_ExtObject_setPropStatus_ObjectStatus(self.as_raw_mut_DetectionBasedTracker_ExtObject(), val) };

ret

}

}

pub struct DetectionBasedTracker_ExtObject {

ptr: *mut c_void

}

opencv_type_boxed! { DetectionBasedTracker_ExtObject }

impl Drop for DetectionBasedTracker_ExtObject {

fn drop(&mut self) {

extern "C" { fn cv_DetectionBasedTracker_ExtObject_delete(instance: *mut c_void); }

unsafe { cv_DetectionBasedTracker_ExtObject_delete(self.as_raw_mut_DetectionBasedTracker_ExtObject()) };

}

}

unsafe impl Send for DetectionBasedTracker_ExtObject {}

impl crate::objdetect::DetectionBasedTracker_ExtObjectTraitConst for DetectionBasedTracker_ExtObject {

#[inline] fn as_raw_DetectionBasedTracker_ExtObject(&self) -> *const c_void { self.as_raw() }

}

impl crate::objdetect::DetectionBasedTracker_ExtObjectTrait for DetectionBasedTracker_ExtObject {

#[inline] fn as_raw_mut_DetectionBasedTracker_ExtObject(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl DetectionBasedTracker_ExtObject {

#[inline]

pub fn new(_id: i32, _location: core::Rect, _status: crate::objdetect::DetectionBasedTracker_ObjectStatus) -> Result<crate::objdetect::DetectionBasedTracker_ExtObject> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_ExtObject_ExtObject_int_Rect_ObjectStatus(_id, _location.opencv_as_extern(), _status, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::DetectionBasedTracker_ExtObject::opencv_from_extern(ret) };

Ok(ret)

}

}

/// Constant methods for [crate::objdetect::DetectionBasedTracker_IDetector]

pub trait DetectionBasedTracker_IDetectorConst {

fn as_raw_DetectionBasedTracker_IDetector(&self) -> *const c_void;

#[inline]

fn get_min_object_size(&self) -> Result<core::Size> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_getMinObjectSize_const(self.as_raw_DetectionBasedTracker_IDetector(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_max_object_size(&self) -> Result<core::Size> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_getMaxObjectSize_const(self.as_raw_DetectionBasedTracker_IDetector(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}

pub trait DetectionBasedTracker_IDetector: crate::objdetect::DetectionBasedTracker_IDetectorConst {

fn as_raw_mut_DetectionBasedTracker_IDetector(&mut self) -> *mut c_void;

#[inline]

fn detect(&mut self, image: &core::Mat, objects: &mut core::Vector<core::Rect>) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_detect_const_MatR_vectorLRectGR(self.as_raw_mut_DetectionBasedTracker_IDetector(), image.as_raw_Mat(), objects.as_raw_mut_VectorOfRect(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_min_object_size(&mut self, min: core::Size) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_setMinObjectSize_const_SizeR(self.as_raw_mut_DetectionBasedTracker_IDetector(), &min, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_max_object_size(&mut self, max: core::Size) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_setMaxObjectSize_const_SizeR(self.as_raw_mut_DetectionBasedTracker_IDetector(), &max, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_scale_factor(&mut self) -> Result<f32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_getScaleFactor(self.as_raw_mut_DetectionBasedTracker_IDetector(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_scale_factor(&mut self, value: f32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_setScaleFactor_float(self.as_raw_mut_DetectionBasedTracker_IDetector(), value, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_min_neighbours(&mut self) -> Result<i32> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_getMinNeighbours(self.as_raw_mut_DetectionBasedTracker_IDetector(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn set_min_neighbours(&mut self, value: i32) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_IDetector_setMinNeighbours_int(self.as_raw_mut_DetectionBasedTracker_IDetector(), value, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

}
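
// Illustrative sketch, not part of the generated bindings. It shows what a "minimum number
// of neighbours" setting controls: cascade detectors usually fire several times around a
// real object, so overlapping hits are clustered and a cluster survives only if it has more
// than `min_neighbours` members. This loosely follows the idea of OpenCV's
// `groupRectangles`. Assumptions: rectangles are plain `(x, y, w, h)` tuples, similarity
// uses a relative tolerance `eps` (0.2 in the tests), and the nested-rectangle filtering
// OpenCV also performs is omitted. `example_group_rects` is a hypothetical helper.
#[allow(dead_code)]
fn example_group_rects(
    rects: &[(i32, i32, i32, i32)],
    min_neighbours: usize,
    eps: f64,
) -> Vec<(i32, i32, i32, i32)> {
    type R = (i32, i32, i32, i32);
    // Two rectangles are "similar" when all four edges differ by at most `delta`.
    let similar = |a: &R, b: &R| {
        let delta = eps * (a.2.min(b.2) + a.3.min(b.3)) as f64 * 0.5;
        let close = |p: i32, q: i32| ((p - q) as f64).abs() <= delta;
        close(a.0, b.0) && close(a.1, b.1) && close(a.0 + a.2, b.0 + b.2) && close(a.1 + a.3, b.1 + b.3)
    };
    // Union-find over indices: similar rectangles end up in the same cluster.
    fn find(parent: &mut [usize], i: usize) -> usize {
        let mut root = i;
        while parent[root] != root {
            root = parent[root];
        }
        parent[i] = root;
        root
    }
    let mut parent: Vec<usize> = (0..rects.len()).collect();
    for i in 0..rects.len() {
        for j in (i + 1)..rects.len() {
            if similar(&rects[i], &rects[j]) {
                let (ri, rj) = (find(&mut parent, i), find(&mut parent, j));
                parent[ri] = rj;
            }
        }
    }
    // Sum each cluster's coordinates, keyed by root index (BTreeMap keeps output order stable).
    let mut clusters: std::collections::BTreeMap<usize, ([i64; 4], usize)> = std::collections::BTreeMap::new();
    for (i, r) in rects.iter().enumerate() {
        let root = find(&mut parent, i);
        let entry = clusters.entry(root).or_insert(([0; 4], 0));
        for (acc, v) in entry.0.iter_mut().zip([r.0, r.1, r.2, r.3]) {
            *acc += i64::from(v);
        }
        entry.1 += 1;
    }
    // Keep clusters with more than `min_neighbours` members and return their average rectangle.
    clusters
        .into_values()
        .filter(|&(_, n)| n > min_neighbours)
        .map(|(s, n)| {
            let avg = |v: i64| (v as f64 / n as f64).round() as i32;
            (avg(s[0]), avg(s[1]), avg(s[2]), avg(s[3]))
        })
        .collect()
}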

/// Constant methods for [crate::objdetect::DetectionBasedTracker_Parameters]

pub trait DetectionBasedTracker_ParametersTraitConst {

fn as_raw_DetectionBasedTracker_Parameters(&self) -> *const c_void;

#[inline]

fn max_track_lifetime(&self) -> i32 {

let ret = unsafe { sys::cv_DetectionBasedTracker_Parameters_getPropMaxTrackLifetime_const(self.as_raw_DetectionBasedTracker_Parameters()) };

ret

}

#[inline]

fn min_detection_period(&self) -> i32 {

let ret = unsafe { sys::cv_DetectionBasedTracker_Parameters_getPropMinDetectionPeriod_const(self.as_raw_DetectionBasedTracker_Parameters()) };

ret

}

}

/// Mutable methods for [crate::objdetect::DetectionBasedTracker_Parameters]

pub trait DetectionBasedTracker_ParametersTrait: crate::objdetect::DetectionBasedTracker_ParametersTraitConst {

fn as_raw_mut_DetectionBasedTracker_Parameters(&mut self) -> *mut c_void;

#[inline]

fn set_max_track_lifetime(&mut self, val: i32) {

let ret = unsafe { sys::cv_DetectionBasedTracker_Parameters_setPropMaxTrackLifetime_int(self.as_raw_mut_DetectionBasedTracker_Parameters(), val) };

ret

}

#[inline]

fn set_min_detection_period(&mut self, val: i32) {

let ret = unsafe { sys::cv_DetectionBasedTracker_Parameters_setPropMinDetectionPeriod_int(self.as_raw_mut_DetectionBasedTracker_Parameters(), val) };

ret

}

}

pub struct DetectionBasedTracker_Parameters {

ptr: *mut c_void

}

opencv_type_boxed! { DetectionBasedTracker_Parameters }

impl Drop for DetectionBasedTracker_Parameters {

fn drop(&mut self) {

extern "C" { fn cv_DetectionBasedTracker_Parameters_delete(instance: *mut c_void); }

unsafe { cv_DetectionBasedTracker_Parameters_delete(self.as_raw_mut_DetectionBasedTracker_Parameters()) };

}

}

unsafe impl Send for DetectionBasedTracker_Parameters {}

impl crate::objdetect::DetectionBasedTracker_ParametersTraitConst for DetectionBasedTracker_Parameters {

#[inline] fn as_raw_DetectionBasedTracker_Parameters(&self) -> *const c_void { self.as_raw() }

}

impl crate::objdetect::DetectionBasedTracker_ParametersTrait for DetectionBasedTracker_Parameters {

#[inline] fn as_raw_mut_DetectionBasedTracker_Parameters(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl DetectionBasedTracker_Parameters {

#[inline]

pub fn default() -> Result<crate::objdetect::DetectionBasedTracker_Parameters> {

return_send!(via ocvrs_return);

unsafe { sys::cv_DetectionBasedTracker_Parameters_Parameters(ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

let ret = unsafe { crate::objdetect::DetectionBasedTracker_Parameters::opencv_from_extern(ret) };

Ok(ret)

}

}
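
// Illustrative sketch, not part of the generated bindings. It models one plausible reading
// of the two `DetectionBasedTracker_Parameters` fields. Assumptions: `max_track_lifetime`
// counts consecutive frames a track may go undetected before it is dropped, and
// `min_detection_period` is the minimum time in milliseconds between runs of the
// whole-frame detector (0 means no limit). `ExampleTrack`, `example_age_tracks` and
// `example_should_run_detector` are hypothetical; the real tracker logic lives in
// OpenCV's C++ implementation.
#[allow(dead_code)]
#[derive(Debug, Clone)]
struct ExampleTrack {
    id: i32,
    frames_not_detected: i32,
}

#[allow(dead_code)]
fn example_age_tracks(tracks: &mut Vec<ExampleTrack>, detected_ids: &[i32], max_track_lifetime: i32) {
    for t in tracks.iter_mut() {
        if detected_ids.contains(&t.id) {
            t.frames_not_detected = 0; // matched this frame: reset the age counter
        } else {
            t.frames_not_detected += 1;
        }
    }
    // Drop tracks that have been missing for longer than the allowed lifetime.
    tracks.retain(|t| t.frames_not_detected <= max_track_lifetime);
}

#[allow(dead_code)]
fn example_should_run_detector(now_ms: i64, last_run_ms: Option<i64>, min_detection_period: i32) -> bool {
    match last_run_ms {
        None => true, // the detector has never run, so run it now
        Some(last) => now_ms - last >= i64::from(min_detection_period),
    }
}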

/// Constant methods for [crate::objdetect::DetectionROI]

pub trait DetectionROITraitConst {

fn as_raw_DetectionROI(&self) -> *const c_void;

/// Scale (size) of the bounding box.

#[inline]

fn scale(&self) -> f64 {

let ret = unsafe { sys::cv_DetectionROI_getPropScale_const(self.as_raw_DetectionROI()) };

ret

}

/// set of requested locations to be evaluated

#[inline]

fn locations(&self) -> core::Vector<core::Point> {

let ret = unsafe { sys::cv_DetectionROI_getPropLocations_const(self.as_raw_DetectionROI()) };

let ret = unsafe { core::Vector::<core::Point>::opencv_from_extern(ret) };

ret

}

/// vector that will contain confidence values for each location

#[inline]

fn confidences(&self) -> core::Vector<f64> {

let ret = unsafe { sys::cv_DetectionROI_getPropConfidences_const(self.as_raw_DetectionROI()) };

let ret = unsafe { core::Vector::<f64>::opencv_from_extern(ret) };

ret

}

}

/// Mutable methods for [crate::objdetect::DetectionROI]

pub trait DetectionROITrait: crate::objdetect::DetectionROITraitConst {

fn as_raw_mut_DetectionROI(&mut self) -> *mut c_void;

/// Scale (size) of the bounding box.

#[inline]

fn set_scale(&mut self, val: f64) {

let ret = unsafe { sys::cv_DetectionROI_setPropScale_double(self.as_raw_mut_DetectionROI(), val) };

ret

}

/// set of requested locations to be evaluated

#[inline]

fn set_locations(&mut self, mut val: core::Vector<core::Point>) {

let ret = unsafe { sys::cv_DetectionROI_setPropLocations_vectorLPointG(self.as_raw_mut_DetectionROI(), val.as_raw_mut_VectorOfPoint()) };

ret

}

/// vector that will contain confidence values for each location

#[inline]

fn set_confidences(&mut self, mut val: core::Vector<f64>) {

let ret = unsafe { sys::cv_DetectionROI_setPropConfidences_vectorLdoubleG(self.as_raw_mut_DetectionROI(), val.as_raw_mut_VectorOff64()) };

ret

}

}

/// Struct for a detection region of interest (ROI).

pub struct DetectionROI {

ptr: *mut c_void

}

opencv_type_boxed! { DetectionROI }

impl Drop for DetectionROI {

fn drop(&mut self) {

extern "C" { fn cv_DetectionROI_delete(instance: *mut c_void); }

unsafe { cv_DetectionROI_delete(self.as_raw_mut_DetectionROI()) };

}

}

unsafe impl Send for DetectionROI {}

impl crate::objdetect::DetectionROITraitConst for DetectionROI {

#[inline] fn as_raw_DetectionROI(&self) -> *const c_void { self.as_raw() }

}

impl crate::objdetect::DetectionROITrait for DetectionROI {

#[inline] fn as_raw_mut_DetectionROI(&mut self) -> *mut c_void { self.as_raw_mut() }

}

impl DetectionROI {

}
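
// Illustrative sketch, not part of the generated bindings. `DetectionROI::locations` and
// `DetectionROI::confidences` are parallel vectors: entry `i` of one describes entry `i` of
// the other. The helper pairs them and keeps the locations whose confidence reaches a
// threshold, best first. Assumptions: plain `(i32, i32)` tuples stand in for `core::Point`,
// and `example_confident_locations` and its `hit_threshold` parameter are hypothetical.
#[allow(dead_code)]
fn example_confident_locations(
    locations: &[(i32, i32)],
    confidences: &[f64],
    hit_threshold: f64,
) -> Option<Vec<((i32, i32), f64)>> {
    // Mismatched lengths mean the confidences were not filled in for every location.
    if locations.len() != confidences.len() {
        return None;
    }
    let mut hits: Vec<((i32, i32), f64)> = locations
        .iter()
        .copied()
        .zip(confidences.iter().copied())
        .filter(|&(_, c)| c >= hit_threshold)
        .collect();
    // Highest confidence first; `total_cmp` provides a total order over f64.
    hits.sort_by(|a, b| b.1.total_cmp(&a.1));
    Some(hits)
}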

/// Constant methods for [crate::objdetect::FaceDetectorYN]

pub trait FaceDetectorYNConst {

fn as_raw_FaceDetectorYN(&self) -> *const c_void;

}
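
// Illustrative sketch, not part of the generated bindings. It shows the effect of a score
// threshold like the one set by `FaceDetectorYN::set_score_threshold`. Assumption: each
// detected face is a row of 15 `f32` values, following the layout OpenCV documents for
// `FaceDetectorYN::detect` (columns 0-3: x, y, width, height; 4-13: five landmark points;
// 14: score). The detector already drops faces below its own threshold, so a helper like the
// hypothetical `example_filter_faces` would only be useful for re-filtering rows copied out
// of the result `Mat` with a stricter threshold.
#[allow(dead_code)]
fn example_filter_faces(faces: &[[f32; 15]], score_threshold: f32) -> Vec<[f32; 15]> {
    const SCORE_COL: usize = 14;
    faces
        .iter()
        .filter(|row| row[SCORE_COL] >= score_threshold)
        .copied()
        .collect()
}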

/// DNN-based face detector

///

/// model download link: <https://github.com/opencv/opencv_zoo/tree/master/models/face_detection_yunet>

pub trait FaceDetectorYN: crate::objdetect::FaceDetectorYNConst {

fn as_raw_mut_FaceDetectorYN(&mut self) -> *mut c_void;

/// Sets the size of the network input, overriding the input size given when the model was created. Call this method when the size of the input image does not match that input size.

///

/// ## Parameters

/// * input_size: the size of the input image

#[inline]

fn set_input_size(&mut self, input_size: core::Size) -> Result<()> {

return_send!(via ocvrs_return);

unsafe { sys::cv_FaceDetectorYN_setInputSize_const_SizeR(self.as_raw_mut_FaceDetectorYN(), &input_size, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

#[inline]

fn get_input_size(&mut self) -> Result<core::Size> {

return_send!(via ocvrs_return);

unsafe { sys::cv_FaceDetectorYN_getInputSize(self.as_raw_mut_FaceDetectorYN(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Sets the score threshold; bounding boxes with a score below this value are filtered out.

///

/// ## Parameters

/// * score_threshold: threshold for filtering out bounding boxes

#[inline]

fn set_score_threshold(&mut self, score_threshold: f32) -> Result<()> {

return_send!(via ocvrs_return);