#![allow(

unused_parens,

clippy::excessive_precision,

clippy::missing_safety_doc,

clippy::should_implement_trait,

clippy::too_many_arguments,

clippy::unused_unit,

clippy::let_unit_value,

clippy::derive_partial_eq_without_eq,

)]

//! # Camera Calibration and 3D Reconstruction

//!

//! The functions in this section use a so-called pinhole camera model. The view of a scene

//! is obtained by projecting a scene's 3D point $P_w$ into the image plane using a perspective
//! transformation which forms the corresponding pixel $p$. Both $P_w$ and $p$ are
//! represented in homogeneous coordinates, i.e. as 3D and 2D homogeneous vectors respectively. You will

//! find a brief introduction to projective geometry, homogeneous vectors and homogeneous

//! transformations at the end of this section's introduction. For more succinct notation, we often drop

//! the 'homogeneous' and say vector instead of homogeneous vector.

//!

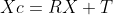

//! The distortion-free projective transformation given by a pinhole camera model is shown below.
//!
//! $$s \; p = A \begin{bmatrix} R|t \end{bmatrix} P_w,$$
//!
//! where $P_w$ is a 3D point expressed with respect to the world coordinate system,
//! $p$ is a 2D pixel in the image plane, $A$ is the camera intrinsic matrix,
//! $R$ and $t$ are the rotation and translation that describe the change of coordinates from
//! world to camera coordinate systems (or camera frame) and $s$ is the projective transformation's
//! arbitrary scaling and not part of the camera model.

//!

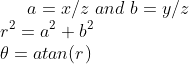

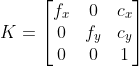

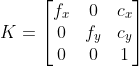

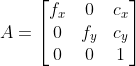

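//! The camera intrinsic matrix $A$ (notation used as in [Zhang2000](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Zhang2000) and also generally notated
//! as $K$) projects 3D points given in the camera coordinate system to 2D pixel coordinates, i.e.
//!
//! $$p = A P_c.$$
//!
//! The camera intrinsic matrix $A$ is composed of the focal lengths $f_x$ and $f_y$, which are
//! expressed in pixel units, and the principal point $(c_x, c_y)$, that is usually close to the
//! image center:
//!
//! $$A = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix},$$
//!
//! and thus
//!
//! $$s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix}.$$
//!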

//! The matrix of intrinsic parameters does not depend on the scene viewed. So, once estimated, it can

//! be re-used as long as the focal length is fixed (in case of a zoom lens). Thus, if an image from the

//! camera is scaled by a factor, all of these parameters need to be scaled (multiplied/divided,

//! respectively) by the same factor.
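/*!
The scaling rule above can be illustrated with a minimal sketch in plain Rust. The `Intrinsics` struct and the `scale_intrinsics` function are hypothetical helpers introduced only for this example; they are not part of this module, and the sketch assumes a uniform resize of the whole image.

```rust
/// Pinhole intrinsics in pixel units (hypothetical helper type).
#[derive(Debug, Clone, Copy, PartialEq)]
struct Intrinsics {
    fx: f64,
    fy: f64,
    cx: f64,
    cy: f64,
}

/// Returns the intrinsics that apply after the image has been resized by
/// `factor` in both directions (2.0 doubles the resolution, 0.5 halves it).
fn scale_intrinsics(k: Intrinsics, factor: f64) -> Intrinsics {
    Intrinsics {
        fx: k.fx * factor,
        fy: k.fy * factor,
        cx: k.cx * factor,
        cy: k.cy * factor,
    }
}
```

For instance, intrinsics estimated on 320 x 240 images would be passed through `scale_intrinsics(k, 2.0)` before being used with 640 x 480 images from the same camera.
*/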

//!

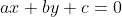

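//! The joint rotation-translation matrix $[R|t]$ is the matrix product of a projective
//! transformation and a homogeneous transformation. The 3-by-4 projective transformation maps 3D points
//! represented in camera coordinates to 2D points in the image plane and represented in normalized
//! camera coordinates $x' = X_c / Z_c$ and $y' = Y_c / Z_c$:
//!
//! $$Z_c \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}.$$
//!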

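//! The homogeneous transformation is encoded by the extrinsic parameters $R$ and $t$ and
//! represents the change of basis from world coordinate system $w$ to the camera coordinate system
//! $c$. Thus, given the representation of the point $P$ in world coordinates, $P_w$, we
//! obtain $P$'s representation in the camera coordinate system, $P_c$, by
//!
//! $$P_c = \begin{bmatrix} R & t \\ 0 & 1 \end{bmatrix} P_w.$$
//!
//! This homogeneous transformation is composed of $R$, a 3-by-3 rotation matrix, and $t$, a
//! 3-by-1 translation vector:
//!
//! $$\begin{bmatrix} R & t \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_x \\ r_{21} & r_{22} & r_{23} & t_y \\ r_{31} & r_{32} & r_{33} & t_z \\ 0 & 0 & 0 & 1 \end{bmatrix},$$
//!
//! and therefore
//!
//! $$\begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_x \\ r_{21} & r_{22} & r_{23} & t_y \\ r_{31} & r_{32} & r_{33} & t_z \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}.$$
//!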

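//! Combining the projective transformation and the homogeneous transformation, we obtain the projective
//! transformation that maps 3D points in world coordinates into 2D points in the image plane and in
//! normalized camera coordinates:
//!
//! $$Z_c \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} R|t \end{bmatrix} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_x \\ r_{21} & r_{22} & r_{23} & t_y \\ r_{31} & r_{32} & r_{33} & t_z \end{bmatrix} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix},$$
//!
//! with $x' = X_c / Z_c$ and $y' = Y_c / Z_c$. Putting the equations for intrinsics and extrinsics together, we can write out
//! $s \; p = A \begin{bmatrix} R|t \end{bmatrix} P_w$ as
//!
//! $$s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_x \\ r_{21} & r_{22} & r_{23} & t_y \\ r_{31} & r_{32} & r_{33} & t_z \end{bmatrix} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}.$$
//!
//! If $Z_c \ne 0$, the transformation above is equivalent to the following,
//!
//! $$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} f_x X_c / Z_c + c_x \\ f_y Y_c / Z_c + c_y \end{bmatrix}$$
//!
//! with
//!
//! $$\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} = \begin{bmatrix} R|t \end{bmatrix} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}.$$
//!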
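/*!
The distortion-free projection described above can be sketched in plain Rust as follows. This is an illustration of the formulas only, not the implementation used by OpenCV; the function name `project_point` and its argument layout are assumptions made for this example.

```rust
/// Projects a world point to pixel coordinates with the distortion-free
/// pinhole model. `r` and `t` map world coordinates to camera coordinates,
/// `intr` is `(fx, fy, cx, cy)` in pixel units.
/// Returns `None` when Z_c is zero, where the perspective division is undefined.
fn project_point(
    r: [[f64; 3]; 3],
    t: [f64; 3],
    intr: (f64, f64, f64, f64),
    pw: [f64; 3],
) -> Option<(f64, f64)> {
    // P_c = R * P_w + t
    let mut pc = [0.0_f64; 3];
    for i in 0..3 {
        pc[i] = r[i][0] * pw[0] + r[i][1] * pw[1] + r[i][2] * pw[2] + t[i];
    }
    if pc[2] == 0.0 {
        return None;
    }
    let (fx, fy, cx, cy) = intr;
    // u = fx * Xc / Zc + cx, v = fy * Yc / Zc + cy
    Some((fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy))
}
```

The sketch does not reject points behind the camera (negative Z_c); a real pipeline would usually treat those separately.
*/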

//! The following figure illustrates the pinhole camera model.

//!

//!

//!

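//! Real lenses usually have some distortion, mostly radial distortion, and slight tangential distortion.
//! So, the above model is extended as:
//!
//! $$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} f_x x'' + c_x \\ f_y y'' + c_y \end{bmatrix}$$
//!
//! where
//!
//! $$\begin{bmatrix} x'' \\ y'' \end{bmatrix} = \begin{bmatrix} x' \frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + 2 p_1 x' y' + p_2 (r^2 + 2 x'^2) + s_1 r^2 + s_2 r^4 \\ y' \frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + p_1 (r^2 + 2 y'^2) + 2 p_2 x' y' + s_3 r^2 + s_4 r^4 \end{bmatrix}$$
//!
//! with
//!
//! $$r^2 = x'^2 + y'^2$$
//!
//! and
//!
//! $$\begin{bmatrix} x' \\ y' \end{bmatrix} = \begin{bmatrix} X_c / Z_c \\ Y_c / Z_c \end{bmatrix},$$
//!
//! if $Z_c \ne 0$.
//!
//! The distortion parameters are the radial coefficients $k_1$, $k_2$, $k_3$, $k_4$, $k_5$, and
//! $k_6$, the tangential distortion coefficients $p_1$ and $p_2$, and the thin prism distortion
//! coefficients $s_1$, $s_2$, $s_3$, and $s_4$. Higher-order coefficients are not considered in OpenCV.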
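/*!
The extended model above maps normalized coordinates (x', y') to distorted coordinates (x'', y''). A minimal sketch of that mapping in plain Rust is given below. The `Distortion` struct and the `distort` function are hypothetical names used only for illustration; the sketch covers the radial, tangential and thin prism terms and leaves out the tilt model.

```rust
/// Distortion coefficients grouped by kind (hypothetical helper type).
#[derive(Debug, Clone, Copy, Default)]
struct Distortion {
    k: [f64; 6], // radial: k1..k6
    p: [f64; 2], // tangential: p1, p2
    s: [f64; 4], // thin prism: s1..s4
}

/// Applies radial, tangential and thin prism distortion to the normalized
/// point (x, y) = (Xc / Zc, Yc / Zc) and returns (x'', y'').
fn distort(d: &Distortion, x: f64, y: f64) -> (f64, f64) {
    let r2 = x * x + y * y;
    let r4 = r2 * r2;
    let r6 = r4 * r2;
    // Rational radial factor shared by both coordinates.
    let radial = (1.0 + d.k[0] * r2 + d.k[1] * r4 + d.k[2] * r6)
        / (1.0 + d.k[3] * r2 + d.k[4] * r4 + d.k[5] * r6);
    let xd = x * radial
        + 2.0 * d.p[0] * x * y
        + d.p[1] * (r2 + 2.0 * x * x)
        + d.s[0] * r2
        + d.s[1] * r4;
    let yd = y * radial
        + d.p[0] * (r2 + 2.0 * y * y)
        + 2.0 * d.p[1] * x * y
        + d.s[2] * r2
        + d.s[3] * r4;
    (xd, yd)
}
```

With all coefficients set to zero the function returns its input unchanged, which matches the distortion-free model.
*/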

//!

//! The next figures show two common types of radial distortion: barrel distortion
//! ($1 + k_1 r^2 + k_2 r^4 + k_3 r^6$ monotonically decreasing)
//! and pincushion distortion ($1 + k_1 r^2 + k_2 r^4 + k_3 r^6$ monotonically increasing).

//! Radial distortion is always monotonic for real lenses,

//! and if the estimator produces a non-monotonic result,

//! this should be considered a calibration failure.

//! More generally, radial distortion must be monotonic and the distortion function must be bijective.

//! A failed estimation result may look deceptively good near the image center

//! but will work poorly in e.g. AR/SFM applications.

//! The optimization method used in OpenCV camera calibration does not include these constraints as

//! the framework does not support the required integer programming and polynomial inequalities.

//! See [issue #15992](https://github.com/opencv/opencv/issues/15992) for additional information.
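/*!
Since the optimizer does not enforce these constraints, a calibration result can be checked after the fact. The sketch below is one possible sanity check, under stated assumptions: it only considers the polynomial coefficients k1, k2 and k3, it tests that the distorted radius r (1 + k1 r^2 + k2 r^4 + k3 r^6) is strictly increasing, and it does so by sampling, so it can miss violations between samples. The function name and parameters are hypothetical and not part of OpenCV.

```rust
/// Returns true if the distorted radius is strictly increasing on the
/// sampled grid over [0, r_max], where r is measured in normalized
/// camera coordinates.
fn radial_map_is_increasing(k: [f64; 3], r_max: f64, samples: usize) -> bool {
    let distorted = |r: f64| {
        let r2 = r * r;
        r * (1.0 + k[0] * r2 + k[1] * r2 * r2 + k[2] * r2 * r2 * r2)
    };
    let mut prev = distorted(0.0);
    for i in 1..=samples {
        let r = r_max * i as f64 / samples as f64;
        let cur = distorted(r);
        if cur <= prev {
            // The mapping folds back on itself, so it is not bijective.
            return false;
        }
        prev = cur;
    }
    true
}
```

A sensible `r_max` is the largest normalized radius that occurs in the image, for example the distance from the principal point to the farthest image corner divided by the focal length.
*/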

//!

//!

//!

//!

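//! In some cases, the image sensor may be tilted in order to focus an oblique plane in front of the
//! camera (Scheimpflug principle). This can be useful for particle image velocimetry (PIV) or
//! triangulation with a laser fan. The tilt causes a perspective distortion of $x''$ and
//! $y''$. This distortion can be modeled in the following way, see e.g. [Louhichi07](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Louhichi07).
//!
//! $$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} f_x x''' + c_x \\ f_y y''' + c_y \end{bmatrix},$$
//!
//! where
//!
//! $$s \begin{bmatrix} x''' \\ y''' \\ 1 \end{bmatrix} = \begin{bmatrix} R_{33}(\tau_x, \tau_y) & 0 & -R_{13}(\tau_x, \tau_y) \\ 0 & R_{33}(\tau_x, \tau_y) & -R_{23}(\tau_x, \tau_y) \\ 0 & 0 & 1 \end{bmatrix} R(\tau_x, \tau_y) \begin{bmatrix} x'' \\ y'' \\ 1 \end{bmatrix}$$
//!
//! and the matrix $R(\tau_x, \tau_y)$ is defined by two rotations with angular parameter
//! $\tau_x$ and $\tau_y$, respectively,
//!
//! $$R(\tau_x, \tau_y) = \begin{bmatrix} \cos(\tau_y) & 0 & -\sin(\tau_y) \\ 0 & 1 & 0 \\ \sin(\tau_y) & 0 & \cos(\tau_y) \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos(\tau_x) & \sin(\tau_x) \\ 0 & -\sin(\tau_x) & \cos(\tau_x) \end{bmatrix} = \begin{bmatrix} \cos(\tau_y) & \sin(\tau_y)\sin(\tau_x) & -\sin(\tau_y)\cos(\tau_x) \\ 0 & \cos(\tau_x) & \sin(\tau_x) \\ \sin(\tau_y) & -\cos(\tau_y)\sin(\tau_x) & \cos(\tau_y)\cos(\tau_x) \end{bmatrix}.$$
//!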

//! In the functions below the coefficients are passed or returned as
//!
//! $$(k_1, k_2, p_1, p_2[, k_3[, k_4, k_5, k_6 [, s_1, s_2, s_3, s_4[, \tau_x, \tau_y]]]])$$
//!
//! vector. That is, if the vector contains four elements, it means that $k_3 = 0$. The distortion
//! coefficients do not depend on the scene viewed. Thus, they also belong to the intrinsic camera
//! parameters. And they remain the same regardless of the captured image resolution. If, for example, a
//! camera has been calibrated on images of 320 x 240 resolution, exactly the same distortion
//! coefficients can be used for 640 x 480 images from the same camera while $f_x$, $f_y$,
//! $c_x$, and $c_y$ need to be scaled appropriately.
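/*!
The nested optional groups in the coefficient vector mean that only vectors of 4, 5, 8, 12 or 14 elements are meaningful, with every omitted coefficient treated as zero. The following sketch shows one way to normalize such a vector to its full 14-element form in plain Rust. The function `expand_dist_coeffs` is a hypothetical helper written for this illustration; it is not provided by this module.

```rust
/// Expands a distortion coefficient slice laid out as
/// (k1, k2, p1, p2[, k3[, k4, k5, k6[, s1, s2, s3, s4[, tau_x, tau_y]]]])
/// to all 14 coefficients, filling the omitted ones with zero.
/// Returns `None` for lengths that do not match the layout.
fn expand_dist_coeffs(coeffs: &[f64]) -> Option<[f64; 14]> {
    match coeffs.len() {
        4 | 5 | 8 | 12 | 14 => {
            let mut full = [0.0_f64; 14];
            full[..coeffs.len()].copy_from_slice(coeffs);
            Some(full)
        }
        _ => None,
    }
}
```

With a four-element input, index 4 of the result (the slot of k3) is zero, which is the case mentioned in the text.
*/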

//!

//! The functions below use the above model to do the following:

//!

//! * Project 3D points to the image plane given intrinsic and extrinsic parameters.

//! * Compute extrinsic parameters given intrinsic parameters, a few 3D points, and their

//! projections.

//! * Estimate intrinsic and extrinsic camera parameters from several views of a known calibration

//! pattern (every view is described by several 3D-2D point correspondences).

//! * Estimate the relative position and orientation of the stereo camera "heads" and compute the

//! *rectification* transformation that makes the camera optical axes parallel.

//!

//! <B> Homogeneous Coordinates </B><br>

//! Homogeneous coordinates are a system of coordinates used in projective geometry. They make it
//! possible to represent points at infinity by finite coordinates, and they simplify formulas when compared
//! to their Cartesian counterparts, e.g. they have the advantage that affine transformations can be
//! expressed as linear homogeneous transformations.

//!

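//! One obtains the homogeneous vector $P_h$ by appending a 1 to an n-dimensional Cartesian
//! vector $P$, e.g. for a 3D Cartesian vector the mapping $P \rightarrow P_h$ is:
//!
//! $$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} \rightarrow \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}.$$
//!
//! For the inverse mapping $P_h \rightarrow P$, one divides all elements of the homogeneous vector
//! by its last element, e.g. for a 3D homogeneous vector one gets its 2D Cartesian counterpart by:
//!
//! $$\begin{bmatrix} X \\ Y \\ W \end{bmatrix} \rightarrow \begin{bmatrix} X / W \\ Y / W \end{bmatrix},$$
//!
//! if $W \ne 0$.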

//!

//! Due to this mapping, all multiples $k P_h$, for $k \ne 0$, of a homogeneous point represent
//! the same point $P_h$. An intuitive understanding of this property is that under a projective
//! transformation, all multiples of $P_h$ are mapped to the same point. This is the physical
//! observation one makes for pinhole cameras, as all points along a ray through the camera's pinhole are
//! projected to the same image point, e.g. all points along the red ray in the image of the pinhole
//! camera model above would be mapped to the same image coordinate. This property is also the source
//! of the scale ambiguity $s$ in the equation of the pinhole camera model.
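/*!
The two mappings and the scale invariance described above can be shown with a small plain Rust sketch. The helper names `to_homogeneous` and `from_homogeneous` are assumptions made for this illustration and do not refer to functions of this module.

```rust
/// Appends a 1 to a 3D Cartesian vector: (X, Y, Z) -> (X, Y, Z, 1).
fn to_homogeneous(p: [f64; 3]) -> [f64; 4] {
    [p[0], p[1], p[2], 1.0]
}

/// Divides a 3D homogeneous vector by its last element:
/// (X, Y, W) -> (X / W, Y / W). Returns `None` when W is zero,
/// which corresponds to a point at infinity.
fn from_homogeneous(p: [f64; 3]) -> Option<[f64; 2]> {
    if p[2] == 0.0 {
        None
    } else {
        Some([p[0] / p[2], p[1] / p[2]])
    }
}
```

For example, `[4.0, 6.0, 2.0]` and its multiple `[8.0, 12.0, 4.0]` both convert to the Cartesian point `[2.0, 3.0]`.
*/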

//!

//! As mentioned, by using homogeneous coordinates we can express any change of basis parameterized by
//! $R$ and $t$ as a linear transformation, e.g. the change of basis from coordinate system
//! 0 to coordinate system 1 becomes:
//!
//! $$P_1 = R P_0 + t \rightarrow P_{h_1} = \begin{bmatrix} R & t \\ 0 & 1 \end{bmatrix} P_{h_0}.$$
//!
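/*!
To make the change of basis concrete, the sketch below builds the 4-by-4 homogeneous matrix from a rotation and a translation and applies it to a homogeneous point. It is written in plain Rust with fixed-size arrays; `rigid_transform` and `apply` are hypothetical helper names used only in this example.

```rust
/// Builds the 4x4 homogeneous transformation [[R, t], [0, 1]].
fn rigid_transform(r: [[f64; 3]; 3], t: [f64; 3]) -> [[f64; 4]; 4] {
    let mut m = [[0.0_f64; 4]; 4];
    for i in 0..3 {
        for j in 0..3 {
            m[i][j] = r[i][j];
        }
        m[i][3] = t[i];
    }
    m[3][3] = 1.0;
    m
}

/// Multiplies a 4x4 matrix with a homogeneous 4-vector.
fn apply(m: [[f64; 4]; 4], p: [f64; 4]) -> [f64; 4] {
    let mut out = [0.0_f64; 4];
    for i in 0..4 {
        for j in 0..4 {
            out[i] += m[i][j] * p[j];
        }
    }
    out
}
```

Applying the matrix to a point with last element 1 gives the same first three components as computing R P + t directly, and the last element stays 1.
*/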

//!

//! Note:

//! * Many functions in this module take a camera intrinsic matrix as an input parameter. Although all

//! functions assume the same structure of this parameter, they may name it differently. The

//! parameter's description, however, will be clear in that a camera intrinsic matrix with the structure

//! shown above is required.

//! * A calibration sample for 3 cameras in a horizontal position can be found at

//! opencv_source_code/samples/cpp/3calibration.cpp

//! * A calibration sample based on a sequence of images can be found at

//! opencv_source_code/samples/cpp/calibration.cpp

//! * A calibration sample in order to do 3D reconstruction can be found at

//! opencv_source_code/samples/cpp/build3dmodel.cpp

//! * A calibration example on stereo calibration can be found at

//! opencv_source_code/samples/cpp/stereo_calib.cpp

//! * A calibration example on stereo matching can be found at

//! opencv_source_code/samples/cpp/stereo_match.cpp

//! * (Python) A camera calibration sample can be found at

//! opencv_source_code/samples/python/calibrate.py

//! # Fisheye camera model

//!

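//! Definitions: Let P be a point in 3D of coordinates X in the world reference frame (stored in the
//! matrix X). The coordinate vector of P in the camera reference frame is:
//!
//! $$Xc = R X + T$$
//!
//! where R is the rotation matrix corresponding to the rotation vector om: R = rodrigues(om); call x, y
//! and z the 3 coordinates of Xc:
//!
//! $$\begin{array}{l} x = Xc_1 \\ y = Xc_2 \\ z = Xc_3 \end{array}$$
//!
//! The pinhole projection coordinates of P are [a; b] where
//!
//! $$\begin{array}{l} a = x / z \ \text{and} \ b = y / z \\ r^2 = a^2 + b^2 \\ \theta = \operatorname{atan}(r) \end{array}$$
//!
//! Fisheye distortion:
//!
//! $$\theta_d = \theta (1 + k_1 \theta^2 + k_2 \theta^4 + k_3 \theta^6 + k_4 \theta^8)$$
//!
//! The distorted point coordinates are [x'; y'] where
//!
//! $$\begin{array}{l} x' = (\theta_d / r) a \\ y' = (\theta_d / r) b \end{array}$$
//!
//! Finally, conversion into pixel coordinates: the final pixel coordinate vector is [u; v], where:
//!
//! $$\begin{array}{l} u = f_x (x' + \alpha y') + c_x \\ v = f_y y' + c_y \end{array}$$
//!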
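/*!
The fisheye steps above can be chained into a single function, sketched here in plain Rust. This is an illustration of the formulas and not the OpenCV implementation. The name `fisheye_project` and its parameters are assumptions, and the handling of the optical axis (where r is zero and the ratio theta_d / r is replaced by its limit 1) is a choice made for this sketch.

```rust
/// Projects a point given in the camera frame with the fisheye model.
/// `k` holds k1..k4, `f` is (fx, fy), `c` is (cx, cy), `alpha` is the skew.
/// Returns `None` when z is zero, where a and b are undefined.
fn fisheye_project(
    xc: [f64; 3],
    k: [f64; 4],
    f: (f64, f64),
    c: (f64, f64),
    alpha: f64,
) -> Option<(f64, f64)> {
    let [x, y, z] = xc;
    if z == 0.0 {
        return None;
    }
    // Pinhole projection and angle of the incoming ray.
    let (a, b) = (x / z, y / z);
    let r = (a * a + b * b).sqrt();
    let theta = r.atan();
    // Fisheye distortion polynomial in theta.
    let t2 = theta * theta;
    let theta_d = theta
        * (1.0 + k[0] * t2 + k[1] * t2 * t2 + k[2] * t2 * t2 * t2 + k[3] * t2 * t2 * t2 * t2);
    // Assumption: near the optical axis use the limit of theta_d / r, which is 1.
    let scale = if r > 1e-12 { theta_d / r } else { 1.0 };
    let (xp, yp) = (scale * a, scale * b);
    // Conversion into pixel coordinates.
    Some((f.0 * (xp + alpha * yp) + c.0, f.1 * yp + c.1))
}
```

A point on the optical axis projects to the principal point (cx, cy), which is a quick way to sanity-check the parameters.
*/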

//! Summary:

//! Generic camera model [Kannala2006](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Kannala2006) with perspective projection and without distortion correction

//!

//! # C API

use crate::{mod_prelude::*, core, sys, types};

pub mod prelude {

pub use { super::LMSolver_CallbackConst, super::LMSolver_Callback, super::LMSolverConst, super::LMSolver, super::StereoMatcherConst, super::StereoMatcher, super::StereoBMConst, super::StereoBM, super::StereoSGBMConst, super::StereoSGBM };

}

pub const CALIB_CB_ACCURACY: i32 = 32;

pub const CALIB_CB_ADAPTIVE_THRESH: i32 = 1;

pub const CALIB_CB_ASYMMETRIC_GRID: i32 = 2;

pub const CALIB_CB_CLUSTERING: i32 = 4;

pub const CALIB_CB_EXHAUSTIVE: i32 = 16;

pub const CALIB_CB_FAST_CHECK: i32 = 8;

pub const CALIB_CB_FILTER_QUADS: i32 = 4;

pub const CALIB_CB_LARGER: i32 = 64;

pub const CALIB_CB_MARKER: i32 = 128;

pub const CALIB_CB_NORMALIZE_IMAGE: i32 = 2;

pub const CALIB_CB_SYMMETRIC_GRID: i32 = 1;

pub const CALIB_FIX_ASPECT_RATIO: i32 = 2;

pub const CALIB_FIX_FOCAL_LENGTH: i32 = 16;

pub const CALIB_FIX_INTRINSIC: i32 = 256;

pub const CALIB_FIX_K1: i32 = 32;

pub const CALIB_FIX_K2: i32 = 64;

pub const CALIB_FIX_K3: i32 = 128;

pub const CALIB_FIX_K4: i32 = 2048;

pub const CALIB_FIX_K5: i32 = 4096;

pub const CALIB_FIX_K6: i32 = 8192;

pub const CALIB_FIX_PRINCIPAL_POINT: i32 = 4;

pub const CALIB_FIX_S1_S2_S3_S4: i32 = 65536;

pub const CALIB_FIX_TANGENT_DIST: i32 = 2097152;

pub const CALIB_FIX_TAUX_TAUY: i32 = 524288;

/// On-line Hand-Eye Calibration [Andreff99](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Andreff99)

pub const CALIB_HAND_EYE_ANDREFF: i32 = 3;

/// Hand-Eye Calibration Using Dual Quaternions [Daniilidis98](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Daniilidis98)

pub const CALIB_HAND_EYE_DANIILIDIS: i32 = 4;

/// Hand-eye Calibration [Horaud95](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Horaud95)

pub const CALIB_HAND_EYE_HORAUD: i32 = 2;

/// Robot Sensor Calibration: Solving AX = XB on the Euclidean Group [Park94](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Park94)

pub const CALIB_HAND_EYE_PARK: i32 = 1;

/// A New Technique for Fully Autonomous and Efficient 3D Robotics Hand/Eye Calibration [Tsai89](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Tsai89)

pub const CALIB_HAND_EYE_TSAI: i32 = 0;

pub const CALIB_NINTRINSIC: i32 = 18;

pub const CALIB_RATIONAL_MODEL: i32 = 16384;

/// Simultaneous robot-world and hand-eye calibration using dual-quaternions and kronecker product [Li2010SimultaneousRA](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Li2010SimultaneousRA)

pub const CALIB_ROBOT_WORLD_HAND_EYE_LI: i32 = 1;

/// Solving the robot-world/hand-eye calibration problem using the kronecker product [Shah2013SolvingTR](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Shah2013SolvingTR)

pub const CALIB_ROBOT_WORLD_HAND_EYE_SHAH: i32 = 0;

pub const CALIB_SAME_FOCAL_LENGTH: i32 = 512;

pub const CALIB_THIN_PRISM_MODEL: i32 = 32768;

pub const CALIB_TILTED_MODEL: i32 = 262144;

/// for stereoCalibrate

pub const CALIB_USE_EXTRINSIC_GUESS: i32 = 4194304;

pub const CALIB_USE_INTRINSIC_GUESS: i32 = 1;

/// use LU instead of SVD decomposition for solving. much faster but potentially less precise

pub const CALIB_USE_LU: i32 = 131072;

/// use QR instead of SVD decomposition for solving. Faster but potentially less precise

pub const CALIB_USE_QR: i32 = 1048576;

pub const CALIB_ZERO_DISPARITY: i32 = 1024;

pub const CALIB_ZERO_TANGENT_DIST: i32 = 8;

/// 7-point algorithm

pub const FM_7POINT: i32 = 1;

/// 8-point algorithm

pub const FM_8POINT: i32 = 2;

/// least-median algorithm. 7-point algorithm is used.

pub const FM_LMEDS: i32 = 4;

/// RANSAC algorithm. It needs at least 15 points. 7-point algorithm is used.

pub const FM_RANSAC: i32 = 8;

pub const Fisheye_CALIB_CHECK_COND: i32 = 4;

pub const Fisheye_CALIB_FIX_FOCAL_LENGTH: i32 = 2048;

pub const Fisheye_CALIB_FIX_INTRINSIC: i32 = 256;

pub const Fisheye_CALIB_FIX_K1: i32 = 16;

pub const Fisheye_CALIB_FIX_K2: i32 = 32;

pub const Fisheye_CALIB_FIX_K3: i32 = 64;

pub const Fisheye_CALIB_FIX_K4: i32 = 128;

pub const Fisheye_CALIB_FIX_PRINCIPAL_POINT: i32 = 512;

pub const Fisheye_CALIB_FIX_SKEW: i32 = 8;

pub const Fisheye_CALIB_RECOMPUTE_EXTRINSIC: i32 = 2;

pub const Fisheye_CALIB_USE_INTRINSIC_GUESS: i32 = 1;

pub const Fisheye_CALIB_ZERO_DISPARITY: i32 = 1024;

/// least-median of squares algorithm

pub const LMEDS: i32 = 4;

pub const LOCAL_OPTIM_GC: i32 = 3;

pub const LOCAL_OPTIM_INNER_AND_ITER_LO: i32 = 2;

pub const LOCAL_OPTIM_INNER_LO: i32 = 1;

pub const LOCAL_OPTIM_NULL: i32 = 0;

pub const LOCAL_OPTIM_SIGMA: i32 = 4;

pub const NEIGH_FLANN_KNN: i32 = 0;

pub const NEIGH_FLANN_RADIUS: i32 = 2;

pub const NEIGH_GRID: i32 = 1;

pub const PROJ_SPHERICAL_EQRECT: i32 = 1;

pub const PROJ_SPHERICAL_ORTHO: i32 = 0;

/// RANSAC algorithm

pub const RANSAC: i32 = 8;

/// RHO algorithm

pub const RHO: i32 = 16;

pub const SAMPLING_NAPSAC: i32 = 2;

pub const SAMPLING_PROGRESSIVE_NAPSAC: i32 = 1;

pub const SAMPLING_PROSAC: i32 = 3;

pub const SAMPLING_UNIFORM: i32 = 0;

pub const SCORE_METHOD_LMEDS: i32 = 3;

pub const SCORE_METHOD_MAGSAC: i32 = 2;

pub const SCORE_METHOD_MSAC: i32 = 1;

pub const SCORE_METHOD_RANSAC: i32 = 0;

/// An Efficient Algebraic Solution to the Perspective-Three-Point Problem [Ke17](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Ke17)

pub const SOLVEPNP_AP3P: i32 = 5;

/// **Broken implementation. Using this flag will fall back to EPnP.**

///

/// A Direct Least-Squares (DLS) Method for PnP [hesch2011direct](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_hesch2011direct)

pub const SOLVEPNP_DLS: i32 = 3;

/// EPnP: Efficient Perspective-n-Point Camera Pose Estimation [lepetit2009epnp](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_lepetit2009epnp)

pub const SOLVEPNP_EPNP: i32 = 1;

/// Infinitesimal Plane-Based Pose Estimation [Collins14](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Collins14)

///

/// Object points must be coplanar.

pub const SOLVEPNP_IPPE: i32 = 6;

/// Infinitesimal Plane-Based Pose Estimation [Collins14](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Collins14)

///

/// This is a special case suitable for marker pose estimation.

///

/// 4 coplanar object points must be defined in the following order:

/// - point 0: [-squareLength / 2, squareLength / 2, 0]

/// - point 1: [ squareLength / 2, squareLength / 2, 0]

/// - point 2: [ squareLength / 2, -squareLength / 2, 0]

/// - point 3: [-squareLength / 2, -squareLength / 2, 0]
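/**
The required point order can be generated rather than typed by hand. The sketch below, in plain Rust, returns the four corners in the order listed above; `ippe_square_object_points` is a hypothetical helper introduced for this example and is not part of this module.

```rust
/// Returns the 4 coplanar object points of a square marker centered at the
/// origin, in the order expected by the IPPE_SQUARE method:
/// top-left, top-right, bottom-right, bottom-left (with Z = 0).
fn ippe_square_object_points(square_length: f64) -> [[f64; 3]; 4] {
    let h = square_length / 2.0;
    [
        [-h, h, 0.0],
        [h, h, 0.0],
        [h, -h, 0.0],
        [-h, -h, 0.0],
    ]
}
```

The resulting array would then be converted to whatever point container the PnP call expects.
*/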

pub const SOLVEPNP_IPPE_SQUARE: i32 = 7;

/// Pose refinement using non-linear Levenberg-Marquardt minimization scheme [Madsen04](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Madsen04) [Eade13](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Eade13)

///

/// Initial solution for non-planar "objectPoints" needs at least 6 points and uses the DLT algorithm.

///

/// Initial solution for planar "objectPoints" needs at least 4 points and uses pose from homography decomposition.

pub const SOLVEPNP_ITERATIVE: i32 = 0;

/// Used for count

pub const SOLVEPNP_MAX_COUNT: i32 = 9;

/// Complete Solution Classification for the Perspective-Three-Point Problem [gao2003complete](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_gao2003complete)

pub const SOLVEPNP_P3P: i32 = 2;

/// SQPnP: A Consistently Fast and Globally Optimal Solution to the Perspective-n-Point Problem [Terzakis2020SQPnP](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Terzakis2020SQPnP)

pub const SOLVEPNP_SQPNP: i32 = 8;

/// **Broken implementation. Using this flag will fall back to EPnP.**

///

/// Exhaustive Linearization for Robust Camera Pose and Focal Length Estimation [penate2013exhaustive](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_penate2013exhaustive)

pub const SOLVEPNP_UPNP: i32 = 4;

pub const StereoBM_PREFILTER_NORMALIZED_RESPONSE: i32 = 0;

pub const StereoBM_PREFILTER_XSOBEL: i32 = 1;

pub const StereoMatcher_DISP_SCALE: i32 = 16;

pub const StereoMatcher_DISP_SHIFT: i32 = 4;

pub const StereoSGBM_MODE_HH: i32 = 1;

pub const StereoSGBM_MODE_HH4: i32 = 3;

pub const StereoSGBM_MODE_SGBM: i32 = 0;

pub const StereoSGBM_MODE_SGBM_3WAY: i32 = 2;

/// USAC, accurate settings

pub const USAC_ACCURATE: i32 = 36;

/// USAC algorithm, default settings

pub const USAC_DEFAULT: i32 = 32;

/// USAC, fast settings

pub const USAC_FAST: i32 = 35;

/// USAC, fundamental matrix 8 points

pub const USAC_FM_8PTS: i32 = 34;

/// USAC, runs MAGSAC++

pub const USAC_MAGSAC: i32 = 38;

/// USAC, parallel version

pub const USAC_PARALLEL: i32 = 33;

/// USAC, sorted points, runs PROSAC

pub const USAC_PROSAC: i32 = 37;

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum CirclesGridFinderParameters_GridType {

SYMMETRIC_GRID = 0,

ASYMMETRIC_GRID = 1,

}

opencv_type_enum! { crate::calib3d::CirclesGridFinderParameters_GridType }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum HandEyeCalibrationMethod {

/// A New Technique for Fully Autonomous and Efficient 3D Robotics Hand/Eye Calibration [Tsai89](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Tsai89)

CALIB_HAND_EYE_TSAI = 0,

/// Robot Sensor Calibration: Solving AX = XB on the Euclidean Group [Park94](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Park94)

CALIB_HAND_EYE_PARK = 1,

/// Hand-eye Calibration [Horaud95](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Horaud95)

CALIB_HAND_EYE_HORAUD = 2,

/// On-line Hand-Eye Calibration [Andreff99](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Andreff99)

CALIB_HAND_EYE_ANDREFF = 3,

/// Hand-Eye Calibration Using Dual Quaternions [Daniilidis98](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Daniilidis98)

CALIB_HAND_EYE_DANIILIDIS = 4,

}

opencv_type_enum! { crate::calib3d::HandEyeCalibrationMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum LocalOptimMethod {

LOCAL_OPTIM_NULL = 0,

LOCAL_OPTIM_INNER_LO = 1,

LOCAL_OPTIM_INNER_AND_ITER_LO = 2,

LOCAL_OPTIM_GC = 3,

LOCAL_OPTIM_SIGMA = 4,

}

opencv_type_enum! { crate::calib3d::LocalOptimMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum NeighborSearchMethod {

NEIGH_FLANN_KNN = 0,

NEIGH_GRID = 1,

NEIGH_FLANN_RADIUS = 2,

}

opencv_type_enum! { crate::calib3d::NeighborSearchMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum RobotWorldHandEyeCalibrationMethod {

/// Solving the robot-world/hand-eye calibration problem using the kronecker product [Shah2013SolvingTR](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Shah2013SolvingTR)

CALIB_ROBOT_WORLD_HAND_EYE_SHAH = 0,

/// Simultaneous robot-world and hand-eye calibration using dual-quaternions and kronecker product [Li2010SimultaneousRA](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Li2010SimultaneousRA)

CALIB_ROBOT_WORLD_HAND_EYE_LI = 1,

}

opencv_type_enum! { crate::calib3d::RobotWorldHandEyeCalibrationMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SamplingMethod {

SAMPLING_UNIFORM = 0,

SAMPLING_PROGRESSIVE_NAPSAC = 1,

SAMPLING_NAPSAC = 2,

SAMPLING_PROSAC = 3,

}

opencv_type_enum! { crate::calib3d::SamplingMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum ScoreMethod {

SCORE_METHOD_RANSAC = 0,

SCORE_METHOD_MSAC = 1,

SCORE_METHOD_MAGSAC = 2,

SCORE_METHOD_LMEDS = 3,

}

opencv_type_enum! { crate::calib3d::ScoreMethod }

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum SolvePnPMethod {

/// Pose refinement using non-linear Levenberg-Marquardt minimization scheme [Madsen04](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Madsen04) [Eade13](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Eade13)

///

/// Initial solution for non-planar "objectPoints" needs at least 6 points and uses the DLT algorithm.

///

/// Initial solution for planar "objectPoints" needs at least 4 points and uses pose from homography decomposition.

SOLVEPNP_ITERATIVE = 0,

/// EPnP: Efficient Perspective-n-Point Camera Pose Estimation [lepetit2009epnp](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_lepetit2009epnp)

SOLVEPNP_EPNP = 1,

/// Complete Solution Classification for the Perspective-Three-Point Problem [gao2003complete](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_gao2003complete)

SOLVEPNP_P3P = 2,

/// **Broken implementation. Using this flag will fall back to EPnP.**

///

/// A Direct Least-Squares (DLS) Method for PnP [hesch2011direct](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_hesch2011direct)

SOLVEPNP_DLS = 3,

/// **Broken implementation. Using this flag will fall back to EPnP.**

///

/// Exhaustive Linearization for Robust Camera Pose and Focal Length Estimation [penate2013exhaustive](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_penate2013exhaustive)

SOLVEPNP_UPNP = 4,

/// An Efficient Algebraic Solution to the Perspective-Three-Point Problem [Ke17](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Ke17)

SOLVEPNP_AP3P = 5,

/// Infinitesimal Plane-Based Pose Estimation [Collins14](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Collins14)

///

/// Object points must be coplanar.

SOLVEPNP_IPPE = 6,

/// Infinitesimal Plane-Based Pose Estimation [Collins14](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Collins14)

///

/// This is a special case suitable for marker pose estimation.

///

/// 4 coplanar object points must be defined in the following order:

/// - point 0: [-squareLength / 2, squareLength / 2, 0]

/// - point 1: [ squareLength / 2, squareLength / 2, 0]

/// - point 2: [ squareLength / 2, -squareLength / 2, 0]

/// - point 3: [-squareLength / 2, -squareLength / 2, 0]

SOLVEPNP_IPPE_SQUARE = 7,

/// SQPnP: A Consistently Fast and Globally Optimal Solution to the Perspective-n-Point Problem [Terzakis2020SQPnP](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Terzakis2020SQPnP)

SOLVEPNP_SQPNP = 8,

/// Used for count

SOLVEPNP_MAX_COUNT = 9,

}

opencv_type_enum! { crate::calib3d::SolvePnPMethod }

/// cv::undistort mode

#[repr(C)]

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub enum UndistortTypes {

PROJ_SPHERICAL_ORTHO = 0,

PROJ_SPHERICAL_EQRECT = 1,

}

opencv_type_enum! { crate::calib3d::UndistortTypes }

pub type CirclesGridFinderParameters2 = crate::calib3d::CirclesGridFinderParameters;

/// Computes an RQ decomposition of 3x3 matrices.

///

/// ## Parameters

/// * src: 3x3 input matrix.

/// * mtxR: Output 3x3 upper-triangular matrix.

/// * mtxQ: Output 3x3 orthogonal matrix.

/// * Qx: Optional output 3x3 rotation matrix around x-axis.

/// * Qy: Optional output 3x3 rotation matrix around y-axis.

/// * Qz: Optional output 3x3 rotation matrix around z-axis.

///

/// The function computes an RQ decomposition using the given rotations. This function is used in

/// #decomposeProjectionMatrix to decompose the left 3x3 submatrix of a projection matrix into a camera

/// and a rotation matrix.

///

/// It optionally returns three rotation matrices, one for each axis, and the three Euler angles in

/// degrees (as the return value) that could be used in OpenGL. Note, there is always more than one

/// sequence of rotations about the three principal axes that results in the same orientation of an

/// object, e.g. see [Slabaugh](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Slabaugh). The three returned rotation matrices and the corresponding three Euler angles
/// are only one of the possible solutions.

///

/// ## C++ default parameters

/// * qx: noArray()

/// * qy: noArray()

/// * qz: noArray()

#[inline]

pub fn rq_decomp3x3(src: &dyn core::ToInputArray, mtx_r: &mut dyn core::ToOutputArray, mtx_q: &mut dyn core::ToOutputArray, qx: &mut dyn core::ToOutputArray, qy: &mut dyn core::ToOutputArray, qz: &mut dyn core::ToOutputArray) -> Result<core::Vec3d> {

extern_container_arg!(src);

extern_container_arg!(mtx_r);

extern_container_arg!(mtx_q);

extern_container_arg!(qx);

extern_container_arg!(qy);

extern_container_arg!(qz);

return_send!(via ocvrs_return);

unsafe { sys::cv_RQDecomp3x3_const__InputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR(src.as_raw__InputArray(), mtx_r.as_raw__OutputArray(), mtx_q.as_raw__OutputArray(), qx.as_raw__OutputArray(), qy.as_raw__OutputArray(), qz.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Converts a rotation matrix to a rotation vector or vice versa.

///

/// ## Parameters

/// * src: Input rotation vector (3x1 or 1x3) or rotation matrix (3x3).

/// * dst: Output rotation matrix (3x3) or rotation vector (3x1 or 1x3), respectively.

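/// * jacobian: Optional output Jacobian matrix, 3x9 or 9x3, which is a matrix of partial
/// derivatives of the output array components with respect to the input array components.
///
/// $$\begin{array}{l} \theta \leftarrow \mathrm{norm}(r) \\ r \leftarrow r / \theta \\ R = \cos(\theta) I + (1 - \cos(\theta)) r r^T + \sin(\theta) \begin{bmatrix} 0 & -r_z & r_y \\ r_z & 0 & -r_x \\ -r_y & r_x & 0 \end{bmatrix} \end{array}$$
///
/// The inverse transformation can also be done easily, since
///
/// $$\sin(\theta) \begin{bmatrix} 0 & -r_z & r_y \\ r_z & 0 & -r_x \\ -r_y & r_x & 0 \end{bmatrix} = \frac{R - R^T}{2}$$
///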

/// A rotation vector is a convenient and most compact representation of a rotation matrix (since any

/// rotation matrix has just 3 degrees of freedom). The representation is used in the global 3D geometry

/// optimization procedures like [calibrateCamera], [stereoCalibrate], or [solvePnP] .
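/**
The conversion from a rotation vector to a rotation matrix follows Rodrigues' rotation formula, R = cos(theta) I + (1 - cos(theta)) r r^T + sin(theta) [r]x, where theta is the norm of the rotation vector, r is the normalized axis and [r]x is its skew-symmetric matrix. The sketch below evaluates that formula in plain Rust. It is an illustration and not the code behind this binding; the function name `rodrigues_to_matrix` and the small-angle threshold are assumptions made for this example.

```rust
/// Converts a rotation vector (axis times angle in radians) to a 3x3
/// rotation matrix using Rodrigues' formula.
fn rodrigues_to_matrix(rvec: [f64; 3]) -> [[f64; 3]; 3] {
    let theta = (rvec[0] * rvec[0] + rvec[1] * rvec[1] + rvec[2] * rvec[2]).sqrt();
    // Assumption: treat a (near) zero vector as the identity rotation.
    if theta < 1e-12 {
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]];
    }
    let r = [rvec[0] / theta, rvec[1] / theta, rvec[2] / theta];
    let (c, s) = (theta.cos(), theta.sin());
    // Skew-symmetric cross-product matrix of the unit axis r.
    let skew = [
        [0.0, -r[2], r[1]],
        [r[2], 0.0, -r[0]],
        [-r[1], r[0], 0.0],
    ];
    let mut m = [[0.0_f64; 3]; 3];
    for i in 0..3 {
        for j in 0..3 {
            let eye = if i == j { 1.0 } else { 0.0 };
            m[i][j] = c * eye + (1.0 - c) * r[i] * r[j] + s * skew[i][j];
        }
    }
    m
}
```

A rotation vector of `[0, 0, pi / 2]` yields a quarter turn about the z-axis, which maps the x-axis onto the y-axis.
*/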

///

///

/// Note: More information about the computation of the derivative of a 3D rotation matrix with respect to its exponential coordinate

/// can be found in:

/// - A Compact Formula for the Derivative of a 3-D Rotation in Exponential Coordinates, Guillermo Gallego, Anthony J. Yezzi [Gallego2014ACF](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Gallego2014ACF)

///

///

/// Note: Useful information on SE(3) and Lie Groups can be found in:

/// - A tutorial on SE(3) transformation parameterizations and on-manifold optimization, Jose-Luis Blanco [blanco2010tutorial](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_blanco2010tutorial)

/// - Lie Groups for 2D and 3D Transformation, Ethan Eade [Eade17](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Eade17)

/// - A micro Lie theory for state estimation in robotics, Joan Solà, Jérémie Deray, Dinesh Atchuthan [Sol2018AML](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Sol2018AML)

///

/// ## C++ default parameters

/// * jacobian: noArray()

#[inline]

pub fn rodrigues(src: &dyn core::ToInputArray, dst: &mut dyn core::ToOutputArray, jacobian: &mut dyn core::ToOutputArray) -> Result<()> {

extern_container_arg!(src);

extern_container_arg!(dst);

extern_container_arg!(jacobian);

return_send!(via ocvrs_return);

unsafe { sys::cv_Rodrigues_const__InputArrayR_const__OutputArrayR_const__OutputArrayR(src.as_raw__InputArray(), dst.as_raw__OutputArray(), jacobian.as_raw__OutputArray(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds the camera intrinsic and extrinsic parameters from several views of a calibration pattern.

///

/// This function is an extension of #calibrateCamera with the object-releasing method
/// proposed in [strobl2011iccv](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_strobl2011iccv). In many common cases with inaccurate, unmeasured, roughly planar

/// targets (calibration plates), this method can dramatically improve the precision of the estimated

/// camera parameters. Both the object-releasing method and standard method are supported by this

/// function. Use the parameter **iFixedPoint** for method selection. In the internal implementation,

/// #calibrateCamera is a wrapper for this function.

///

/// ## Parameters

/// * objectPoints: Vector of vectors of calibration pattern points in the calibration pattern

/// coordinate space. See #calibrateCamera for details. If the object-releasing method is to be used,

/// the identical calibration board must be used in each view and it must be fully visible, and all

/// objectPoints[i] must be the same and all points should be roughly close to a plane. **The calibration

/// target has to be rigid, or at least static if the camera (rather than the calibration target) is

/// shifted for grabbing images.**

/// * imagePoints: Vector of vectors of the projections of calibration pattern points. See

/// #calibrateCamera for details.

/// * imageSize: Size of the image used only to initialize the intrinsic camera matrix.

/// * iFixedPoint: The index of the 3D object point in objectPoints[0] to be fixed. It also acts as
/// a switch for calibration method selection. To use the object-releasing method, pass a value
/// in the range [1, objectPoints[0].size()-2]; a value outside this range selects the
/// standard calibration method. When the object-releasing method is used, fixing the top-right
/// corner point of the calibration board grid is usually recommended. According to
/// [strobl2011iccv](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_strobl2011iccv), two other points are also fixed. In this implementation, objectPoints[0].front
/// and objectPoints[0].back.z are used. With the object-releasing method, accurate rvecs, tvecs and
/// newObjPoints are only possible if the coordinates of these three fixed points are accurate enough.

/// * cameraMatrix: Output 3x3 floating-point camera matrix. See #calibrateCamera for details.

/// * distCoeffs: Output vector of distortion coefficients. See #calibrateCamera for details.

/// * rvecs: Output vector of rotation vectors estimated for each pattern view. See #calibrateCamera

/// for details.

/// * tvecs: Output vector of translation vectors estimated for each pattern view.

/// * newObjPoints: The updated output vector of calibration pattern points. The coordinates might

/// be scaled based on three fixed points. The returned coordinates are accurate only if the

/// above-mentioned three fixed points are accurate. If not needed, noArray() can be passed in. This parameter

/// is ignored with the standard calibration method.

/// * stdDeviationsIntrinsics: Output vector of standard deviations estimated for intrinsic parameters.

/// See #calibrateCamera for details.

/// * stdDeviationsExtrinsics: Output vector of standard deviations estimated for extrinsic parameters.

/// See #calibrateCamera for details.

/// * stdDeviationsObjPoints: Output vector of standard deviations estimated for refined coordinates

/// of calibration pattern points. It has the same size and order as objectPoints[0] vector. This

/// parameter is ignored with the standard calibration method.

/// * perViewErrors: Output vector of the RMS re-projection error estimated for each pattern view.

/// * flags: Different flags that may be zero or a combination of some predefined values. See

/// #calibrateCamera for details. If the method of releasing object is used, the calibration time may

/// be much longer. CALIB_USE_QR or CALIB_USE_LU can be used for faster calibration, at the cost of

/// potentially less precise and less stable results in some rare cases.

/// * criteria: Termination criteria for the iterative optimization algorithm.

///

/// ## Returns

/// the overall RMS re-projection error.

///

/// The function estimates the intrinsic camera parameters and extrinsic parameters for each of the

/// views. The algorithm is based on [Zhang2000](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Zhang2000), [BouguetMCT](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_BouguetMCT) and [strobl2011iccv](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_strobl2011iccv). See

/// #calibrateCamera for other detailed explanations.
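///
/// As a rough illustration of the iFixedPoint convention described above, the sketch below picks the
/// top-right corner of a grid and falls back to an out-of-range value (which selects the standard
/// method) when that index is not in [1, n-2]. The helper name `pick_fixed_point` and the row-major,
/// top-row-first point ordering are assumptions made for this example, not part of the OpenCV API.
///
/// ```rust
/// // Hypothetical helper: choose iFixedPoint for a grid of `cols` x `rows` points.
/// // Assumes objectPoints[0] is stored row by row, starting with the top row.
/// fn pick_fixed_point(cols: usize, rows: usize) -> i32 {
///     let n = cols * rows;
///     // The object-releasing range [1, n - 2] is empty for fewer than 3 points,
///     // and a single-column grid has no distinct top-right corner.
///     if n < 3 || cols < 2 {
///         return -1;
///     }
///     // Index of the last point in the first row, i.e. the top-right corner.
///     let top_right = cols - 1;
///     if top_right <= n - 2 {
///         top_right as i32
///     } else {
///         // Out of range: selects the standard calibration method.
///         -1
///     }
/// }
/// ```
///
/// The returned value would then be passed as `i_fixed_point`; for a 9 x 6 grid it is 8.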

/// ## See also

/// calibrateCamera, findChessboardCorners, solvePnP, initCameraMatrix2D, stereoCalibrate, undistort

///

/// ## C++ default parameters

/// * flags: 0

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,DBL_EPSILON)

#[inline]

pub fn calibrate_camera_ro_extended(object_points: &dyn core::ToInputArray, image_points: &dyn core::ToInputArray, image_size: core::Size, i_fixed_point: i32, camera_matrix: &mut dyn core::ToInputOutputArray, dist_coeffs: &mut dyn core::ToInputOutputArray, rvecs: &mut dyn core::ToOutputArray, tvecs: &mut dyn core::ToOutputArray, new_obj_points: &mut dyn core::ToOutputArray, std_deviations_intrinsics: &mut dyn core::ToOutputArray, std_deviations_extrinsics: &mut dyn core::ToOutputArray, std_deviations_obj_points: &mut dyn core::ToOutputArray, per_view_errors: &mut dyn core::ToOutputArray, flags: i32, criteria: core::TermCriteria) -> Result<f64> {

extern_container_arg!(object_points);

extern_container_arg!(image_points);

extern_container_arg!(camera_matrix);

extern_container_arg!(dist_coeffs);

extern_container_arg!(rvecs);

extern_container_arg!(tvecs);

extern_container_arg!(new_obj_points);

extern_container_arg!(std_deviations_intrinsics);

extern_container_arg!(std_deviations_extrinsics);

extern_container_arg!(std_deviations_obj_points);

extern_container_arg!(per_view_errors);

return_send!(via ocvrs_return);

unsafe { sys::cv_calibrateCameraRO_const__InputArrayR_const__InputArrayR_Size_int_const__InputOutputArrayR_const__InputOutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_int_TermCriteria(object_points.as_raw__InputArray(), image_points.as_raw__InputArray(), image_size.opencv_as_extern(), i_fixed_point, camera_matrix.as_raw__InputOutputArray(), dist_coeffs.as_raw__InputOutputArray(), rvecs.as_raw__OutputArray(), tvecs.as_raw__OutputArray(), new_obj_points.as_raw__OutputArray(), std_deviations_intrinsics.as_raw__OutputArray(), std_deviations_extrinsics.as_raw__OutputArray(), std_deviations_obj_points.as_raw__OutputArray(), per_view_errors.as_raw__OutputArray(), flags, criteria.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds the camera intrinsic and extrinsic parameters from several views of a calibration pattern.

///

/// This function is an extension of #calibrateCamera with the method of releasing object which was

/// proposed in [strobl2011iccv](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_strobl2011iccv). In many common cases with inaccurate, unmeasured, roughly planar

/// targets (calibration plates), this method can dramatically improve the precision of the estimated

/// camera parameters. Both the object-releasing method and standard method are supported by this

/// function. Use the parameter **iFixedPoint** for method selection. In the internal implementation,

/// #calibrateCamera is a wrapper for this function.

///

/// ## Parameters

/// * objectPoints: Vector of vectors of calibration pattern points in the calibration pattern

/// coordinate space. See #calibrateCamera for details. If the method of releasing object is to be used,

/// the identical calibration board must be used in each view and it must be fully visible, and all

/// objectPoints[i] must be the same and all points should be roughly close to a plane. **The calibration

/// target has to be rigid, or at least static if the camera (rather than the calibration target) is

/// shifted for grabbing images.**

/// * imagePoints: Vector of vectors of the projections of calibration pattern points. See

/// #calibrateCamera for details.

/// * imageSize: Size of the image used only to initialize the intrinsic camera matrix.

/// * iFixedPoint: The index of the 3D object point in objectPoints[0] to be fixed. It also acts as

/// a switch for calibration method selection. To use the object-releasing method, pass a value

/// in the range [1, objectPoints[0].size()-2]; any value outside this range selects the standard

/// calibration method. When the object-releasing method is used, fixing the top-right corner point

/// of the calibration board grid is usually recommended. According to

/// [strobl2011iccv](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_strobl2011iccv), two other points are also fixed. In this implementation, objectPoints[0].front

/// and objectPoints[0].back.z are used. With the object-releasing method, accurate rvecs, tvecs and

/// newObjPoints are only possible if the coordinates of these three fixed points are accurate enough.

/// * cameraMatrix: Output 3x3 floating-point camera matrix. See #calibrateCamera for details.

/// * distCoeffs: Output vector of distortion coefficients. See #calibrateCamera for details.

/// * rvecs: Output vector of rotation vectors estimated for each pattern view. See #calibrateCamera

/// for details.

/// * tvecs: Output vector of translation vectors estimated for each pattern view.

/// * newObjPoints: The updated output vector of calibration pattern points. The coordinates might

/// be scaled based on three fixed points. The returned coordinates are accurate only if the

/// above-mentioned three fixed points are accurate. If not needed, noArray() can be passed in. This parameter

/// is ignored with the standard calibration method.

/// * stdDeviationsIntrinsics: Output vector of standard deviations estimated for intrinsic parameters.

/// See #calibrateCamera for details.

/// * stdDeviationsExtrinsics: Output vector of standard deviations estimated for extrinsic parameters.

/// See #calibrateCamera for details.

/// * stdDeviationsObjPoints: Output vector of standard deviations estimated for refined coordinates

/// of calibration pattern points. It has the same size and order as objectPoints[0] vector. This

/// parameter is ignored with the standard calibration method.

/// * perViewErrors: Output vector of the RMS re-projection error estimated for each pattern view.

/// * flags: Different flags that may be zero or a combination of some predefined values. See

/// #calibrateCamera for details. If the method of releasing object is used, the calibration time may

/// be much longer. CALIB_USE_QR or CALIB_USE_LU can be used for faster calibration, at the cost of

/// potentially less precise and less stable results in some rare cases.

/// * criteria: Termination criteria for the iterative optimization algorithm.

///

/// ## Returns

/// the overall RMS re-projection error.

///

/// The function estimates the intrinsic camera parameters and extrinsic parameters for each of the

/// views. The algorithm is based on [Zhang2000](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Zhang2000), [BouguetMCT](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_BouguetMCT) and [strobl2011iccv](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_strobl2011iccv). See

/// #calibrateCamera for other detailed explanations.
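///
/// The input requirements of the object-releasing method (identical objectPoints[i] in every view,
/// points roughly close to a plane) can be checked before calling the function. The following is a
/// minimal sketch under stated assumptions: the names `Point3`, `views_are_identical` and `max_abs_z`
/// are hypothetical, and planarity is measured as the largest |z|, which assumes the board is
/// modelled near the z = 0 plane.
///
/// ```rust
/// // Hypothetical point type for this example: (x, y, z) in pattern coordinates.
/// type Point3 = [f32; 3];
///
/// // True when every view carries exactly the same object points.
/// fn views_are_identical(object_points: &[Vec<Point3>]) -> bool {
///     object_points.windows(2).all(|pair| pair[0] == pair[1])
/// }
///
/// // Largest absolute Z value in one view; small values mean "roughly planar"
/// // under the assumption that the board lies near the z = 0 plane.
/// fn max_abs_z(view: &[Point3]) -> f32 {
///     view.iter().map(|p| p[2].abs()).fold(0.0, f32::max)
/// }
/// ```
///
/// What counts as "small enough" for `max_abs_z` depends on the units of the pattern and is left to
/// the caller.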

/// ## See also

/// calibrateCamera, findChessboardCorners, solvePnP, initCameraMatrix2D, stereoCalibrate, undistort

///

/// ## C++ default parameters

/// * flags: 0

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,DBL_EPSILON)

#[inline]

pub fn calibrate_camera_ro(object_points: &dyn core::ToInputArray, image_points: &dyn core::ToInputArray, image_size: core::Size, i_fixed_point: i32, camera_matrix: &mut dyn core::ToInputOutputArray, dist_coeffs: &mut dyn core::ToInputOutputArray, rvecs: &mut dyn core::ToOutputArray, tvecs: &mut dyn core::ToOutputArray, new_obj_points: &mut dyn core::ToOutputArray, flags: i32, criteria: core::TermCriteria) -> Result<f64> {

extern_container_arg!(object_points);

extern_container_arg!(image_points);

extern_container_arg!(camera_matrix);

extern_container_arg!(dist_coeffs);

extern_container_arg!(rvecs);

extern_container_arg!(tvecs);

extern_container_arg!(new_obj_points);

return_send!(via ocvrs_return);

unsafe { sys::cv_calibrateCameraRO_const__InputArrayR_const__InputArrayR_Size_int_const__InputOutputArrayR_const__InputOutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_int_TermCriteria(object_points.as_raw__InputArray(), image_points.as_raw__InputArray(), image_size.opencv_as_extern(), i_fixed_point, camera_matrix.as_raw__InputOutputArray(), dist_coeffs.as_raw__InputOutputArray(), rvecs.as_raw__OutputArray(), tvecs.as_raw__OutputArray(), new_obj_points.as_raw__OutputArray(), flags, criteria.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds the camera intrinsic and extrinsic parameters from several views of a calibration

/// pattern.

///

/// ## Parameters

/// * objectPoints: In the new interface it is a vector of vectors of calibration pattern points in

/// the calibration pattern coordinate space (e.g. std::vector<std::vector<cv::Vec3f>>). The outer

/// vector contains as many elements as the number of pattern views. If the same calibration pattern

/// is shown in each view and it is fully visible, all the vectors will be the same. Although, it is

/// possible to use partially occluded patterns or even different patterns in different views. Then,

/// the vectors will be different. Although the points are 3D, they all lie in the calibration pattern's

/// XY coordinate plane (thus 0 in the Z-coordinate), if the used calibration pattern is a planar rig.

/// In the old interface all the vectors of object points from different views are concatenated

/// together.

/// * imagePoints: In the new interface it is a vector of vectors of the projections of calibration

/// pattern points (e.g. std::vector<std::vector<cv::Vec2f>>). imagePoints.size() and

/// objectPoints.size(), and imagePoints[i].size() and objectPoints[i].size() for each i, must be equal,

/// respectively. In the old interface all the vectors of image points from different views are

/// concatenated together.

/// * imageSize: Size of the image used only to initialize the camera intrinsic matrix.

/// * cameraMatrix: Input/output 3x3 floating-point camera intrinsic matrix

/// $A = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$. If [CALIB_USE_INTRINSIC_GUESS]

/// and/or [CALIB_FIX_ASPECT_RATIO], [CALIB_FIX_PRINCIPAL_POINT] or [CALIB_FIX_FOCAL_LENGTH]

/// are specified, some or all of fx, fy, cx, cy must be initialized before calling the function.

/// * distCoeffs: Input/output vector of distortion coefficients

/// $(k_1, k_2, p_1, p_2[, k_3[, k_4, k_5, k_6[, s_1, s_2, s_3, s_4[, \tau_x, \tau_y]]]])$.

/// * rvecs: Output vector of rotation vectors ([Rodrigues]) estimated for each pattern view

/// (e.g. std::vector<cv::Mat>). That is, each i-th rotation vector together with the corresponding

/// i-th translation vector (see the next output parameter description) brings the calibration pattern

/// from the object coordinate space (in which object points are specified) to the camera coordinate

/// space. In more technical terms, the tuple of the i-th rotation and translation vector performs

/// a change of basis from object coordinate space to camera coordinate space. Due to its duality, this

/// tuple is equivalent to the position of the calibration pattern with respect to the camera coordinate

/// space.

/// * tvecs: Output vector of translation vectors estimated for each pattern view, see parameter

/// description above.

/// * stdDeviationsIntrinsics: Output vector of standard deviations estimated for intrinsic

/// parameters. Order of deviations values:

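/// $(f_x, f_y, c_x, c_y, k_1, k_2, p_1, p_2, k_3, k_4, k_5, k_6, s_1, s_2, s_3, s_4, \tau_x, \tau_y)$. If one of the parameters is not estimated, its deviation is equal to zero.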

/// * stdDeviationsExtrinsics: Output vector of standard deviations estimated for extrinsic

/// parameters. Order of deviations values: $(R_0, T_0, \dotsc, R_{M - 1}, T_{M - 1})$ where M is

/// the number of pattern views. $R_i, T_i$ are concatenated 1x3 vectors.

/// * perViewErrors: Output vector of the RMS re-projection error estimated for each pattern view.

/// * flags: Different flags that may be zero or a combination of the following values:

/// * [CALIB_USE_INTRINSIC_GUESS] cameraMatrix contains valid initial values of

/// fx, fy, cx, cy that are optimized further. Otherwise, (cx, cy) is initially set to the image

/// center (imageSize is used), and focal distances are computed in a least-squares fashion.

/// Note that if intrinsic parameters are known, there is no need to use this function just to

/// estimate extrinsic parameters. Use [solvePnP] instead.

/// * [CALIB_FIX_PRINCIPAL_POINT] The principal point is not changed during the global

/// optimization. It stays at the center or at a different location specified when

/// [CALIB_USE_INTRINSIC_GUESS] is set too.

/// * [CALIB_FIX_ASPECT_RATIO] The functions consider only fy as a free parameter. The

/// ratio fx/fy stays the same as in the input cameraMatrix. When

/// [CALIB_USE_INTRINSIC_GUESS] is not set, the actual input values of fx and fy are

/// ignored, only their ratio is computed and used further.

/// * [CALIB_ZERO_TANGENT_DIST] Tangential distortion coefficients $(p_1, p_2)$ are set

/// to zero and stay zero.

/// * [CALIB_FIX_FOCAL_LENGTH] The focal length is not changed during the global optimization if

/// [CALIB_USE_INTRINSIC_GUESS] is set.

/// * [CALIB_FIX_K1],..., [CALIB_FIX_K6] The corresponding radial distortion

/// coefficient is not changed during the optimization. If [CALIB_USE_INTRINSIC_GUESS] is

/// set, the coefficient from the supplied distCoeffs matrix is used. Otherwise, it is set to 0.

/// * [CALIB_RATIONAL_MODEL] Coefficients k4, k5, and k6 are enabled. To provide the

/// backward compatibility, this extra flag should be explicitly specified to make the

/// calibration function use the rational model and return 8 coefficients or more.

/// * [CALIB_THIN_PRISM_MODEL] Coefficients s1, s2, s3 and s4 are enabled. To provide the

/// backward compatibility, this extra flag should be explicitly specified to make the

/// calibration function use the thin prism model and return 12 coefficients or more.

/// * [CALIB_FIX_S1_S2_S3_S4] The thin prism distortion coefficients are not changed during

/// the optimization. If [CALIB_USE_INTRINSIC_GUESS] is set, the coefficient from the

/// supplied distCoeffs matrix is used. Otherwise, it is set to 0.

/// * [CALIB_TILTED_MODEL] Coefficients tauX and tauY are enabled. To provide the

/// backward compatibility, this extra flag should be explicitly specified to make the

/// calibration function use the tilted sensor model and return 14 coefficients.

/// * [CALIB_FIX_TAUX_TAUY] The coefficients of the tilted sensor model are not changed during

/// the optimization. If [CALIB_USE_INTRINSIC_GUESS] is set, the coefficient from the

/// supplied distCoeffs matrix is used. Otherwise, it is set to 0.

/// * criteria: Termination criteria for the iterative optimization algorithm.

///

/// ## Returns

/// the overall RMS re-projection error.

///

/// The function estimates the intrinsic camera parameters and extrinsic parameters for each of the

/// views. The algorithm is based on [Zhang2000](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Zhang2000) and [BouguetMCT](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_BouguetMCT). The coordinates of 3D object

/// points and their corresponding 2D projections in each view must be specified. That may be achieved

/// by using an object with known geometry and easily detectable feature points. Such an object is

/// called a calibration rig or calibration pattern, and OpenCV has built-in support for a chessboard as

/// a calibration rig (see [findChessboardCorners]). Currently, initialization of intrinsic

/// parameters (when [CALIB_USE_INTRINSIC_GUESS] is not set) is only implemented for planar calibration

/// patterns (where Z-coordinates of the object points must be all zeros). 3D calibration rigs can also

/// be used as long as initial cameraMatrix is provided.
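///
/// To make the stdDeviationsExtrinsics layout concrete, here is a small sketch that slices a flat
/// buffer into per-view values. It assumes the output has been copied into a `&[f64]` ordered as
/// (R_0, T_0, ..., R_{M-1}, T_{M-1}) with three values per vector; the helper name `view_std_devs`
/// is hypothetical and not part of the bindings.
///
/// ```rust
/// // Returns (rotation, translation) standard deviations for `view`, or None
/// // when the buffer is too short. Assumes 6 values per view: R_i then T_i.
/// fn view_std_devs(flat: &[f64], view: usize) -> Option<([f64; 3], [f64; 3])> {
///     let chunk = flat.get(view * 6..view * 6 + 6)?;
///     Some((
///         [chunk[0], chunk[1], chunk[2]],
///         [chunk[3], chunk[4], chunk[5]],
///     ))
/// }
/// ```
///
/// With M views the buffer is expected to hold 6 * M values, so any `view >= M` yields `None`.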

///

/// The algorithm performs the following steps:

///

/// * Compute the initial intrinsic parameters (the option only available for planar calibration

/// patterns) or read them from the input parameters. The distortion coefficients are all set to

/// zeros initially unless some of CALIB_FIX_K? are specified.

///

/// * Estimate the initial camera pose as if the intrinsic parameters were already known. This is

/// done using [solvePnP].

///

/// * Run the global Levenberg-Marquardt optimization algorithm to minimize the reprojection error,

/// that is, the total sum of squared distances between the observed feature points imagePoints and

/// the projected (using the current estimates for camera parameters and the poses) object points

/// objectPoints. See [projectPoints] for details.
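///
/// The quantity minimized in the last step can be sketched in plain Rust. This is an illustration
/// only: it uses a distortion-free pinhole projection of points already expressed in the camera
/// frame, and it takes the RMS as the square root of the mean squared distance over all points,
/// which is an assumption about the normalization. The names `project` and `rms_error` are
/// hypothetical.
///
/// ```rust
/// // Distortion-free pinhole projection of a camera-frame point (x, y, z), z != 0.
/// fn project(p: [f64; 3], fx: f64, fy: f64, cx: f64, cy: f64) -> [f64; 2] {
///     [fx * p[0] / p[2] + cx, fy * p[1] / p[2] + cy]
/// }
///
/// // Root of the mean squared distance between observed and projected pixels.
/// // Both slices are assumed to be non-empty and of equal length.
/// fn rms_error(observed: &[[f64; 2]], projected: &[[f64; 2]]) -> f64 {
///     let sum_sq: f64 = observed
///         .iter()
///         .zip(projected)
///         .map(|(o, p)| (o[0] - p[0]).powi(2) + (o[1] - p[1]).powi(2))
///         .sum();
///     (sum_sq / observed.len() as f64).sqrt()
/// }
/// ```
///
/// The actual optimization also models lens distortion and the per-view pose; see [projectPoints]
/// for the full projection.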

///

///

/// Note:

/// If you use a non-square (i.e. non-N-by-N) grid and [findChessboardCorners] for calibration,

/// and [calibrateCamera] returns bad values (zero distortion coefficients, $c_x$ and

/// $c_y$ very far from the image center, and/or large differences between $f_x$ and

/// $f_y$ (ratios of 10:1 or more)), then you are probably using patternSize=cvSize(rows,cols)

/// instead of using patternSize=cvSize(cols,rows) in [findChessboardCorners].
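///
/// A matching set of object points for a planar chessboard can be generated with the column index
/// running fastest, which mirrors the (cols, rows) order mentioned in the note. This is a sketch
/// under assumptions: the helper name `chessboard_object_points`, the `square` spacing parameter and
/// the row-major ordering are choices made for this example.
///
/// ```rust
/// // Object points for `cols` x `rows` inner corners on the Z = 0 plane,
/// // stored row by row and spaced `square` units apart.
/// fn chessboard_object_points(cols: usize, rows: usize, square: f32) -> Vec<[f32; 3]> {
///     let mut points = Vec::with_capacity(cols * rows);
///     for r in 0..rows {
///         for c in 0..cols {
///             // X follows the column index, Y follows the row index.
///             points.push([c as f32 * square, r as f32 * square, 0.0]);
///         }
///     }
///     points
/// }
/// ```
///
/// For a 9 x 6 board the ninth point (index 8) ends the first row, and index 9 starts the second.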

/// ## See also

/// calibrateCameraRO, findChessboardCorners, solvePnP, initCameraMatrix2D, stereoCalibrate,

/// undistort

///

/// ## C++ default parameters

/// * flags: 0

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,DBL_EPSILON)

#[inline]

pub fn calibrate_camera_extended(object_points: &dyn core::ToInputArray, image_points: &dyn core::ToInputArray, image_size: core::Size, camera_matrix: &mut dyn core::ToInputOutputArray, dist_coeffs: &mut dyn core::ToInputOutputArray, rvecs: &mut dyn core::ToOutputArray, tvecs: &mut dyn core::ToOutputArray, std_deviations_intrinsics: &mut dyn core::ToOutputArray, std_deviations_extrinsics: &mut dyn core::ToOutputArray, per_view_errors: &mut dyn core::ToOutputArray, flags: i32, criteria: core::TermCriteria) -> Result<f64> {

extern_container_arg!(object_points);

extern_container_arg!(image_points);

extern_container_arg!(camera_matrix);

extern_container_arg!(dist_coeffs);

extern_container_arg!(rvecs);

extern_container_arg!(tvecs);

extern_container_arg!(std_deviations_intrinsics);

extern_container_arg!(std_deviations_extrinsics);

extern_container_arg!(per_view_errors);

return_send!(via ocvrs_return);

unsafe { sys::cv_calibrateCamera_const__InputArrayR_const__InputArrayR_Size_const__InputOutputArrayR_const__InputOutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_const__OutputArrayR_int_TermCriteria(object_points.as_raw__InputArray(), image_points.as_raw__InputArray(), image_size.opencv_as_extern(), camera_matrix.as_raw__InputOutputArray(), dist_coeffs.as_raw__InputOutputArray(), rvecs.as_raw__OutputArray(), tvecs.as_raw__OutputArray(), std_deviations_intrinsics.as_raw__OutputArray(), std_deviations_extrinsics.as_raw__OutputArray(), per_view_errors.as_raw__OutputArray(), flags, criteria.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Finds the camera intrinsic and extrinsic parameters from several views of a calibration

/// pattern.

///

/// ## Parameters

/// * objectPoints: In the new interface it is a vector of vectors of calibration pattern points in

/// the calibration pattern coordinate space (e.g. std::vector<std::vector<cv::Vec3f>>). The outer

/// vector contains as many elements as the number of pattern views. If the same calibration pattern

/// is shown in each view and it is fully visible, all the vectors will be the same. Although, it is

/// possible to use partially occluded patterns or even different patterns in different views. Then,

/// the vectors will be different. Although the points are 3D, they all lie in the calibration pattern's

/// XY coordinate plane (thus 0 in the Z-coordinate), if the used calibration pattern is a planar rig.

/// In the old interface all the vectors of object points from different views are concatenated

/// together.

/// * imagePoints: In the new interface it is a vector of vectors of the projections of calibration

/// pattern points (e.g. std::vector<std::vector<cv::Vec2f>>). imagePoints.size() and

/// objectPoints.size(), and imagePoints[i].size() and objectPoints[i].size() for each i, must be equal,

/// respectively. In the old interface all the vectors of image points from different views are

/// concatenated together.

/// * imageSize: Size of the image used only to initialize the camera intrinsic matrix.

/// * cameraMatrix: Input/output 3x3 floating-point camera intrinsic matrix

/// $A = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$. If [CALIB_USE_INTRINSIC_GUESS]

/// and/or [CALIB_FIX_ASPECT_RATIO], [CALIB_FIX_PRINCIPAL_POINT] or [CALIB_FIX_FOCAL_LENGTH]

/// are specified, some or all of fx, fy, cx, cy must be initialized before calling the function.

/// * distCoeffs: Input/output vector of distortion coefficients

/// $(k_1, k_2, p_1, p_2[, k_3[, k_4, k_5, k_6[, s_1, s_2, s_3, s_4[, \tau_x, \tau_y]]]])$.

/// * rvecs: Output vector of rotation vectors ([Rodrigues]) estimated for each pattern view

/// (e.g. std::vector<cv::Mat>). That is, each i-th rotation vector together with the corresponding

/// i-th translation vector (see the next output parameter description) brings the calibration pattern

/// from the object coordinate space (in which object points are specified) to the camera coordinate

/// space. In more technical terms, the tuple of the i-th rotation and translation vector performs

/// a change of basis from object coordinate space to camera coordinate space. Due to its duality, this

/// tuple is equivalent to the position of the calibration pattern with respect to the camera coordinate

/// space.

/// * tvecs: Output vector of translation vectors estimated for each pattern view, see parameter

/// description above.

/// * stdDeviationsIntrinsics: Output vector of standard deviations estimated for intrinsic

/// parameters. Order of deviations values:

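/// $(f_x, f_y, c_x, c_y, k_1, k_2, p_1, p_2, k_3, k_4, k_5, k_6, s_1, s_2, s_3, s_4, \tau_x, \tau_y)$. If one of the parameters is not estimated, its deviation is equal to zero.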

/// * stdDeviationsExtrinsics: Output vector of standard deviations estimated for extrinsic

/// parameters. Order of deviations values: $(R_0, T_0, \dotsc, R_{M - 1}, T_{M - 1})$ where M is

/// the number of pattern views. $R_i, T_i$ are concatenated 1x3 vectors.

/// * perViewErrors: Output vector of the RMS re-projection error estimated for each pattern view.

/// * flags: Different flags that may be zero or a combination of the following values:

/// * [CALIB_USE_INTRINSIC_GUESS] cameraMatrix contains valid initial values of

/// fx, fy, cx, cy that are optimized further. Otherwise, (cx, cy) is initially set to the image

/// center (imageSize is used), and focal distances are computed in a least-squares fashion.

/// Note that if intrinsic parameters are known, there is no need to use this function just to

/// estimate extrinsic parameters. Use [solvePnP] instead.

/// * [CALIB_FIX_PRINCIPAL_POINT] The principal point is not changed during the global

/// optimization. It stays at the center or at a different location specified when

/// [CALIB_USE_INTRINSIC_GUESS] is set too.

/// * [CALIB_FIX_ASPECT_RATIO] The functions consider only fy as a free parameter. The

/// ratio fx/fy stays the same as in the input cameraMatrix. When

/// [CALIB_USE_INTRINSIC_GUESS] is not set, the actual input values of fx and fy are

/// ignored, only their ratio is computed and used further.

/// * [CALIB_ZERO_TANGENT_DIST] Tangential distortion coefficients $(p_1, p_2)$ are set

/// to zero and stay zero.

/// * [CALIB_FIX_FOCAL_LENGTH] The focal length is not changed during the global optimization if

/// [CALIB_USE_INTRINSIC_GUESS] is set.

/// * [CALIB_FIX_K1],..., [CALIB_FIX_K6] The corresponding radial distortion

/// coefficient is not changed during the optimization. If [CALIB_USE_INTRINSIC_GUESS] is

/// set, the coefficient from the supplied distCoeffs matrix is used. Otherwise, it is set to 0.

/// * [CALIB_RATIONAL_MODEL] Coefficients k4, k5, and k6 are enabled. To provide the

/// backward compatibility, this extra flag should be explicitly specified to make the

/// calibration function use the rational model and return 8 coefficients or more.

/// * [CALIB_THIN_PRISM_MODEL] Coefficients s1, s2, s3 and s4 are enabled. To provide the

/// backward compatibility, this extra flag should be explicitly specified to make the

/// calibration function use the thin prism model and return 12 coefficients or more.

/// * [CALIB_FIX_S1_S2_S3_S4] The thin prism distortion coefficients are not changed during

/// the optimization. If [CALIB_USE_INTRINSIC_GUESS] is set, the coefficient from the

/// supplied distCoeffs matrix is used. Otherwise, it is set to 0.

/// * [CALIB_TILTED_MODEL] Coefficients tauX and tauY are enabled. To provide the

/// backward compatibility, this extra flag should be explicitly specified to make the

/// calibration function use the tilted sensor model and return 14 coefficients.

/// * [CALIB_FIX_TAUX_TAUY] The coefficients of the tilted sensor model are not changed during

/// the optimization. If [CALIB_USE_INTRINSIC_GUESS] is set, the coefficient from the

/// supplied distCoeffs matrix is used. Otherwise, it is set to 0.

/// * criteria: Termination criteria for the iterative optimization algorithm.

///

/// ## Returns

/// the overall RMS re-projection error.

///

/// The function estimates the intrinsic camera parameters and extrinsic parameters for each of the

/// views. The algorithm is based on [Zhang2000](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_Zhang2000) and [BouguetMCT](https://docs.opencv.org/4.7.0/d0/de3/citelist.html#CITEREF_BouguetMCT). The coordinates of 3D object

/// points and their corresponding 2D projections in each view must be specified. That may be achieved

/// by using an object with known geometry and easily detectable feature points. Such an object is

/// called a calibration rig or calibration pattern, and OpenCV has built-in support for a chessboard as

/// a calibration rig (see [findChessboardCorners]). Currently, initialization of intrinsic

/// parameters (when [CALIB_USE_INTRINSIC_GUESS] is not set) is only implemented for planar calibration

/// patterns (where Z-coordinates of the object points must be all zeros). 3D calibration rigs can also

/// be used as long as initial cameraMatrix is provided.
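///
/// The flag descriptions above imply how many distortion coefficients to expect back. The sketch
/// below encodes that reading; the helper name `dist_coeff_count` and its boolean parameters are
/// hypothetical, and the default of 5 coefficients (k1, k2, p1, p2, k3) when no model flag is set is
/// an assumption not stated in the text above.
///
/// ```rust
/// // Expected minimum length of distCoeffs for a given set of model flags.
/// // Each richer model includes the coefficients of the simpler ones.
/// fn dist_coeff_count(rational: bool, thin_prism: bool, tilted: bool) -> usize {
///     if tilted {
///         14 // tauX, tauY appended (CALIB_TILTED_MODEL)
///     } else if thin_prism {
///         12 // s1..s4 appended (CALIB_THIN_PRISM_MODEL)
///     } else if rational {
///         8 // k4..k6 appended (CALIB_RATIONAL_MODEL)
///     } else {
///         5 // assumed default: k1, k2, p1, p2, k3
///     }
/// }
/// ```
///
/// A caller could use such a count to sanity-check the length of the returned distCoeffs vector.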

///

/// The algorithm performs the following steps:

///

/// * Compute the initial intrinsic parameters (the option only available for planar calibration

/// patterns) or read them from the input parameters. The distortion coefficients are all set to

/// zeros initially unless some of CALIB_FIX_K? are specified.

///

/// * Estimate the initial camera pose as if the intrinsic parameters were already known. This is

/// done using [solvePnP].

///

/// * Run the global Levenberg-Marquardt optimization algorithm to minimize the reprojection error,

/// that is, the total sum of squared distances between the observed feature points imagePoints and

/// the projected (using the current estimates for camera parameters and the poses) object points

/// objectPoints. See [projectPoints] for details.

///

///

/// Note:

/// If you use a non-square (i.e. non-N-by-N) grid and [findChessboardCorners] for calibration,

/// and [calibrateCamera] returns bad values (zero distortion coefficients, $c_x$ and

/// $c_y$ very far from the image center, and/or large differences between $f_x$ and

/// $f_y$ (ratios of 10:1 or more)), then you are probably using patternSize=cvSize(rows,cols)

/// instead of using patternSize=cvSize(cols,rows) in [findChessboardCorners].

/// ## See also

/// calibrateCameraRO, findChessboardCorners, solvePnP, initCameraMatrix2D, stereoCalibrate,

/// undistort

///

/// ## C++ default parameters

/// * flags: 0

/// * criteria: TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,DBL_EPSILON)

#[inline]

pub fn calibrate_camera(object_points: &dyn core::ToInputArray, image_points: &dyn core::ToInputArray, image_size: core::Size, camera_matrix: &mut dyn core::ToInputOutputArray, dist_coeffs: &mut dyn core::ToInputOutputArray, rvecs: &mut dyn core::ToOutputArray, tvecs: &mut dyn core::ToOutputArray, flags: i32, criteria: core::TermCriteria) -> Result<f64> {

extern_container_arg!(object_points);

extern_container_arg!(image_points);

extern_container_arg!(camera_matrix);

extern_container_arg!(dist_coeffs);

extern_container_arg!(rvecs);

extern_container_arg!(tvecs);

return_send!(via ocvrs_return);

unsafe { sys::cv_calibrateCamera_const__InputArrayR_const__InputArrayR_Size_const__InputOutputArrayR_const__InputOutputArrayR_const__OutputArrayR_const__OutputArrayR_int_TermCriteria(object_points.as_raw__InputArray(), image_points.as_raw__InputArray(), image_size.opencv_as_extern(), camera_matrix.as_raw__InputOutputArray(), dist_coeffs.as_raw__InputOutputArray(), rvecs.as_raw__OutputArray(), tvecs.as_raw__OutputArray(), flags, criteria.opencv_as_extern(), ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes Hand-Eye calibration: $_{}^{g}\textrm{T}_c$

///

/// ## Parameters

/// * R_gripper2base: Rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the gripper frame to the robot base frame ($_{}^{b}\textrm{T}_g$).

/// This is a vector (`vector<Mat>`) that contains the rotation, `(3x3)` rotation matrices or `(3x1)` rotation vectors,

/// for all the transformations from gripper frame to robot base frame.

/// * t_gripper2base: Translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the gripper frame to the robot base frame (`^{b}T_{g}`).

/// This is a vector (`vector<Mat>`) that contains the `(3x1)` translation vectors for all the transformations

/// from gripper frame to robot base frame.

/// * R_target2cam: Rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the target frame to the camera frame (`^{c}T_{t}`).

/// This is a vector (`vector<Mat>`) that contains the rotation, `(3x3)` rotation matrices or `(3x1)` rotation vectors,

/// for all the transformations from calibration target frame to camera frame.

/// * t_target2cam: Translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the target frame to the camera frame (`^{c}T_{t}`).

/// This is a vector (`vector<Mat>`) that contains the `(3x1)` translation vectors for all the transformations

/// from calibration target frame to camera frame.

/// * R_cam2gripper:[out] Estimated `(3x3)` rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the camera frame to the gripper frame (`^{g}T_{c}`).

/// * t_cam2gripper:[out] Estimated `(3x1)` translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the camera frame to the gripper frame (`^{g}T_{c}`).

/// * method: One of the implemented Hand-Eye calibration methods, see cv::HandEyeCalibrationMethod

///

/// The function performs the Hand-Eye calibration using various methods. One approach consists in estimating the

/// rotation then the translation (separable solutions) and the following methods are implemented:

/// - R. Tsai, R. Lenz A New Technique for Fully Autonomous and Efficient 3D Robotics Hand/Eye Calibration \cite Tsai89

/// - F. Park, B. Martin Robot Sensor Calibration: Solving AX = XB on the Euclidean Group \cite Park94

/// - R. Horaud, F. Dornaika Hand-Eye Calibration \cite Horaud95

///

/// Another approach consists in estimating simultaneously the rotation and the translation (simultaneous solutions),

/// with the following implemented methods:

/// - N. Andreff, R. Horaud, B. Espiau On-line Hand-Eye Calibration \cite Andreff99

/// - K. Daniilidis Hand-Eye Calibration Using Dual Quaternions \cite Daniilidis98

///

/// The following picture describes the Hand-Eye calibration problem where the transformation between a camera ("eye")

/// mounted on a robot gripper ("hand") has to be estimated. This configuration is called eye-in-hand.

///

/// The eye-to-hand configuration consists in a static camera observing a calibration pattern mounted on the robot

/// end-effector. The transformation from the camera to the robot base frame can then be estimated by inputting

/// the suitable transformations to the function, see below.

///

///

///

/// The calibration procedure is the following:

/// - a static calibration pattern is used to estimate the transformation between the target frame

/// and the camera frame

/// - the robot gripper is moved in order to acquire several poses

/// - for each pose, the homogeneous transformation between the gripper frame and the robot base frame is recorded using for

/// instance the robot kinematics

///

/// - for each pose, the homogeneous transformation between the calibration target frame and the camera frame is recorded using

/// for instance a pose estimation method (PnP) from 2D-3D point correspondences

///

///
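/// As a sketch of the two transformations recorded for each pose in the steps above, under a
/// notation that is an assumption of this sketch: `^{b}T_{g}` maps gripper-frame coordinates to
/// the robot base frame, `^{c}T_{t}` maps target-frame coordinates to the camera frame, and `R`
/// and `t` denote the rotation and translation parts of each homogeneous matrix.
///
/// ```latex
/// \begin{bmatrix} X_b \\ Y_b \\ Z_b \\ 1 \end{bmatrix} =
/// \begin{bmatrix} {}^{b}R_{g} & {}^{b}t_{g} \\ 0_{1 \times 3} & 1 \end{bmatrix}
/// \begin{bmatrix} X_g \\ Y_g \\ Z_g \\ 1 \end{bmatrix}
/// \qquad
/// \begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix} =
/// \begin{bmatrix} {}^{c}R_{t} & {}^{c}t_{t} \\ 0_{1 \times 3} & 1 \end{bmatrix}
/// \begin{bmatrix} X_t \\ Y_t \\ Z_t \\ 1 \end{bmatrix}
/// ```
///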

/// The Hand-Eye calibration procedure returns the following homogeneous transformation

///

///
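/// Assuming the outputs `R_cam2gripper` and `t_cam2gripper` are written `^{g}R_{c}` and
/// `^{g}t_{c}` (a notation chosen for this sketch), the estimated transformation maps a point
/// expressed in the camera frame to the gripper frame roughly as follows:
///
/// ```latex
/// \begin{bmatrix} X_g \\ Y_g \\ Z_g \\ 1 \end{bmatrix} =
/// \begin{bmatrix} {}^{g}R_{c} & {}^{g}t_{c} \\ 0_{1 \times 3} & 1 \end{bmatrix}
/// \begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}
/// ```
///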

/// This problem is also known as solving the `AX = XB` equation:

/// - for an eye-in-hand configuration

///

///

/// - for an eye-to-hand configuration

///

///
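/// The two cases above can be sketched as follows. The notation is an assumption of this sketch:
/// `^{a}T_{b}` maps coordinates from frame `b` to frame `a`, the frames are `b` (robot base),
/// `g` (gripper), `c` (camera) and `t` (target), and the superscripts `(1)` and `(2)` index two poses.
///
/// For eye-in-hand, the target is static with respect to the base, so the base-to-target chain is
/// the same for every pose and the unknown is `X = ^{g}T_{c}`:
///
/// ```latex
/// \begin{align*}
/// {}^{b}T_{g}^{(1)} \, {}^{g}T_{c} \, {}^{c}T_{t}^{(1)} &= {}^{b}T_{g}^{(2)} \, {}^{g}T_{c} \, {}^{c}T_{t}^{(2)} \\
/// \left({}^{b}T_{g}^{(2)}\right)^{-1} {}^{b}T_{g}^{(1)} \, {}^{g}T_{c} &= {}^{g}T_{c} \, {}^{c}T_{t}^{(2)} \left({}^{c}T_{t}^{(1)}\right)^{-1} \\
/// A_i X &= X B_i
/// \end{align*}
/// ```
///
/// For eye-to-hand, the target is rigidly attached to the gripper, so the gripper-to-target chain
/// is constant and the unknown is `X = ^{b}T_{c}`:
///
/// ```latex
/// \begin{align*}
/// {}^{g}T_{b}^{(1)} \, {}^{b}T_{c} \, {}^{c}T_{t}^{(1)} &= {}^{g}T_{b}^{(2)} \, {}^{b}T_{c} \, {}^{c}T_{t}^{(2)} \\
/// \left({}^{g}T_{b}^{(2)}\right)^{-1} {}^{g}T_{b}^{(1)} \, {}^{b}T_{c} &= {}^{b}T_{c} \, {}^{c}T_{t}^{(2)} \left({}^{c}T_{t}^{(1)}\right)^{-1} \\
/// A_i X &= X B_i
/// \end{align*}
/// ```
///
/// Under this reading, the eye-to-hand case would take the base-to-gripper poses `^{g}T_{b}` in
/// place of the gripper-to-base poses, and the outputs would then hold the camera-to-base transformation.
///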

/// Note: Additional information can be found on this [website](http://campar.in.tum.de/Chair/HandEyeCalibration).

///

/// Note: A minimum of 2 motions with non-parallel rotation axes is necessary to determine the hand-eye transformation.

/// So at least 3 different poses are required, but it is strongly recommended to use many more poses.

///

/// ## C++ default parameters

/// * method: CALIB_HAND_EYE_TSAI

#[inline]

pub fn calibrate_hand_eye(r_gripper2base: &dyn core::ToInputArray, t_gripper2base: &dyn core::ToInputArray, r_target2cam: &dyn core::ToInputArray, t_target2cam: &dyn core::ToInputArray, r_cam2gripper: &mut dyn core::ToOutputArray, t_cam2gripper: &mut dyn core::ToOutputArray, method: crate::calib3d::HandEyeCalibrationMethod) -> Result<()> {

extern_container_arg!(r_gripper2base);

extern_container_arg!(t_gripper2base);

extern_container_arg!(r_target2cam);

extern_container_arg!(t_target2cam);

extern_container_arg!(r_cam2gripper);

extern_container_arg!(t_cam2gripper);

return_send!(via ocvrs_return);

unsafe { sys::cv_calibrateHandEye_const__InputArrayR_const__InputArrayR_const__InputArrayR_const__InputArrayR_const__OutputArrayR_const__OutputArrayR_HandEyeCalibrationMethod(r_gripper2base.as_raw__InputArray(), t_gripper2base.as_raw__InputArray(), r_target2cam.as_raw__InputArray(), t_target2cam.as_raw__InputArray(), r_cam2gripper.as_raw__OutputArray(), t_cam2gripper.as_raw__OutputArray(), method, ocvrs_return.as_mut_ptr()) };

return_receive!(unsafe ocvrs_return => ret);

let ret = ret.into_result()?;

Ok(ret)

}

/// Computes Robot-World/Hand-Eye calibration: the base-to-world transformation `^{w}T_{b}` and the gripper-to-camera transformation `^{c}T_{g}`

///

/// ## Parameters

/// * R_world2cam: Rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the world frame to the camera frame (`^{c}T_{w}`).

/// This is a vector (`vector<Mat>`) that contains the rotation, `(3x3)` rotation matrices or `(3x1)` rotation vectors,

/// for all the transformations from world frame to the camera frame.

/// * t_world2cam: Translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the world frame to the camera frame (`^{c}T_{w}`).

/// This is a vector (`vector<Mat>`) that contains the `(3x1)` translation vectors for all the transformations

/// from world frame to the camera frame.

/// * R_base2gripper: Rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the robot base frame to the gripper frame (`^{g}T_{b}`).

/// This is a vector (`vector<Mat>`) that contains the rotation, `(3x3)` rotation matrices or `(3x1)` rotation vectors,

/// for all the transformations from robot base frame to the gripper frame.

/// * t_base2gripper: Translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the robot base frame to the gripper frame (`^{g}T_{b}`).

/// This is a vector (`vector<Mat>`) that contains the `(3x1)` translation vectors for all the transformations

/// from robot base frame to the gripper frame.

/// * R_base2world:[out] Estimated `(3x3)` rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the robot base frame to the world frame (`^{w}T_{b}`).

/// * t_base2world:[out] Estimated `(3x1)` translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the robot base frame to the world frame (`^{w}T_{b}`).

/// * R_gripper2cam:[out] Estimated `(3x3)` rotation part extracted from the homogeneous matrix that transforms a point

/// expressed in the gripper frame to the camera frame (`^{c}T_{g}`).

/// * t_gripper2cam:[out] Estimated `(3x1)` translation part extracted from the homogeneous matrix that transforms a point

/// expressed in the gripper frame to the camera frame (`^{c}T_{g}`).

/// * method: One of the implemented Robot-World/Hand-Eye calibration methods, see cv::RobotWorldHandEyeCalibrationMethod

///

/// The function performs the Robot-World/Hand-Eye calibration using various methods. One approach consists in estimating the

/// rotation then the translation (separable solutions):

/// - M. Shah, Solving the robot-world/hand-eye calibration problem using the Kronecker product \cite Shah2013SolvingTR

///

/// Another approach consists in estimating simultaneously the rotation and the translation (simultaneous solutions),

/// with the following implemented method:

/// - A. Li, L. Wang, and D. Wu, Simultaneous robot-world and hand-eye calibration using dual-quaternions and Kronecker product \cite Li2010SimultaneousRA

///

/// The following picture describes the Robot-World/Hand-Eye calibration problem where the transformations between a robot and a world frame

/// and between a robot gripper ("hand") and a camera ("eye") mounted at the robot end-effector have to be estimated.

///

///

///

/// The calibration procedure is the following:

/// - a static calibration pattern is used to estimate the transformation between the target frame

/// and the camera frame

/// - the robot gripper is moved in order to acquire several poses

/// - for each pose, the homogeneous transformation between the gripper frame and the robot base frame is recorded using for

/// instance the robot kinematics

///

/// - for each pose, the homogeneous transformation between the calibration target frame (the world frame) and the camera frame is recorded using

/// for instance a pose estimation method (PnP) from 2D-3D point correspondences

///

///

/// The Robot-World/Hand-Eye calibration procedure returns the following homogeneous transformations

///

///

///
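/// Assuming the outputs `R_base2world`, `t_base2world` are written `^{w}R_{b}`, `^{w}t_{b}` and the
/// outputs `R_gripper2cam`, `t_gripper2cam` are written `^{c}R_{g}`, `^{c}t_{g}` (a notation chosen
/// for this sketch), the two estimated transformations act roughly as follows:
///
/// ```latex
/// \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix} =
/// \begin{bmatrix} {}^{w}R_{b} & {}^{w}t_{b} \\ 0_{1 \times 3} & 1 \end{bmatrix}
/// \begin{bmatrix} X_b \\ Y_b \\ Z_b \\ 1 \end{bmatrix}
/// \qquad
/// \begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix} =
/// \begin{bmatrix} {}^{c}R_{g} & {}^{c}t_{g} \\ 0_{1 \times 3} & 1 \end{bmatrix}
/// \begin{bmatrix} X_g \\ Y_g \\ Z_g \\ 1 \end{bmatrix}
/// ```
///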

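/// This problem is also known as solving the `AX = ZB` equation, with:

/// - `A`: `^{c}T_{w}`, the world-to-camera transformation (`R_world2cam`, `t_world2cam`)

/// - `X`: `^{w}T_{b}`, the base-to-world transformation (`R_base2world`, `t_base2world`)

/// - `Z`: `^{c}T_{g}`, the gripper-to-camera transformation (`R_gripper2cam`, `t_gripper2cam`)

/// - `B`: `^{g}T_{b}`, the base-to-gripper transformation (`R_base2gripper`, `t_base2gripper`)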

///
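/// One way to see where this equation comes from, as a sketch in which `^{a}T_{b}` is assumed to
/// map coordinates from frame `b` to frame `a` and `i` indexes the measurements: for each
/// measurement, both sides express the same base-to-camera transformation, once through the world
/// frame and once through the gripper frame.
///
/// ```latex
/// \begin{align*}
/// {}^{c}T_{w}^{(i)} \, {}^{w}T_{b} &= {}^{c}T_{g} \, {}^{g}T_{b}^{(i)} \\
/// A_i X &= Z B_i
/// \end{align*}
/// ```
///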

/// Note: At least 3 measurements are required (the size of each input vector must be greater than or equal to 3).

///

/// ## C++ default parameters

/// * method: CALIB_ROBOT_WORLD_HAND_EYE_SHAH

#[inline]